Tune relevancy with little to no coding effort

Deliver relevant results with drag-and-drop ease. Simply arrange result assortments or pin, boost, block, or bury specific resources to tune relevancy for particular search terms or category pages.

Drive faster time to value and higher engagement

Use signal-driven recommendations to connect employees with assets relevant to their roles and responsibilities before they even search for them. Optimize page zones to surface trending, frequently searched, or similar content recommendations.

Create and publish page templates with ease

Independently arrange, save, and publish page layouts without any help from IT. Take advantage of an interactive template manager to empower your knowledge teams so they can creatively optimize resource experiences.

Knowledge management features

Increase findability and productivity by making knowledge discovery more personal.

Recommendations

Aggregate and analyze user behavior and tracked signals to deliver AI-powered recommendations.

Search relevancy

Boost documents like holiday schedules and HR policies when that information is in high demand, or block them when it is out of date.

Expert finder

Help employees quickly find colleagues with the expertise they need to get the job done, and keep tribal knowledge from getting lost.

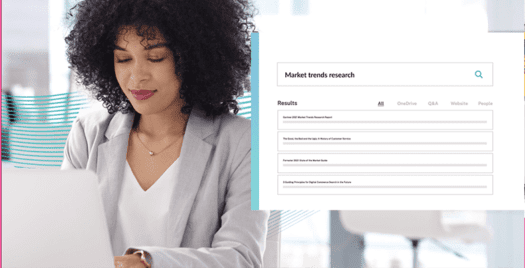

Unified view of content

One search across all internal data sources, including intranet network drives, knowledge bases, cloud storage, and data from systems of record.