What Is Natural Language Search?

Tips and tricks to learn about natural language search and how you can get more value out of the applications that incorporate search.

Natural language search is the insane idea that maybe we can talk to computers in the same way we talk to people. Absolutely nuts, I know.

With the increasing popularity of virtual assistants like Siri and Alexa, and devices like Google Home and Apple’s HomePod, natural language search is ready for prime time in the devices in our homes, in our offices, and in our pockets.

Alexa, Siri, and Google Home are Search Apps

All these devices and virtual assistants making their way into our homes and hearts have search technology at their core. Any time you query a system, database, or application and the system has to decide which results to display – or say – it’s a search application. OpenTable, Tinder, and Google Maps are all search-based applications. Search technology is at the core of nearly every popular software application you use today at work, at home, at play, at your desk, or on your smartphone.

But how you interact with these systems is changing.

The Annoyance of Search

In the old days, if you were searching a database or set of files or documents for a particular word or phrase, you’d have to learn a whole arcane set of commands and operators.

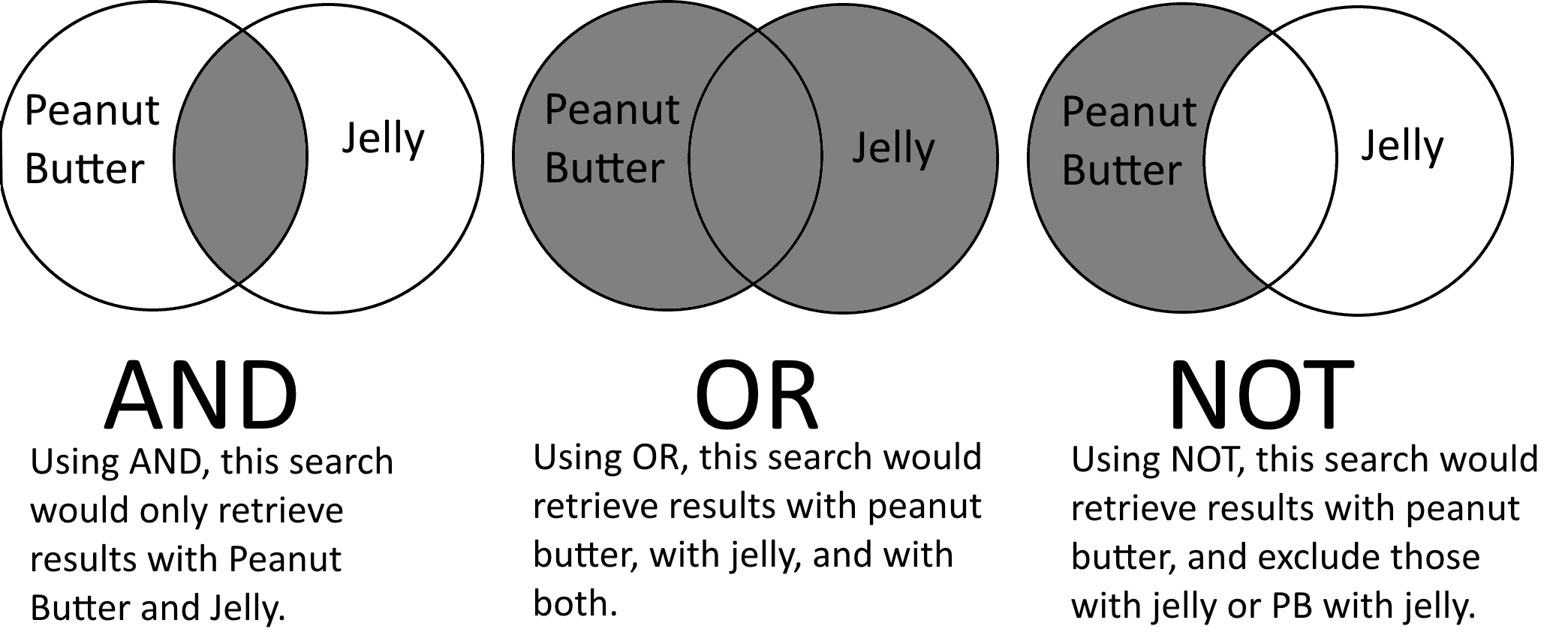

Example of the Boolean operators used by databases and applications all over the world. Diagram courtesy of Slippery Rock University.

Boolean in a Nutshell

You’d have to know Boolean operators and other logic so you could search this table WHERE this word AND that word OR that other word appear but NOT this other word and then SORT by this field. (Got it?)
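To make that concrete, here is a minimal sketch of what such a query might look like, using Python's built-in sqlite3 module. The table name, column names, and rows are all invented for illustration; a real system would have its own schema and its own dialect of operators.

```python
import sqlite3

# Hypothetical table and rows, invented purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, title TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO documents (title, body) VALUES (?, ?)",
    [
        ("Quarterly report", "revenue grew in the region"),
        ("Draft report", "revenue forecast, draft only"),
        ("Sales memo", "profit and revenue both rose"),
        ("Lunch menu", "soup and salad"),
    ],
)

# WHERE this word AND (that word OR that other word) appear,
# but NOT this other word, and then SORT by a field.
query = """
    SELECT title
    FROM documents
    WHERE body LIKE '%revenue%'
      AND (body LIKE '%region%' OR body LIKE '%profit%')
      AND body NOT LIKE '%draft%'
    ORDER BY title
"""
titles = [row[0] for row in conn.execute(query)]
print(titles)  # ['Quarterly report', 'Sales memo']
```

Even in this tiny example you need to know the table name, the column to search, and where the parentheses go, which is exactly the kind of knowledge casual users rarely have.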

Each system had its own idiosyncrasies that only experienced users would know, and you might have to run queries and reports multiple times to make sure you got the right results back – or to make sure you got all the results possible. You’d have to know the structure of the database or data set you were querying and which fields to look at.

These requirements put up barriers for people trying to find information to do their jobs at work or to do research at a library. You’d have to ask a specialist who knew the ins and outs of each system, wait for them to run the report or query for you and print out the results, and then hope those results answered the question you originally had.

Evolution of Natural Language Search

You couldn’t just say out loud what you wanted to know – in everyday language – and then have it delivered to you instantly.

But what if you could? That’s natural language search. Here are a few examples of these types of search queries:

“What was last year’s recognized revenue from the APAC region?”

“What restaurant was it Mary mentioned last week in a text message?”

“Who hosted the highest rated Oscars telecast ever and what year was it?”

And the system takes that request, whether spoken or typed into a box, and takes it apart. It figures out what you’re looking for, what you aren’t, where you’re searching, and what to include, then turns all of that into a query it can submit to a database or search system to return the results right back to you.
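As a rough illustration of that "take it apart and turn it into a query" step, here is a toy sketch in Python. It only handles one narrow family of revenue questions, and the function name, the field names, and the EMEA sample region are all made up for this example; real systems rely on far more robust language processing than a few string checks.

```python
import re
from datetime import date

def parse_question(question, today):
    """Turn a narrow family of revenue questions into a structured query dict."""
    text = question.lower()
    query = {}
    # What is being asked for?
    if "revenue" in text:
        query["metric"] = "recognized_revenue" if "recognized" in text else "revenue"
    # Resolve the relative time phrase against the current date.
    if "last year" in text:
        query["year"] = today.year - 1
    # Where should we search? Capture whatever word precedes "region".
    region = re.search(r"from the (\w+) region", text)
    if region:
        query["region"] = region.group(1).upper()
    return query

structured = parse_question(
    "What was last year's recognized revenue from the EMEA region?",
    date(2024, 6, 1),
)
print(structured)
# {'metric': 'recognized_revenue', 'year': 2023, 'region': 'EMEA'}
```

The resulting dictionary is the kind of structured request that could then be translated into a database or search query on the user's behalf.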

The Technology Behind Natural Language Search

On the back end, there are several pieces of technology at play.

Let’s say you ask your favorite nearby listening smart device:

What band is Joe Perry in?

First the device wakes up and records an audio file. The audio file gets sent across the internet and is received by the search system.

The audio file is processed by a speech-to-text API that filters out background noise, analyzes the audio to find the various phonemes, matches them to words, and converts the spoken words into a plain English sentence.

The search system then examines this query. The search engine uses natural language processing (NLP) to analyze the query and notices a two-word proper name in the sentence: Joe Perry. Picking out the people, places, and things mentioned in a data set, collection of files, or body of text is called named entity recognition, and it’s a pretty standard feature for most search applications.
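Here is a deliberately simple sketch of the idea in Python. It uses a small lookup list of known names (sometimes called a gazetteer) rather than the statistical models production systems typically use, and the entries and function name are hypothetical.

```python
import re

# Hypothetical gazetteer: a tiny lookup of known entity names and their types.
KNOWN_ENTITIES = {
    "joe perry": "PERSON",
    "aerosmith": "BAND",
    "boston": "PLACE",
}

def find_entities(sentence):
    """Return (surface text, entity type) pairs in order of appearance."""
    found = []
    for name, entity_type in KNOWN_ENTITIES.items():
        pattern = r"\b" + re.escape(name) + r"\b"
        for match in re.finditer(pattern, sentence, flags=re.IGNORECASE):
            found.append((match.start(), match.group(0), entity_type))
    # Sort by position so entities come back in reading order.
    return [(text, entity_type) for _, text, entity_type in sorted(found)]

entities = find_entities("What band is Joe Perry in?")
print(entities)  # [('Joe Perry', 'PERSON')]
```

A lookup like this only finds names it already knows, which is one reason real systems lean on trained models that can recognize names they have never seen before.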

So the system knows that the words Joe Perry refer to a person. But there might be several notable Joe Perrys in the database, so the system has to resolve these ambiguities.

Probably not the Joe Perry you’re looking for.

There’s Joe Perry the NFL football player, Joe Perry the snooker champion (totally not kidding), Joe Perry the Maine politician, and Joe Perry the popular musician. The word band in the query alerts the system that we’re probably looking for someone whose career is associated with a band, like a composer, musician, or singer. That’s how it disambiguates which Joe Perry we’re looking for.
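One simple way to picture this is as a scoring exercise: each candidate carries a set of related words, and the candidate sharing the most words with the query wins. The sketch below assumes exactly that. The context word sets are invented for illustration, and real systems use much richer signals than word overlap.

```python
import re

# The four candidates come from the article; the context words are hypothetical.
CANDIDATES = [
    {"description": "NFL football player", "context": {"football", "nfl", "team"}},
    {"description": "snooker champion", "context": {"snooker", "tournament", "champion"}},
    {"description": "Maine politician", "context": {"maine", "politician", "election"}},
    {"description": "musician", "context": {"band", "guitar", "song", "album"}},
]

def disambiguate(query, candidates):
    """Pick the candidate whose context words overlap most with the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return max(candidates, key=lambda c: len(words & c["context"]))

best = disambiguate("What band is Joe Perry in?", CANDIDATES)
print(best["description"])  # musician
```

Only the musician's context contains the word band, so that candidate scores highest for this query.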

A database of semantic information about musicians might have information about Perry’s songs, career, and yes, the bands he’s been a part of. When search engines use NLP technology to better understand user intent, it’s called semantic search.

The system takes apart the sentence and sees that the user is asking for a band associated with Joe Perry. It looks at a database of musicians, performers, songs, albums, and bands. It sees that Joe Perry is semantically associated with several bands, but the main one is Aerosmith.

The query has been spoken, converted to text, turned into a query, sent to the system and it comes back with the answer that Joe Perry is in a band called Aerosmith along with related metadata that he’s been in Aerosmith since it formed in 1970. The system puts this answer together into a sentence.
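The lookup and answer-building steps might be sketched like this in Python. The data structure, its field names, and the function name are assumptions made for the example. The Aerosmith facts come from the walkthrough above, while the second, non-primary band is an outside detail added only to show how a main band gets picked.

```python
# Hypothetical mini knowledge base of band memberships.
BAND_MEMBERSHIPS = {
    "Joe Perry": [
        {"band": "The Joe Perry Project", "primary": False},
        {"band": "Aerosmith", "since": 1970, "primary": True},
    ],
}

def answer_band_question(person):
    """Look up a person's main band and phrase the result as a sentence."""
    memberships = BAND_MEMBERSHIPS.get(person, [])
    primary = next((m for m in memberships if m["primary"]), None)
    if primary is None:
        return f"I couldn't find a band for {person}."
    answer = f"{person} has been in the band {primary['band']}"
    # Include the related metadata when it is available.
    if "since" in primary:
        answer += f" since {primary['since']}"
    return answer + "."

print(answer_band_question("Joe Perry"))
# Joe Perry has been in the band Aerosmith since 1970.
```

The sentence this returns is what would then be handed to the speech step so the device can say it out loud.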

It ships that sentence off to a text-to-speech API, which pieces the words together into speech that sounds like a human being. It sends that audio file back to the device, which answers:

Joe Perry has been in the band Aerosmith since 1970.

The question is asked just like you’d ask a human being — and answered in exactly the same way (and correctly).

Natural language search lowers the barriers to information and access, enhancing our lives at work, at play, or when trying to settle a bar bet over a piece of pop culture. When users can talk to devices just like they talk to their friends, more people can get more value out of the applications and services we build.

Top image from 1986’s Star Trek IV: The Voyage Home where Scotty tries to talk to a 1980s computer through its mouse (video).
