Benchmarking the new Solr ‘Near Realtime’ Improvements.

I’ve been working on integrating Solr into the Lucene benchmark module, and I’ve gotten the code to the point of being able to run some decent Solr NRT tests. I recently worked on re-architecting the Solr UpdateHandler as well, and I’m keen to look more deeply at some of the results of those changes. The updates to the UpdateHandler provided a series of benefits, most of which significantly improve Solr’s ability to do NRT without using clever (and usually complicating) workarounds. I likely still have some things to check, some I’s to dot and T’s to cross, but I thought I’d share an early look at my investigation into the performance changes.

To see how the recent changes have affected Solr’s performance, I decided to compare the most recent version of Solr trunk with a version from right before the UpdateHandler changes went into Solr trunk. I took the algorithm for NRT testing in Lucene and started tweaking it for use with Solr. I used my Intel Core 2 Quad @ 2.66 GHz for my test. It’s getting old, but it can still move a few bits. The results of this investigation follow.

The Process

So the first thing I did was check out the version of Solr that I wanted (revision 1141518) as well as the latest version of Solr trunk (at the time, revision 1144942). I then applied my Solr benchmark patch to each checkout and put together the following benchmark algorithm:

{

StartSolrServer

SolrClearIndex

[ "PreLoad" { SolrAddDoc > : 50000] : 4

SolrCommit

Wait(220)

[ "WarmupSearches" { SolrSearch > : 4 ] : 1

# Get a new near-real-time reader, sequentially as fast as possible:

[ "UpdateIndexView" { SolrCommit > : *] : 1 &

# Index with 2 threads, each adding 100 docs per sec

[ "Indexing" { SolrAddDoc > : * : 100/sec ] : 2 &

# Redline search (from queries.txt) with 4 threads

[ "Searching" { SolrSearch > : * ] : 4 &

# Wait 60 sec, then wrap up

Wait(60)

}

StopSolrServer

RepSumByPref Indexing

RepSumByPref Searching

RepSumByPref UpdateIndexView

This algorithm will start up the Solr example server (I’m using out of the box settings for this test), clear the current index, and then load 200,000 wikipedia docs into the index. Not a large index by any stretch, but it lets us see the timing effects of various commit and merge activities well enough to make some simple judgements. After committing, the algorithm then waits 220 seconds. This is required on the latest trunk version because commits no longer wait for background merges to complete, so we wait long enough for those merges to finish and not interfere with the benchmark. This is not necessary on the older version, where the commit call does not return until the background merges are finished.

Next we do 4 searches to warm up the index just a bit before starting a background thread that will continuously call commit sequentially, as fast as possible. Then we start two more background threads, each adding wikipedia documents at a target rate of 100 docs per second. Then we start 4 background threads that each query Solr as fast as possible. We continue this barrage for a minute and then look at the results.
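The thread layout described above can be sketched outside the benchmark module as well. The following is a minimal Python illustration, not the benchmark's actual implementation: `run_workload` and its `commit`, `add_doc` and `search` callables are hypothetical stand-ins for whatever client calls you use against Solr, and the pacing logic is only one simple way to approximate a per-thread target rate.

```python
import threading
import time


def run_workload(duration_s, commit, add_doc, search, docs_per_sec=100.0):
    """Run 1 commit thread, 2 paced indexing threads and 4 search threads.

    commit, add_doc and search are caller-supplied callables (hypothetical
    stand-ins for real Solr client calls). Returns per-task call counts.
    """
    stop = threading.Event()
    lock = threading.Lock()
    counts = {"UpdateIndexView": 0, "Indexing": 0, "Searching": 0}

    def loop(name, action, interval=0.0):
        next_run = time.monotonic()
        while not stop.is_set():
            action()
            with lock:
                counts[name] += 1
            if interval:
                # Pace this thread toward its target rate.
                next_run += interval
                delay = next_run - time.monotonic()
                if delay > 0:
                    stop.wait(delay)

    threads = [threading.Thread(target=loop, args=("UpdateIndexView", commit))]
    threads += [
        threading.Thread(target=loop, args=("Indexing", add_doc, 1.0 / docs_per_sec))
        for _ in range(2)
    ]
    threads += [
        threading.Thread(target=loop, args=("Searching", search)) for _ in range(4)
    ]
    for t in threads:
        t.start()
    time.sleep(duration_s)  # the equivalent of Wait(60) in the algorithm
    stop.set()
    for t in threads:
        t.join()
    return counts
```

Calling `run_workload(60, commit, add_doc, search)` with real client functions would mirror the one-minute run. The commit and search threads are unpaced on purpose, since both are meant to run as fast as possible.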

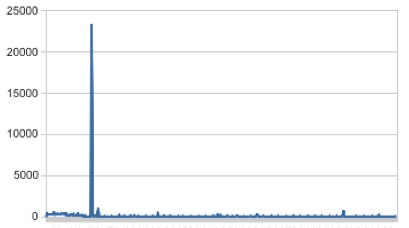

The Before Picture

This is a graph of the “refresh” times: the time it took to perform each commit and open up a new view on the index. In this case, the index was refreshed 400 times in the minute we allowed the benchmark to run for. For the most part, the refresh time really does not look too bad. The average “refresh” time is actually just 150ms. Now that Lucene and Solr work mostly per segment, this process can naturally be pretty fast. And this is a pretty small index really. There is a troubling spike in this minute though: one “refresh” took about 23 seconds! The reason for this is that the commit triggered background merges, and Solr waited for those background merges to finish before opening a new IndexSearcher and releasing the commit lock. It gets worse though. Not only was the refresh time hurt, but while that commit lock was held, neither of our 2 indexing threads could get a document into the index! They were effectively stalled. Over that minute, we were only able to index the wikipedia documents at 13.91 documents per second. Far below our target of 100 documents per second for each thread! Also, there was a very large block of time where no indexing happened at all. Less troubling, our 4 threads were able to query at a rate of 11.24 queries per second (this can likely vary wildly depending on the ‘challenge’ of the queries.txt file) [UPDATE 9/4/2011: the search rate is very low due to a problem with the initial benchmark. Many queries ended up malformed, and without so many errors, search performance jumps drastically]. But overall, this is not an optimal use of this desktop’s resources.

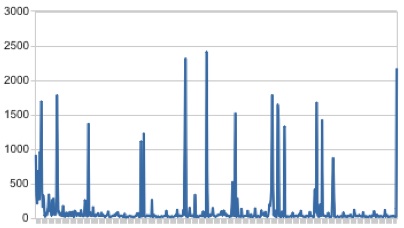

The After Picture

Now we try with the new UpdateHandler. The new UpdateHandler no longer blocks updates while a commit is in progress. Nor does it wait for background merges to complete before opening a new IndexSearcher and returning.

The results are not bad: a low average refresh time of 116.74 ms, and no 23 second spike. There are still spikes, but they are not too frequent, and stay below 2.5 seconds at worst. Micro spikes.

Even better though, our indexing rate is now 125.48 documents per second (vs 13.91 before). This is a fantastic increase – and likely absent large gaps of no indexing activity. The search performance dropped to 2.8 queries per second (from 11.24), but no doubt this is largely because of all the additional indexing activity that was able to take place. There was a lot more work which the CPUs could now do that they couldn’t before; since the indexing threads soaked up more CPU resources, queries were allocated fewer resources.

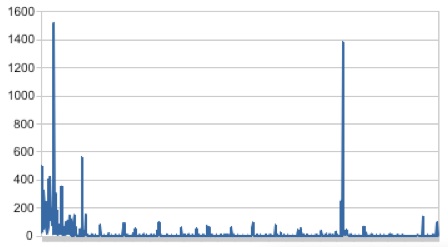

The After Picture With Lucene NRT

While I was changing around the UpdateHandler, a simple natural extension was to allow the use of Lucene’s NRT feature when opening new views of the index. This feature allows you to skip certain steps that a full commit performs. The tradeoff is that nothing is guaranteed to be on stable storage, but the benefit is very fast “refresh” times.

To take advantage of this in Solr, we added a new concept that I called a ‘soft’ commit. A soft commit returns quickly, but does not commit documents durably to stable storage. You must also occasionally call ‘hard’ commits to commit to stable storage. A ‘soft’ commit will refresh a SolrCore’s view of the index however.
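As a rough illustration of the difference from a client's point of view, here is a minimal Python sketch that builds the update request for each kind of commit. It assumes the `softCommit=true` request parameter as found in released Solr 4.x (the trunk revision benchmarked here may differ) and the example server's default address. The function names are made up for this sketch.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def commit_url(base_url, soft=False):
    """Build an update URL that triggers a soft or a hard commit.

    Assumes the softCommit request parameter of released Solr 4.x.
    """
    params = {"softCommit": "true"} if soft else {"commit": "true"}
    return f"{base_url}/update?{urlencode(params)}"


def send_commit(base_url, soft=False):
    """Send the commit request and return the HTTP status code."""
    with urlopen(commit_url(base_url, soft), timeout=10) as resp:
        return resp.status
```

For example, `commit_url("http://localhost:8983/solr", soft=True)` yields `http://localhost:8983/solr/update?softCommit=true`, while the default call asks for an ordinary hard commit.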

You can now also set up a ‘soft’ auto-commit in solrconfig.xml. So you might, for example, set up a soft commit to occur every second or so, and a standard commit to occur every 5 minutes.

To test the new ‘soft’ commit feature, I changed the background commit line in the algorithm to:

[ "UpdateIndexView" { SolrCommit(soft) > : *] : 1 &

and ran the benchmark again.

Looking at the graph, it looks like the micro spikes are perhaps a little less frequent. More importantly though, the average “refresh” time has dropped from about 117ms to just 49ms. In the old case we were able to refresh the view 6.67 times per second. In the new case without soft commit it was 8.56 times per second, and in the new case *with* soft commit, we were able to refresh the index view 22.18 times per second. We were also still able to index at 130 documents per second while running 3.64 queries per second.
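Because the commit thread runs sequentially, the refresh rate is roughly the inverse of the average refresh time. Here is a minimal sketch of that arithmetic, using the two hard-commit averages quoted in this post. The function name is illustrative, and the calculation ignores any time spent between commits.

```python
def refreshes_per_second(avg_refresh_ms):
    """Approximate refresh rate for a loop that commits back to back."""
    return 1000.0 / avg_refresh_ms


# Average refresh times in milliseconds, taken from the runs described above.
runs = [
    ("old UpdateHandler", 150.0),
    ("new UpdateHandler, hard commit", 116.74),
]

for label, avg_ms in runs:
    rate = refreshes_per_second(avg_ms)
    print(f"{label}: {rate:.2f} refreshes/sec, about {rate * 60:.0f} in a 60 second run")
```

For the old UpdateHandler, 150 ms per refresh works out to about 6.67 refreshes per second, which matches the 400 refreshes seen in the one-minute run.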

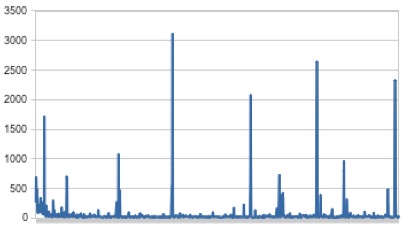

One More Benchmark

Let’s try one more interesting benchmark. We tried to index at 200 documents per second in the previous tests (2 threads at 100 each), which my poor machine could not deliver on. So really, we just maxed out on indexing. In this test, I set the indexing rate to something achievable, rather than something that completely swamps the CPU, and looked at the results. This benchmark again used ‘soft’ commits.

Things are looking pretty nice: an average of only 7.5 ms per refresh. That is a refresh rate of 132.6 times per second. Queries per second have also risen to nearly 6 from a little over three and a half. This is an interesting result, and shows that there is still some interesting investigating to do with various settings and algorithm changes.

Conclusion

It looks like NRT in Solr has taken a nice step forward, and these improvements will be available in Solr 4.0. There is still more to do though: certain features, such as faceting and function queries (edit: when you use ord), do not yet all work per segment. This means that using them can require more time than you might like when ‘refreshing’ the index view. Eventually we hope to improve most of those cases even further. But even when using those features, in many cases these changes will still allow you to significantly decrease the latency between indexing a document and being able to search it, without resorting to clever tricks like juggling SolrCores.

Hopefully this was a fun glimpse at some of the improvements. There is a lot left to look into.