Solr Search Relevance Testing

Many people focus purely on the speed of search, often neglecting the quality of the results produced by the system. In most cases, people test out some small set of queries, eyeball the top five or ten and then declare the system good enough. In other cases, they have a suite of test queries to run, but they are at a loss for how to fix any issues that arise.

Solving relevance problems takes a systematic approach, a set of useful tools and a dose of patience. This article outlines several approaches and tools. The patience part will come from knowing the problem is being looked at in a pragmatic way that will lead to a solution instead of a dead end.

At some point during the construction, testing or deployment of a search application, every developer will encounter the “relevance problem”. Simply put, some person using the system will enter their favorite query (yes, everyone has one) into the system and the results will be, to put it nicely, less than stellar. They are now demanding that it gets fixed.

For example, I was on-site with a customer training their developers and assessing their system when I suggested a query having to do with my hometown of Buffalo, MN (see, I told you we all have favorite queries), knowing that information should be in their system. As you can guess, the desired result did not come up and we then had to work through some of the techniques I’m about to describe. For closure’s sake, the problem was traced back to bad data generated during data import and was fixed by the next day.

You might wonder how these bad results can happen, since the system was tested over and over with a lot of queries (likely your “favorites”) and the results were always beautiful. You might also wonder if the whole application is bad and how it can be fixed. How do you know if fixing that one query is going to break everything else?

Not to worry: the relevance problem can be addressed head-on and in a systematic way that will answer all of these questions. Specifically, this article will lay out how to test a search application’s relevance performance and determine the cause of the problem. In a second article, I’ll discuss techniques for fixing relevance issues.

To get started, a few definitions are in order. First and foremost, relevance in the context of search measures how well a set of results meets the need of the user querying the system. Unfortunately, definitions of relevance always have an element of subjectivity in them due to user interaction. Thus, relevance testing should always be addressed across as many users as possible so that the subjectivity of any single user will be replaced by the objectivity of the group as a whole. Furthermore, relevance testing must always be about the overall net gain in the system versus any single improvement for one query or one user.

To help further define relevance, two more concepts are helpful:

- Precision is the percentage of documents in the returned results that are relevant.

- Recall is the percentage of relevant results returned out of all relevant results in the system. Obtaining perfect recall is trivial: simply return every document in the collection for every query.

Given precision and recall definitions, we can now quantify relevance across users and queries for a collection. In these terms, a perfect system would have 100% precision and 100% recall for every user and every query. In other words, it would retrieve all the relevant documents and nothing else. In practical terms, when talking about precision and recall in real systems, it is common to focus on precision and recall at a certain number of results, the most common (and useful) being ten results.
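To make the two definitions concrete, here is a minimal Java sketch that computes precision and recall at a cutoff. The class name, method names and document ids are made up for illustration, and it assumes binary judgments (a document is either relevant or not). Precision is computed over the documents actually returned up to the cutoff, which is one of several conventions in use.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Precision and recall at a cutoff k, using binary relevance judgments. */
final class RelevanceMetrics {

    /** Fraction of the returned documents (up to k) that are judged relevant. */
    static double precisionAtK(List<String> ranked, Set<String> relevant, int k) {
        int cutoff = Math.min(k, ranked.size());
        if (cutoff == 0) {
            return 0.0; // nothing returned, so nothing relevant was returned
        }
        return (double) countRelevant(ranked, relevant, cutoff) / cutoff;
    }

    /** Fraction of all judged-relevant documents that appear in the top k. */
    static double recallAtK(List<String> ranked, Set<String> relevant, int k) {
        if (relevant.isEmpty()) {
            return 0.0; // recall is undefined without relevant documents; 0 is a convention here
        }
        int cutoff = Math.min(k, ranked.size());
        return (double) countRelevant(ranked, relevant, cutoff) / relevant.size();
    }

    private static int countRelevant(List<String> ranked, Set<String> relevant, int cutoff) {
        int hits = 0;
        for (String id : ranked.subList(0, cutoff)) {
            if (relevant.contains(id)) {
                hits++;
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Hypothetical document ids and judgments, for illustration only.
        List<String> ranked = Arrays.asList("d1", "d7", "d3", "d9", "d4");
        Set<String> relevant = new HashSet<>(Arrays.asList("d1", "d3", "d5", "d8"));
        System.out.println("P@5 = " + precisionAtK(ranked, relevant, 5));
        System.out.println("R@5 = " + recallAtK(ranked, relevant, 5));
    }
}
```

In the sample data, two of the five returned documents are relevant and two of the four relevant documents were returned, so the two measures differ even though the same hits are being counted.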

Practically speaking, there are many factors that go into relevance beyond just the definitions. In designing your system for relevance, consider the following:

- Is it better to be accurate or to return as many feasible matches as possible?

- How important is it to avoid embarrassing results?

- What factors besides pure keyword matches matter? For instance, do users want results for items that are close to them physically (aka “local” search) or do they prefer newer results over older? In the former case, adding spatial search can help, while in the latter sorting by date will help.

With these factors in mind, you can then tailor the testing and resulting work towards achieving those goals.

Now that I’ve defined relevance and some ways of measuring it, it is time to learn how to determine it in an actual application. After that, I’ll discuss how to find out where relevance issues are coming from and I’ll walk through some common examples of relevance debugging.

Determining Relevance Quality

There are many ways of determining relevance in a search application, ranging from low cost to expensive. Some are better than others, but none are absolute. To determine relevance, you need at least three things:

- A collection of documents

- A set of queries

- A set of relevance judgments

The first item in the list is the easiest to obtain, while judgments are often the most difficult since they are the most time consuming to produce. Table 1 discusses some of the ways these three items get combined to produce a relevance assessment.

Table 1. Relevance Testing Options

| Name | Description | Pros | Cons |
|---|---|---|---|
| Ad-hoc | Developers, QA, business people dream up queries, enter them into the system and eyeball the results. Feedback is usually broad and non-specific, as in “this query was good” or “the results suck”. | Low initial cost, low startup costs, gives a general overall sense of the system. Better than nothing? | Not repeatable and not reliable. Doesn’t produce precision/recall information. |
| Focus Groups | Gather a set of real users and have them interact with the system over some period of time. Log everything they do and explicitly ask them for feedback. | Low cost, if done online. Feedback and logs are quite useful, especially if users feel invested in the process. May be possible to produce precision/recall info if users provide feedback. | The results may not extrapolate to a broader audience, depending on how well the users represent your target audience. |
| TREC and other Public Evaluations | Run a relevance study using a set of queries, documents and relevance judgments created by a third party group. The most popular instance of this is the Text REtrieval Conference (TREC) held by the National Institute of Standards and Technology (NIST). | Relatively easy to use, test and compare to previous runs. Completely repeatable. Good as a part of a larger evaluation, but should not be the sole means of testing. | The collection is not free or open. Doing well in TREC doesn’t necessarily translate to doing well in real life. |
| Online Ratings | Let users rate documents using a numeric or “star” system. | Similar to clickthrough tracking, but explicitly marks documents as being relevant and thus removes doubt about users’ intent. Can be fed back into the system to affect scoring. | Users can game the system, but over time the collective should outweigh any specific user input. |
| Log Analysis on a Beta Production Site | Deploy a live system to a large audience and let them kick the tires. You should only do this once you are reasonably confident things work well. It is imperative to have good logging in place first, which means thinking about the things you want to log, such as queries, results, clickthrough rates, etc. Finally, invite feedback from your users. Mix these factors together and pay attention to trends and red flags. | What’s better than real users? This approach is essentially a scaled up version of the Focus Group. | What’s worse than real users? It’s scary and it is expensive, but at some point you have to do it anyway, right? Also, it is difficult to do in non-hosted environments. |
| A/B Testing | Assign a percentage of users’ queries to go to one search system, while the remaining percentage searches an alternate system. After an appropriate period of time, evaluate the choices made by those in the A group and those in the B group to see if one group had better results than the other. If feasible, have the users in each group rate the results. | Combine with log analysis to get a good picture of what people prefer. | Requires setting up and maintaining two systems in production. Difficult to do in non-server based applications. |
| Empirical Testing | Given a set of queries from beta testing or an existing system, select the top X queries in terms of volume and Y randomly selected queries not in the top X. Have your QA or business team execute the queries against the system and rate the top five or ten results as relevant, somewhat relevant and not relevant. Alternatively, you could ask them to make binary decisions (relevant or not) or add in a fourth option: embarrassing. X is usually between 25 and 50 and Y is often between 10 and 20, but you should select based on available time and resources. | Real queries, real documents, real results. Laser-like focus on those queries that are most important to your system, without neglecting the others. Also allows unhappy users to directly and quantifiably show how the system doesn’t meet their expectations. | Time consuming depending on your choice of X and Y. Judgments are still subjective and may not extrapolate to others. |

In reality, you will likely want to adopt several of the approaches outlined in the table above. Of these evaluation methods, the ones that will produce the highest quality of results are beta testing with log analysis, A/B testing with ratings and empirical testing, since they are direct tests of your application and alternates. Online ratings can also be quite useful, but require you to be in production for some period of time first. I’ll cover log analysis and empirical testing in greater detail in the next two sections. Ultimately, you will want a process that is repeatable, verifiable and feasible for your team to sustain over the life of the application.

TREC-style testing is often good for comparing two different engines or underlying search algorithms. It is also a good starting point, since it is relatively cheap to get up and running. Oftentimes it is just as useful to read the associated TREC literature and think about how others have solved similar problems. Last, TREC testing gives you nice, easy to understand numbers summarizing the results. Avoid, however, the tendency to spend a lot of time tuning your system for TREC. It is often possible to eke out marginal improvements in TREC at the cost of system complexity and real-world need.

Log Analysis

Log analysis involves parsing through your logs and tracking queries, number of results, result clickthrough rates, pagination requests and, if possible, time spent on each page. All of these should be tracked both in aggregate and on a per-user basis. For the queries, you should also track the number of results per query (pay special attention to those that returned no results), as well as the most popular queries and the most popular terms and phrases. Then, for some sampling of queries (likely the top 50 and some other random queries, as in empirical testing), correlate the number of results that were clicked on and the time spent on those results (if it is discernible). Similarly, account for how many times people had to click through to subsequent pages. The goal of all this is to get a sense of how successful users are at finding what they need. If they are paging a lot, or clicking a lot of results but not spending time reading them, chances are they are not finding what they need.

Additionally, analyzing queries to correlate similar queries can be helpful. For instance, if a user enters one or two keywords and then modifies that query a few times, chances are they are not finding what they need or they are getting too many results. It is also useful to track their clickthrough rate on other display features like facets, spelling corrections, auto suggest selections, related search suggestions and “more like this” requests.

Once you have all this information, you can store it and then compare it with other periods in your logs to see if you notice useful trends. For instance, are users spending more time on results or less? Are there fewer zero-result queries and fewer no-clickthrough queries? Analysis should also lead you to areas that are underperforming, or that you at least suspect are underperforming, such as the zero-result queries or the no-click queries. Keep in mind, though, that a zero-result query may simply reflect a lack of relevant information in the collection, and that a no-click query may mean the user got the answer solely from the titles and snippets of the search results. Finally, keep in mind that log analysis is lacking in actual relevance assessment. At best, you have estimates of what your users think is relevant. Log analysis will undoubtedly yield a lot of data over time and it can quickly take on a life of its own, so don’t get stuck in the details for very long.
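As a rough illustration of the kind of aggregate numbers worth tracking from period to period, the following Java sketch computes a zero-result rate and a no-click rate. The Entry fields and the sample queries are hypothetical; what you can actually compute depends on what your logging captures.

```java
import java.util.Arrays;
import java.util.List;

/** Aggregate success signals computed from search log entries. */
final class SearchLogSummary {

    /** One logged search; these fields are assumptions about what was logged. */
    static final class Entry {
        final String query;
        final int numResults;
        final int clicks;

        Entry(String query, int numResults, int clicks) {
            this.query = query;
            this.numResults = numResults;
            this.clicks = clicks;
        }
    }

    /** Share of all searches that returned no results. */
    static double zeroResultRate(List<Entry> log) {
        if (log.isEmpty()) {
            return 0.0;
        }
        long zero = log.stream().filter(e -> e.numResults == 0).count();
        return (double) zero / log.size();
    }

    /** Share of searches with at least one result that received no clicks. */
    static double noClickRate(List<Entry> log) {
        long withResults = log.stream().filter(e -> e.numResults > 0).count();
        if (withResults == 0) {
            return 0.0;
        }
        long noClick = log.stream().filter(e -> e.numResults > 0 && e.clicks == 0).count();
        return (double) noClick / withResults;
    }

    public static void main(String[] args) {
        // Made-up log entries, for illustration only.
        List<Entry> log = Arrays.asList(
            new Entry("ipod", 12, 1),
            new Entry("flux capacitor", 0, 0),
            new Entry("gb", 6, 0),
            new Entry("memory", 3, 2));
        System.out.println("zero-result rate: " + zeroResultRate(log));
        System.out.println("no-click rate: " + noClickRate(log));
    }
}
```

Keeping the two rates separate matters because, as noted above, they have different benign explanations: a zero-result query may have no relevant content to find, while a no-click query may have been answered by the snippets.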

Empirical Testing

Similar to log analysis, empirical testing uses real data and real user queries, but goes one step further and adds in real relevance judgments. In most cases, empirical testing should be done with at least the top 50 most popular queries and 20 or so random queries. If your application receives a particularly wide distribution of queries, you may wish to increase the number of random queries selected. Once the queries have been selected, at least one person (ideally two or three, all of whom are non-developers) should run the queries through the system. For each query, they should rate each of the top five or ten results as either relevant or not relevant. In many cases, it may make more sense to rate them as one of: relevant, somewhat relevant, not relevant, embarrassing, as relevance is seldom black and white. The goal of all this is to maximize relevant results while minimizing embarrassing and not relevant ones.
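The query selection step can be sketched in a few lines of Java. This is only one way to do it: the class and method names are invented for the example, and the query volumes would come from your own logs.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

/** Picks an evaluation set: the most frequent queries plus a random sample of the rest. */
final class QuerySampler {

    /** Returns the topX queries by volume, followed by up to randomY queries drawn from the remainder. */
    static List<String> sample(Map<String, Integer> volumeByQuery, int topX, int randomY, Random rng) {
        List<Map.Entry<String, Integer>> byVolume = new ArrayList<>(volumeByQuery.entrySet());
        byVolume.sort((a, b) -> Integer.compare(b.getValue(), a.getValue())); // highest volume first

        List<String> selected = new ArrayList<>();
        List<String> tail = new ArrayList<>();
        for (int i = 0; i < byVolume.size(); i++) {
            String query = byVolume.get(i).getKey();
            if (i < topX) {
                selected.add(query);
            } else {
                tail.add(query);
            }
        }
        Collections.shuffle(tail, rng); // random order, so the first randomY are a random sample
        selected.addAll(tail.subList(0, Math.min(randomY, tail.size())));
        return selected;
    }

    public static void main(String[] args) {
        // Made-up query volumes, for illustration only.
        Map<String, Integer> volume = new LinkedHashMap<>();
        volume.put("ipod", 900);
        volume.put("memory", 450);
        volume.put("gb", 120);
        volume.put("hard drive", 80);
        volume.put("flux capacitor", 1);
        System.out.println(sample(volume, 2, 2, new Random()));
    }
}
```

Passing in the Random instance lets you fix a seed when you want the same random queries in every run, which helps when the judgments are later reused for regression testing.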

Once the rating is done, you can then go through the results and identify where you did well and where you need improvement, including generating precision/recall information, as in TREC-style testing. In some cases, you may learn as much from where you did well as you do from where you did poorly. Finally, do this sort of empirical testing as often as you can stand, or at least when you make significant changes to the system or notice new trends in your top queries. Over time and with your improvements, you can begin to use the judgments as a semi-automated regression test.

With any of the methods outlined above, you will end up with a set of queries that you feel underperform. From these, you can begin the debugging process, which I’ll walk through in the next section.

Debugging Relevance Issues

Debugging relevance issues is much like Edison’s genius equation: 1% inspiration and 99% perspiration. Debugging search systems is time consuming, yet necessary. It can be both frustrating and enjoyable within minutes of each other. If done right, it should lead to better results and a deeper understanding of how your system works.

Given one or more queries determined by the methods outlined above, start by doing an assessment of the queries. In this assessment, take note of misspellings, jargon, abbreviations, case sensitivity mismatches and the potential use of more common synonyms. Also track which queries returned no results and set them aside. If you have judgments, run the queries and see if you agree with the judgments or not. If you don’t have judgments, record your own. This isn’t to second guess the judge so much as to get a better feel for where to spend your time or apply any system knowledge you might have that the judge doesn’t. Take notes on your first impressions of the queries and their results. Prioritize your work based on how popular the query is in terms of query volume. While it is good to investigate problems with infrequent queries, spend most of your time on the bigger ones first. As you gain experience, this preliminary assessment will give you a good idea of where to dig deeper and where to skip.

For all the queries where spelling or synonyms (jargon, abbreviations, etc.) are an issue, try out some alternate queries to see if they produce better results (better yet, ask your testers to do so) and keep a list of what helped and what didn’t, as it will be used later when fixing the problems. You might also try systematically working through a set of queries, removing words, adding words, changing operators and changing term boosts.

Next, take a look at how the queries were parsed. What types of queries were created? Are they searching the expected Fields? Are the right terms being boosted? Do phrase queries have an associated slop? Also, as a sanity check, make sure your query time analysis matches your indexing analysis. (I’ll discuss a technique for doing this later.) For developers that create their own queries programmatically, analysis mismatch is a subtle error that can be hard to track down. Additionally, look at what operators are used and whether any term groupings are incorrect. Finally, is the query overly complex? At times, it may seem beneficial to pile on more and more query “features” to cover every little corner case, but this doesn’t always yield the expected results as it is sometimes hard to predict the effects and it will make debugging much more complex. Instead, carefully analyze what the goal is of adding the new feature and then run it through your tests to see the effect.

Note

In Solr the final Query can be obtained by adding &debugQuery=true to your input query. In Lucene, you will have to log the final Query to see it.

Examining Explanations

If you know lower-ranked results are better than higher-ranked results, or if you’re just curious about why something scored the way it did, then it is time to look at Lucene and Solr’s explain functionality to understand how each score was computed. In Lucene, explain is a method on the Searcher with the following signature:

public Explanation explain(Query query, int doc) throws IOException

Simply pass in the Query and Lucene’s internal document id (returned by TopDocs/Hits) and display/access the Explanation results. In Solr, add on the debugQuery=true parameter to get back a bunch of information about the search, including explanations for all the results.

To see explanations in action, use the Solr tutorial example and data, and then try the query:

http://localhost:8983/solr/select/?q=GB&version=2.2&start=0&rows=10&indent=on&debugQuery=true&fl=id,score

In my case, Solr returns the following six results:

<result name="response" numFound="6" start="0" maxScore="0.18314168">
  <doc>
    <float name="score">0.18314168</float>
    <str name="id">6H500F0</str>
  </doc>
  <doc>
    <float name="score">0.15540087</float>
    <str name="id">VS1GB400C3</str>
  </doc>
  <doc>
    <float name="score">0.14651334</float>
    <str name="id">TWINX2048-3200PRO</str>
  </doc>
  <doc>
    <float name="score">0.12950073</float>
    <str name="id">VDBDB1A16</str>
  </doc>
  <doc>
    <float name="score">0.103600584</float>
    <str name="id">SP2514N</str>
  </doc>
  <doc>
    <float name="score">0.077700436</float>
    <str name="id">MA147LL/A</str>
  </doc>
</result>

Now, let’s say I think the sixth result is better than the first (note that this is purely an example; I would never worry about result ordering within the top ten unless requirements explicitly stated that a result must occur at a particular spot for editorial reasons). Seeing that the score of the sixth item is 0.08 (rounded) compared to 0.18 for the first, I would look at the explanations of the two to see the reasoning behind the scores. In this case, the explanation for result one is:

<str name="6H500F0">
0.18314168 = (MATCH) sum of:
  0.18314168 = (MATCH) weight(text:gb in 1), product of:
    0.35845062 = queryWeight(text:gb), product of:
      2.3121865 = idf(docFreq=6, numDocs=26)
      0.15502669 = queryNorm
    0.5109258 = (MATCH) fieldWeight(text:gb in 1), product of:
      1.4142135 = tf(termFreq(text:gb)=2)
      2.3121865 = idf(docFreq=6, numDocs=26)
      0.15625 = fieldNorm(field=text, doc=1)
</str>

while the sixth result yields:

<str name="MA147LL/A">
0.07770044 = (MATCH) sum of:
  0.07770044 = (MATCH) weight(text:gb in 4), product of:
    0.35845062 = queryWeight(text:gb), product of:
      2.3121865 = idf(docFreq=6, numDocs=26)
      0.15502669 = queryNorm
    0.21676749 = (MATCH) fieldWeight(text:gb in 4), product of:
      1.0 = tf(termFreq(text:gb)=1)
      2.3121865 = idf(docFreq=6, numDocs=26)
      0.09375 = fieldNorm(field=text, doc=4)
</str>

An examination of these results shows that most values are the same, with the exception of the TF (term frequency) and fieldNorm values. The first document has “gb” occurring twice in the “text” Field, giving a TF value of 1.414… ($2^{0.5}$, the square root of the term frequency), while the other document only has “gb” occurring once, yielding a TF equal to 1. The fieldNorm value is a little bit trickier to interpret since it rolls together several factors: Document boost, Field boost and length normalization. In order to compare this value, I went back to each of the documents and confirmed that neither of them was boosted during indexing, implying that the sole factor in the fieldNorm is the length normalization.

Note

Length normalization is a common search mechanism for balancing out the fact that longer documents will more than likely have more mentions of a word than shorter documents, but that doesn’t mean they are any more relevant than the shorter document. In fact, all else being equal, most people would rather read a short document than a long document. The default calculation of Length Normalization is:

$$\text{len norm} = \frac{1}{(\text{num terms})^{0.5}}$$

In this case, the first document is shorter, thus yielding a larger fieldNorm value. Combining the larger fieldNorm with the larger TF value leads to the first document ranking higher. If I really wanted to make the sixth result better, I could try one or more of the ideas I discuss in my article on findability.
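As a check on the reading above, the two scores can be recomputed by hand from the factors printed in the explanations. The subscripts 1 and 6 are just labels for the first and sixth results; all numbers are taken from the explain output and rounded to five places.

```latex
\begin{align*}
\text{queryWeight} &= \text{idf} \times \text{queryNorm}
  = 2.3121865 \times 0.15502669 \approx 0.35845 \\
\text{fieldWeight}_1 &= \text{tf} \times \text{idf} \times \text{fieldNorm}
  = 1.4142135 \times 2.3121865 \times 0.15625 \approx 0.51093 \\
\text{score}_1 &= \text{queryWeight} \times \text{fieldWeight}_1
  \approx 0.35845 \times 0.51093 \approx 0.18314 \\
\text{fieldWeight}_6 &= 1.0 \times 2.3121865 \times 0.09375 \approx 0.21677 \\
\text{score}_6 &= \text{queryWeight} \times \text{fieldWeight}_6
  \approx 0.35845 \times 0.21677 \approx 0.07770 \\
\frac{\text{score}_1}{\text{score}_6} &= \frac{1.4142135}{1.0} \times \frac{0.15625}{0.09375} \approx 2.36
\end{align*}
```

Since the query weight and idf are shared, the whole gap between the two documents comes from the TF ratio (about 1.41) and the fieldNorm ratio (about 1.67). Inverting the length normalization formula suggests roughly 41 terms for the first document and roughly 114 for the sixth; treat these as rough estimates, since Lucene stores the norm at reduced precision.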

As queries become more complex, so do the explanations. The key to dealing with the complexity is to focus on those factors that are different. Once you have a sense of the differences, take a deeper look at the factors involved. One of the most common things to look at is the analysis of a document, which is covered in the tools section below.

Finding Content Issues

Many relevance issues are solved simply by double checking that input documents were indexed and that the indexed documents have the correct Fields and analysis results. Also double check that queries are searching the expected Fields. For existing indexes, you may also wish to iterate over your index and take an inventory of the existing Fields. While there is nothing wrong with a Document not having a Field, it may be a tipoff to something wrong when a lot of Documents are missing a Field that you commonly search.

Other Debugging Challenges

While explanations and other debugging tools all can help figure out relevance challenges, there are many subtle insights that come solely by working through your specific application. In particular, dealing with queries that return zero results can be especially problematic, since it isn’t clear whether the issues are due to problems in the engine (analysis, query construction, etc.) or if there truly are no relevant documents available. To work through zero result issues, create alternate forms of the query by adding synonyms, changing spellings or trying other word level modifications. It may also be helpful to look in the logs to see what other queries the user submitted around the time of the bad query and try those, especially if they resulted in clickthroughs. Next, I might try to determine if there are facets or other navigational aids that might come close to finding relevant documents. For instance, if the query is looking for a “flux capacitor” and it isn’t finding any results, perhaps the facets contain something like “time machine components” which can then be explored to see if this much smaller set of documents does contain a relevant document. If it does, then the documents can be assessed as to why they don’t match, as discussed above.
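Generating the alternate forms by hand gets tedious, so a small helper can produce them for you. The sketch below swaps one term at a time for each alternative in a lookup table. The class name and the table of alternatives are hypothetical; in practice the alternatives might come from your synonym list or from spelling corrections seen in the logs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

/** Produces alternate forms of a zero-result query for manual retesting. */
final class QueryVariants {

    /** Swaps one term at a time for each of its listed alternatives. */
    static List<String> variants(String query, Map<String, List<String>> alternatives) {
        String[] terms = query.trim().toLowerCase(Locale.ROOT).split("\\s+");
        Set<String> out = new LinkedHashSet<>(); // keeps insertion order, drops duplicates
        for (int i = 0; i < terms.length; i++) {
            List<String> alts = alternatives.getOrDefault(terms[i], Collections.<String>emptyList());
            for (String alt : alts) {
                String[] copy = terms.clone();
                copy[i] = alt;
                out.add(String.join(" ", copy));
            }
        }
        return new ArrayList<>(out);
    }

    public static void main(String[] args) {
        // Hypothetical synonyms and misspellings, for illustration only.
        Map<String, List<String>> alternatives = new HashMap<>();
        alternatives.put("flux", Arrays.asList("flow"));
        alternatives.put("capacitor", Arrays.asList("capaciter", "condenser"));
        for (String variant : variants("flux capacitor", alternatives)) {
            System.out.println(variant);
        }
    }
}
```

Changing only one term per variant keeps it clear which substitution made the difference when a variant suddenly returns results.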

Tools for Debugging

There are several helpful tools for debugging relevance problems, some of which are outlined in the sections below.

TREC Tools

When working with TREC, there are tools available for running experiments (see Lucene’s contrib/benchmark code) and checking the evaluations. In particular, the trec_eval program has been around for quite some time and provides information about precision and recall for TREC-style experiments.

Analysis Tools

In many cases, search problems can be solved through analysis techniques. To understand analysis problems better, it is worthwhile to make it easy to spit out the results of analysis. In Lucene, this can be done through some simple code, such as:

Analyzer analyzer = new StandardAnalyzer();//replace with your analyzer

TokenStream stream = analyzer.tokenStream("foo", new StringReader("Test String Goes here"));

Token token = new Token();

while ((token = stream.next(token)) != null) {

System.out.println("Token: " + token);

}

Obviously, you will want to make this generic to handle any input string and a variety of Analyzers.

While the code above is pretty simple, Solr makes examining analysis output dead-simple. Additionally, even if you use nothing else from Solr, using it to debug analysis will save you a lot of time.

To get to Solr’s Analysis tool, get Solr running and point a browser at http://localhost:8983/solr/admin/analysis.jsp?highlight=on. You should see something like Figure 1:

Figure 1. Solr Analysis Screenshot

An example of Solr’s Analysis tool

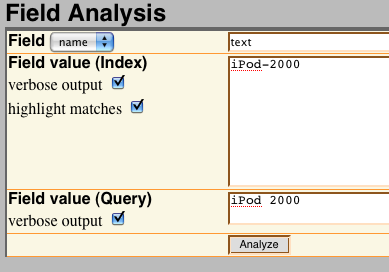

From the admin screen, both documents and queries can be entered and the results can be analyzed. Checking the “highlight matches” option will show where, potentially, a query matches a document. For example, if I’m exploring how Solr’s WordDelimiterFilter affects queries, I might enter the options in the figure below. Note, the WordDelimiterFilter is used to split up words based on transitions within the word like hyphens, case change, alpha-numeric transitions and other things.

Figure 2. WordDelimiterFilter Example

An example of analysis text with intra-word delimiters such as hyphens and numbers

Submitting the text shown in Figure 2 runs both the query and the text through the “text” Field. The text Field in the Solr schema in the example is configured as in the Solr Tutorial:

<fieldType name="text" class="solr.TextField" positionIncrementGap="100">
  <analyzer type="index">
    <tokenizer class="solr.WhitespaceTokenizerFactory"/>
    <filter class="solr.StopFilterFactory" ignoreCase="true"
            words="stopwords.txt" enablePositionIncrements="true"/>
    <filter class="solr.WordDelimiterFilterFactory" generateWordParts="1"
            generateNumberParts="1" catenateWords="1" catenateNumbers="1"
            catenateAll="0" splitOnCaseChange="1"/>
    <filter class="solr.LowerCaseFilterFactory"/>
    <filter class="solr.EnglishPorterFilterFactory" protected="protwords.txt"/>
    <filter class="solr.RemoveDuplicatesTokenFilterFactory"/>
  </analyzer>
  <analyzer type="query">
    <tokenizer class="solr.WhitespaceTokenizerFactory"/>
    <filter class="solr.SynonymFilterFactory" synonyms="synonyms.txt"
            ignoreCase="true" expand="true"/>
    <filter class="solr.StopFilterFactory" ignoreCase="true"
            words="stopwords.txt" enablePositionIncrements="true"/>
    <filter class="solr.WordDelimiterFilterFactory" generateWordParts="1"
            generateNumberParts="1" catenateWords="0" catenateNumbers="0"
            catenateAll="0" splitOnCaseChange="1"/>
    <filter class="solr.LowerCaseFilterFactory"/>
    <filter class="solr.EnglishPorterFilterFactory" protected="protwords.txt"/>
    <filter class="solr.RemoveDuplicatesTokenFilterFactory"/>
  </analyzer>
</fieldType>

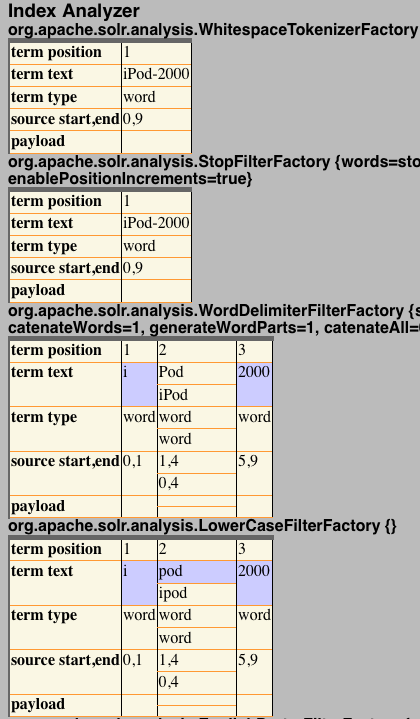

The partial results of running this can be seen in Figure 3.

Figure 3. Results of Sample Analysis

The partial results of submitting an example query and document to Solr’s analysis tool.

In the results figure, notice the purple highlighted areas. These areas signify where the query terms and the document terms align, thus producing an analysis match. Thus, even though I entered two similar, but different, terms, I was able to get a match.
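To build some intuition for why two different surface forms can end up matching, here is a deliberately simplified Java sketch of the kind of splitting described above. It is not Solr’s WordDelimiterFilter and ignores most of its options: it only splits on non-alphanumeric characters, lower-to-upper case changes and letter/digit transitions, lowercases the parts, and optionally appends all parts joined together (closer in spirit to catenateAll than to the catenateWords and catenateNumbers settings in the schema). The class name and the sample words are made up for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** A simplified approximation of intra-word splitting; not Solr's actual implementation. */
final class WordDelimiterSketch {

    /** Splits one whitespace-delimited word into lowercase parts, optionally adding the joined form. */
    static List<String> split(String word, boolean catenate) {
        List<String> parts = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (int i = 0; i < word.length(); i++) {
            char c = word.charAt(i);
            if (!Character.isLetterOrDigit(c)) {
                flush(current, parts); // a delimiter such as '-' ends the current part
                continue;
            }
            if (current.length() > 0) {
                char prev = current.charAt(current.length() - 1);
                boolean caseChange = Character.isLowerCase(prev) && Character.isUpperCase(c);
                boolean alphaNumChange = Character.isLetter(prev) != Character.isLetter(c);
                if (caseChange || alphaNumChange) {
                    flush(current, parts);
                }
            }
            current.append(c);
        }
        flush(current, parts);
        if (catenate && parts.size() > 1) {
            parts.add(String.join("", parts)); // e.g. "wi" + "fi" also yields "wifi"
        }
        return parts;
    }

    private static void flush(StringBuilder current, List<String> parts) {
        if (current.length() > 0) {
            parts.add(current.toString().toLowerCase(Locale.ROOT));
            current.setLength(0);
        }
    }

    public static void main(String[] args) {
        System.out.println(split("Wi-Fi", true));   // index-time style, with the joined form
        System.out.println(split("wifi", false));   // query-time style, no joined form
        System.out.println(split("PowerShot-SD500", false));
    }
}
```

With the joined form added on the document side, a document containing “Wi-Fi” and a query for “wifi” share a token, which is the same kind of alignment the highlighted cells in the analysis tool are showing.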

Doing this kind of analysis can be tedious if you have a lot of documents, but it is quite useful in well focused cases. If you suspect you have an analysis problem, then spend the time here, otherwise move on and try some of the other ideas and tools.

Using Luke for Debugging

Luke, a tool written by Lucid advisor Andrzej Bialecki, is a general purpose debugging tool for Lucene indexes that allows you to easily inspect the terms and documents in an index, as well as run sample queries and examine other Lucene parts.

Oftentimes, especially when dealing with documents in other languages, a quick look at Luke will show that documents were not indexed correctly or that documents can be found by altering the input query or trying a different Analyzer.

Luke’s summary information is also quite helpful. It will let you know if you have the expected number of documents in your index as well as how many unique terms were indexed. If either of these is off, then consider double checking your indexing process.

Dealing with Precision/Recall Conflicts

As you work through relevance issues and check your improvements by retrying your empirical tests (TREC or your own), you will likely run into a few problems. First, changes designed to improve precision, like using the AND operator more, will often lead to a drop in recall. Likewise, changes to improve recall will often cause a drop in precision. In these cases, try to identify specific queries that were changed for better or worse (trec_eval can print out individual query performance) and see if there is any obvious compromise to be had. In the end, in most situations, precision is more important than recall, since users seldom go beyond the first or second page of results. However, go back to your system’s principal relevance goals to determine the tradeoff to choose.
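If you keep a per-query metric for each test run, finding the queries that moved is a simple comparison. The Java sketch below assumes you already have a map from query to a score such as precision at ten for a baseline run and for an experiment; the class name and the numbers are made up for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

/** Compares a per-query metric between two test runs. */
final class RunComparison {

    /** Change in the metric (experiment minus baseline) for every query present in both runs. */
    static Map<String, Double> deltas(Map<String, Double> baseline, Map<String, Double> experiment) {
        Map<String, Double> out = new TreeMap<>(); // sorted by query for stable reports
        for (Map.Entry<String, Double> e : baseline.entrySet()) {
            Double after = experiment.get(e.getKey());
            if (after != null) { // skip queries that were not run in both experiments
                out.put(e.getKey(), after - e.getValue());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical precision-at-ten values, for illustration only.
        Map<String, Double> baseline = new LinkedHashMap<>();
        baseline.put("ipod", 0.5);
        baseline.put("memory", 0.8);
        Map<String, Double> experiment = new LinkedHashMap<>();
        experiment.put("ipod", 0.7);
        experiment.put("memory", 0.6);

        for (Map.Entry<String, Double> d : deltas(baseline, experiment).entrySet()) {
            String verdict = d.getValue() < 0 ? "worse" : (d.getValue() > 0 ? "better" : "same");
            System.out.println(d.getKey() + ": " + verdict);
        }
    }
}
```

Looking at the per-query changes rather than only the average keeps a large gain on one query from hiding regressions on several others.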

Conclusions

While there are a large number of things that can go wrong with relevance, most are fairly easily identifiable. In fact, many are directly related to bad data going in, or not searching the proper Field in the proper way (or not searching the Field at all). Most of these issues will be quickly addressed by challenging the assumption that everything is set up correctly and actually doing the work to verify that it is.

Additionally, be sure to take a systematic approach to testing and debugging. Particularly when batch testing a large number of queries, experiments tend to run together. Take good notes on each experiment and resist the temptation to cut and paste names and descriptions between runs. If possible, use a database or a version control system to help track experiments over time.

Finally, stay focused on relevance as a macro problem and not a micro problem and a better experience for all involved should ensue.

Resources

Use the following resources to learn more about relevance testing and other related topics:

- Try the Solr Tutorial.

- Learn more about Lucid’s training offerings

- Download Luke for debugging Lucene.

- Learn more about the theory of Relevance.

- Learn more about Lucene performance, including quality in my ApacheCon Europe 2007 talk.

- Use the TREC Eval program to help assess precision and recall in TREC-style experiments.

- Read full attribution for Thomas Edison’s genius quotation.

- Track all of the latest research in relevance and information retrieval at SIGIR