Coming Soon to Solr: Efficient Cursor Based Iteration

(UPDATE: 2013-12-18 I’ve posted some new graphs below based on the revised, non-strawman, patch)

One thing Solr has never been very efficient at is a problem that people refer to as “Deep Paging”. Simply put: asking Solr for “Page #1” of a search result is very efficient, so is asking for Page #2, and Page #3, etc… for small page numbers; but as the page numbers get bigger and bigger, Solr has to work harder (and use more RAM) in order to know what results to give you.

I’ve been working on a solution to this problem, but before talking about that, let’s review….

Why is deep paging hard?

The key to understanding why so much RAM and time is required for “Deep Paging” is to remember that as far as client requests go, Solr is basically stateless. The way a client asks for “pages” of search results is by telling Solr how many results they want to get on a page (using the rows parameter) and what position in the overall sorted list of documents the client wants the current page to start at (using the start parameter).

So for a client that wants 50 results per page, page #1 is requested using start=0&rows=50. Page #2 is start=50&rows=50, page #3 is start=100&rows=50, etc…. But in order for Solr to know which 50 docs to return starting at an arbitrary point N, it needs to build up an internal queue of the first N+50 sorted documents matching the query, so that it can then throw away the first N docs, and return the remaining 50. This means the amount of memory needed to return paginated results grows linearly with the increase in the start param. In the case of SolrCloud, the problem gets even worse, because the N+50 top documents need to be collected on every shard, and the sort values from every shard need to be streamed over the network to the coordination node (the one that received the initial request from the end client) to merge them.

For typical applications displaying search results to a human user, this tends to not be much of an issue since most users don’t care about drilling down past the first handful of pages of search results — but for automated systems that want to crunch data about all of the documents matching a query, it can be seriously prohibitive.

Is There A Workaround?

One workaround to make fetching full result lists feasible that has been available to Solr clients for quite a while involves a couple of caveats:

- results must be sorted deterministically by document fields — no sorting by

score- Clients can still request

scorein theflthey just can’t sort on score

- Clients can still request

- sort must include at least one field that is unique per document – typically just the uniqueKey field

- All fields being sorted on must be stored and included in the

fl

With these constraints, clients can then request “Deep Pages” of results by building up an fq (filter query) that uses the sort field values from the last document retrieved in range queries. As a trivial example, consider the following query params:

q=foo&fl=id,name,score&sort=id asc&start=0&rows=500

Assuming id is unique for every document, then if the last document returned by that query has an id of AAA123 the next “page” or results could be fetched by modifying the query to include an fq on the id field:

q=foo&fl=id,name,score&sort=id asc&fq=id:{AAA123 TO *]&start=0&rows=500

This same process can be used with more sorts on multiple fields, but the fq that must be specified gets significantly more complicated. If sorting on price desc, id asc for example, the filter would need to be something like:

fq=(price:[* TO $LAST_DOC_PRICE} (+price:$LAST_DOC_PRICE +id:{$LAST_DOC_ID TO *]))

While feasible for a lot of applications, this is fairly complicated for clients to get right, and it isn’t much use at all for situations like “I want to do some processing on the top 20% of results sorted by score” because it would require the client to fetch all 100% of the results (sorted by something like the uniqueKey), and then doing the sort by score on the client to find the highest scoring 20%.

Let’s Build A Simpler API

Starting with Lucene 4.0, a new method was introduced to Lucene’s IndexSearcher class that implements the same type of filtering logic described above at a much lower level. The method caller only has to keep track of the information in the last FieldDoc returned by a previous search, which already encapsulates all of the Sort values for that document — including score (even if the sort fields are not stored).

In SOLR-5463 I’ve started the process of leveraging this type of logic in Solr. All of the patches I’ve posted so far contain a straw man implementation that tries to modify the existing search logic in Solr as little as possible, and as such doesn’t take advantage of any of the existing lower level searchAfter code (yet). The goal of this straw man implementation is to help build up a good test suite of the new functionality, and to solve some of the questions of what the client API should look like.

A notable question about how the Solr client API should work is how the “last FieldDoc” concept from the low-level Lucene API should map into something Solr clients can deal with easily. In a Java application that uses Lucene’s IndexSearcher methods directly, hanging on to a FieldDoc object from a previous search to re-use in a subsequent method call is no problem. In Solr however, we want to be able to provide an API that allows Solr to remain stateless to the sequence of requests — in a Replicated or SolrCloud system the Solr server receiving the “page #N” request might be completely different from the server that processed “page #N-1”. The solution developed so far is to have Solr encode the sort values from the “last” document returned into a single Base64 “cursor totem” (we’re still working on a better name) String that is returned to the client. Clients can then pass the totem string back to Solr to fetch the next page of results, at which point they’ll get a new totem string for the next request, etc….

In essence, the classic method of paging through results can be thought of as:

while (still want more results) {

new_page = get(params)

add new_page to cumulative_results

params[start] += params[rows]

}

While the new cursor based approach is:

while (still want more results) {

new_page = get(params)

add new_page to cumulative_results

params[totem] = new_page[next totem]

}

Does It Work?

Test results and experiments so far have demonstrated that this new approach should definitely work well. Even though the straw man implementation is extremely inefficient in several ways (short cuts taken during development since I expect to throw the code out) it’s still much faster and uses much less RAM then normal pagination.

As a quick demonstration of the performance improvements, I ran some simple tests on my laptop using some synthetic indexes I built up containing one million random documents and some test scripts to walk the full result sets of a few queries that matched a large number of documents. I’ve included the full details of the methodology, including all scripts, on github, but the basic process in all of these tests was:

- Build the index against the example configs

- Shutdown Solr

- For each of my test scripts:

classic_deep_paging.plandcursor_deep_paging.pl:- Start up Solr, wait for firstSearcher to warm up

- Run the script 3 times: Each run producing 2 files: time per page request, and the docs+score returned.

- Shutdown Solr

- Diff the docs returned by all 6 runs to ensure correctness

- Merge the 3 time result files for each script to compute the mean and stddev

- Graph the mean and stddev for each script

Before looking at the results, I’d like to remind everyone of a few important caveats:

- I ran these tests on my laptop, while doing many other tasks

- There was only the one client providing query load to Solr during the test – In real world usage with many concurrent clients, classic “deep paging” would usually cause JVM to run out of memory, but with the straw man approach the memory usage should be the same as a normal

start=0Page #1 request - The data in these tests was random and very synthetic, it doesn’t represent any sort of typical term distribution that would affect scores, or typical sort value distribution which might affect general sort performance in a real world application.

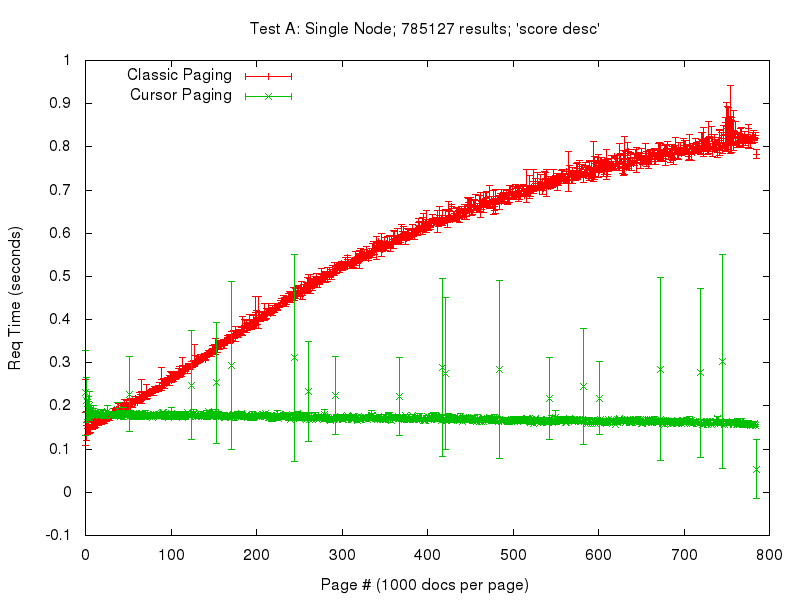

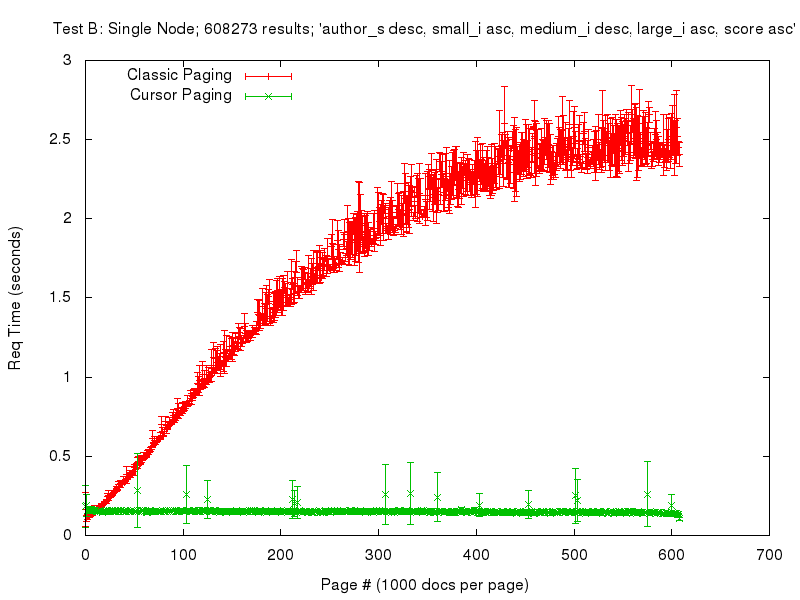

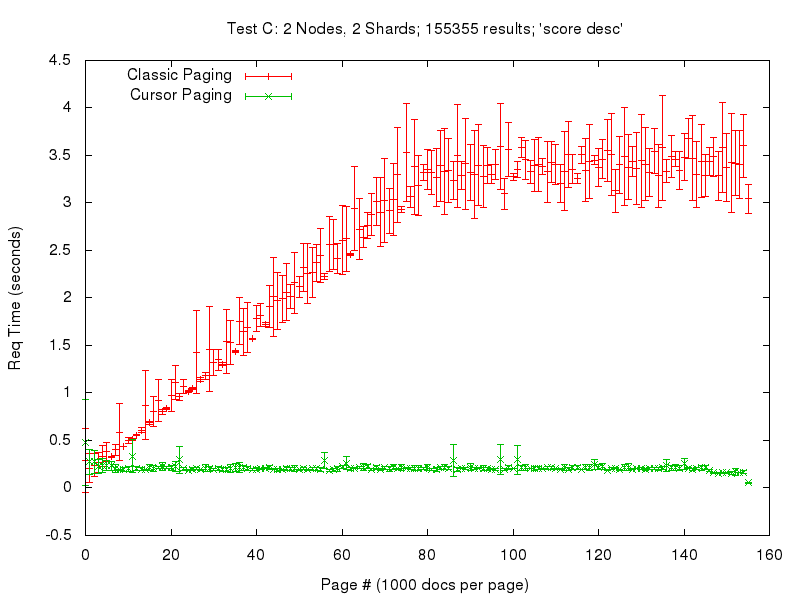

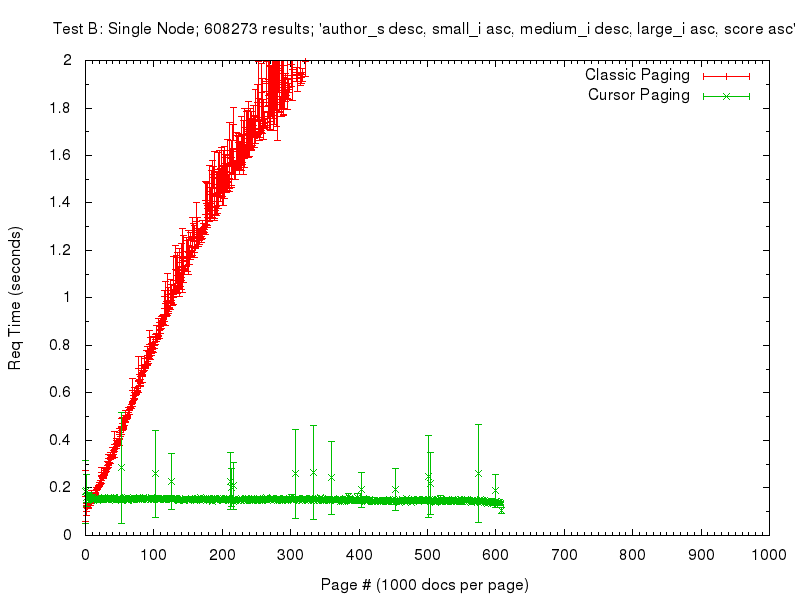

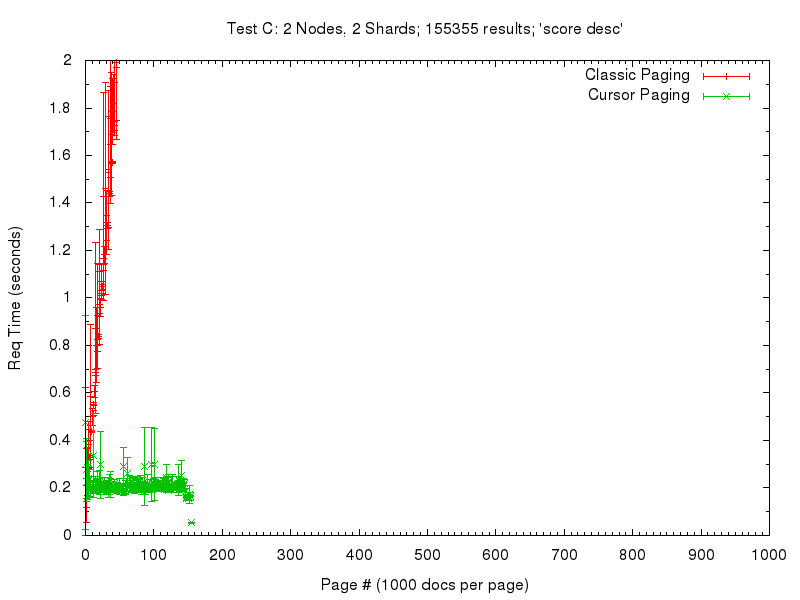

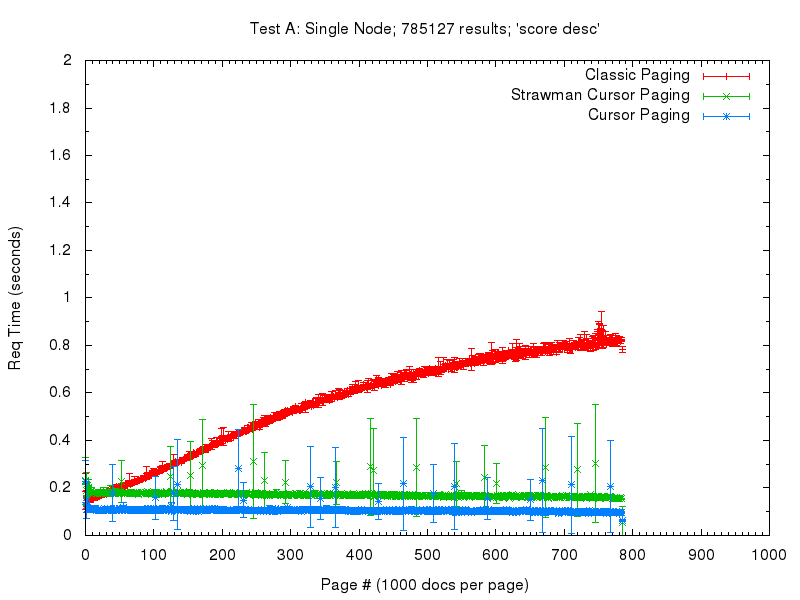

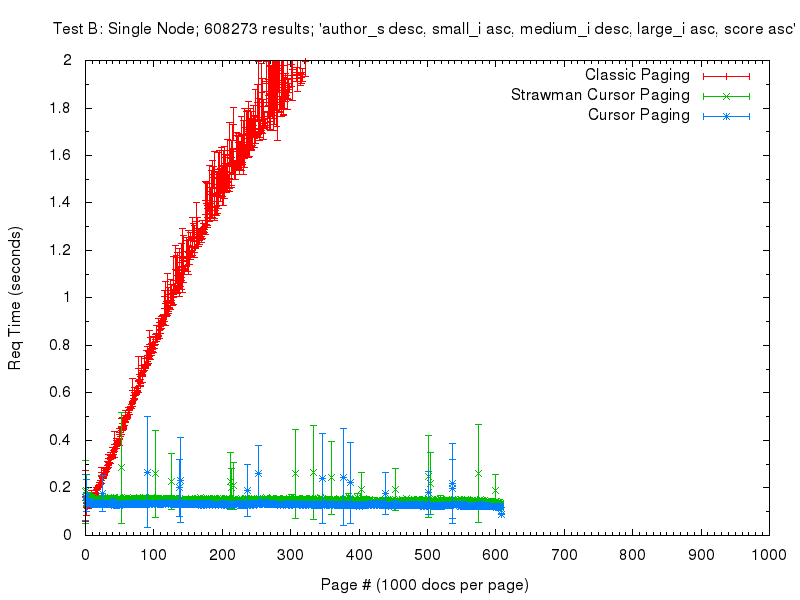

Even with those factors in mind, we can see some pretty obvious patterns in the results below. Please note that the scales on these first three graphs are not the same….

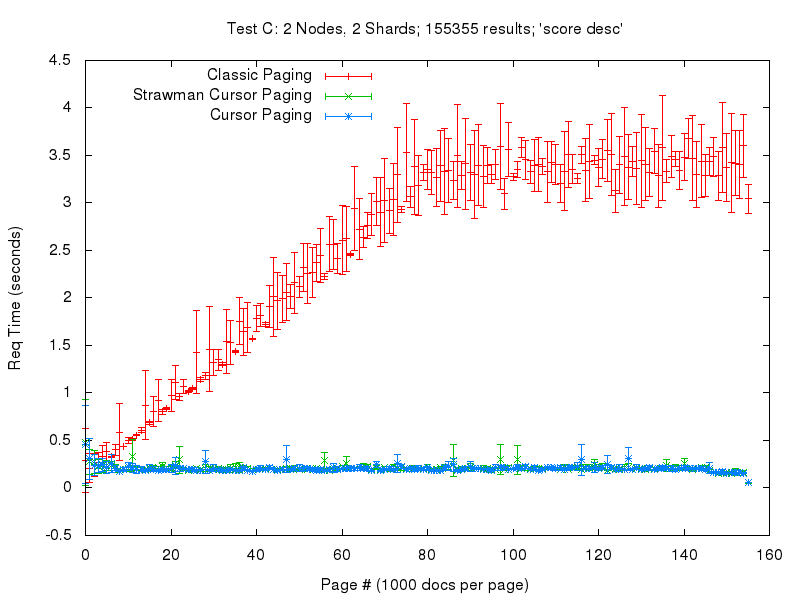

Not only is the linear increase in classic pagination response time evident, but you can see that the slope of that linear growth is heavily influenced based on the complexity of the sort. I can’t explain why this growth seems to level off — but at the point where it does level off the response times are already too extreme to be useful. In the case of a simple 2 shard SolrCloud query (Test C) even a basic sort by score has response times that spike above 2 seconds once we page up to ~40K documents. (That’s why I picked a query for Test C that only matched ~150K total documents – I didn’t want to spend all day just running the SolrCloud deep paging test.)

By comparison, the new cursor based paging approach starts out slightly slower in the response time per page, but stays relatively constant. The fact that it is slower then classic paging for low page numbers is almost certainly an artifact of the straw man implementation — because of some laziness in my patch, it currently sends at least twice as much data to the client application as it needs to. The large amount of variance in the cursor based response times is admittedly surprising to me. I suspect that may also be an artifact of the straw man code, and will certainly be something to look for once the patch is finalized.

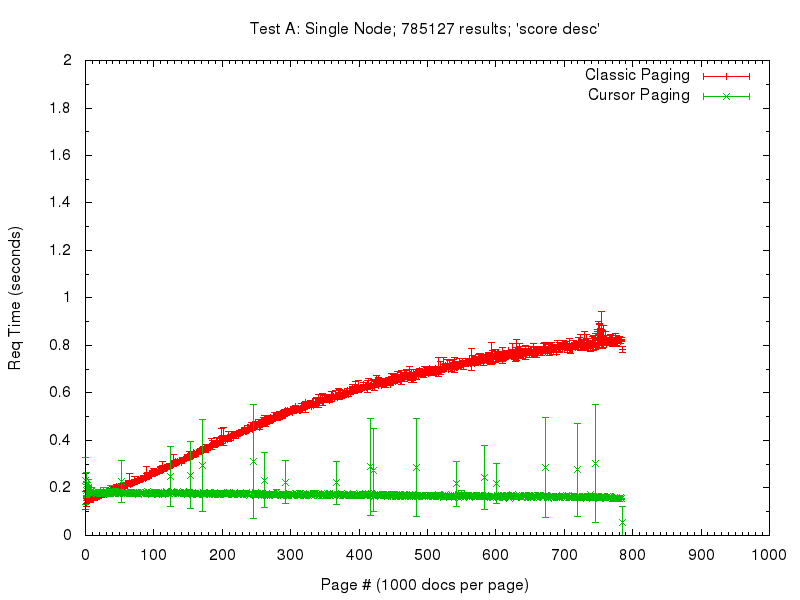

In order to make “side by side” comparisons of each test easier, I also plotted the same data again using fixed scales on both the X & Y axis in all three graphs. This makes it trivial to see how much the response time of classic deep paging can change as the sorting gets more complicated — but in the case of the new cursor logic, the response time stays consistent….

I think the evidence is pretty clear that we’re on the right track towards a much better API for clients that want to fetch large sets of results.

Update: Non-Strawman Performance

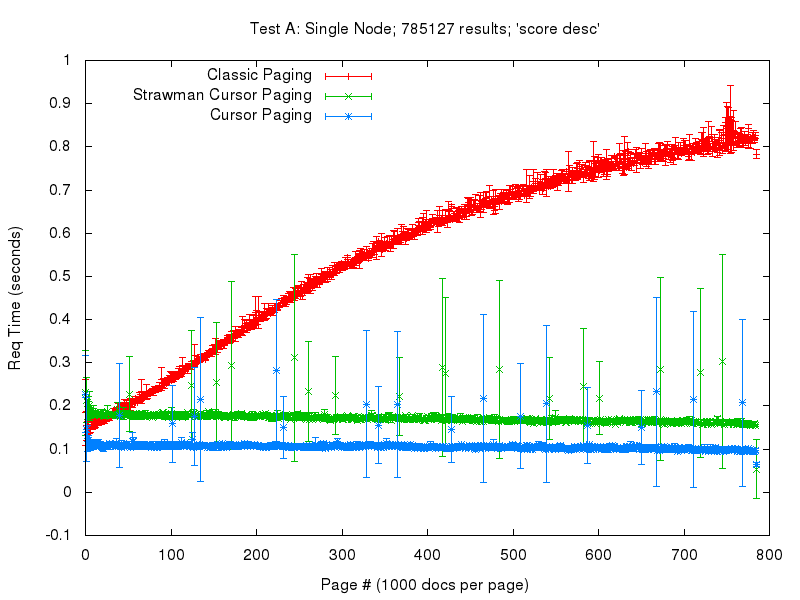

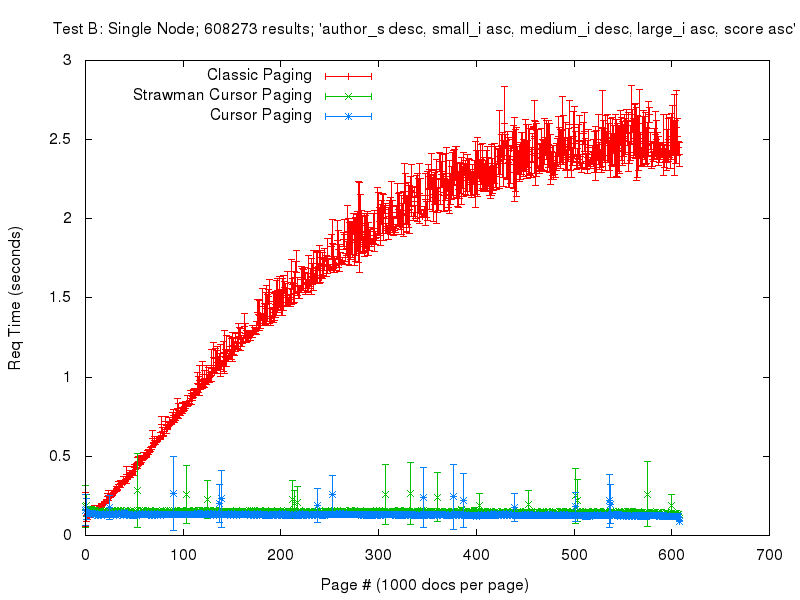

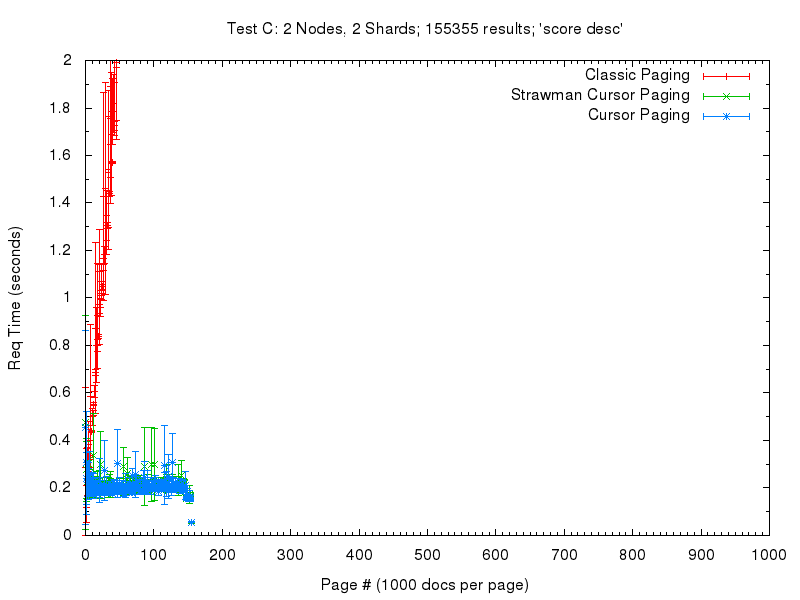

Progress on SOLR-5463 has progressed and we now have a non-strawman implementation that avoids some of the redundancies I mentioned above, so I wanted to post some additional graphs to show the comparison.

In these graphs below, the red & green lines are the same, with green being the previous Strawman based cursor code — while the new blue lines show the more final solution that will likely be committed soon.

Likewise, here are the same graphs with fixed axis for easier visual comparison with each other.

As you can see, the new implementation is a big performance improvement over the already fast strawman implementation, and doesn’t lose out to classical paging even for low page numbers.

Appendix: Code & Methodology

All of the scripts used to run these tests can be found on github, including the notes I took as I went along, showing all of the commands executed.

LEARN MORE

Contact us today to learn how Lucidworks can help your team create powerful search and discovery applications for your customers and employees.