Fusion Working for You: A Custom Sitemap Crawler JavaScript Stage

One of the most powerful features of Fusion is the built-in JavaScript stage. However, you shouldn’t think of this stage as merely a JavaScript stage. Fusion uses the Nashorn JavaScript engine, which means you have access to all the Java class libraries used in the application. In practice, you can script your own customized Java and/or JavaScript processing stages and make the Indexing Pipeline work the way you want it to.

So first off, we need to get our feet wet with Nashorn JavaScript. Nashorn (German for Rhinoceros) was open-sourced around the end of 2012 and later shipped with Java 8. While much faster than its predecessor, Rhino, it also incorporated one long-desired feature: the combination of Java and JavaScript. Though two entirely separate languages, these two have, as the saying goes, “had a date with destiny” for a long time.

Hello World

To start with, let us take a look at the most fundamental function in a Fusion JavaScript Stage:

    function (doc) {
        logger.info("Doc ID: " + doc.getId());
        return doc;
    }

At the heart of things, this is the most basic function. Note that you will pass in a ‘doc’ argument, which will always be a PipelineDocument, and you will return that document (or, as an alternative, an array of documents, but that’s another story we’ll cover in a separate article). The ‘id’ of this document will be the URL being crawled; and, thanks to the Tika Parser, the ‘body’ attribute will be the raw XML of our sitemap.
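To see the contract described above without starting Fusion, here is a minimal sketch that calls the same kind of function against a stub. The `logger` object and the `makeStubDoc` helper are hypothetical stand-ins for what Fusion supplies at runtime, and the stub exposes only the `getId()` accessor used in the example.

```javascript
// Hypothetical stand-in for the logger Fusion injects into the stage.
var logged = [];
var logger = {
    info: function (msg) { logged.push(String(msg)); }
};

// Hypothetical stub: only the getId() accessor of a PipelineDocument is imitated.
function makeStubDoc(id) {
    return {
        getId: function () { return id; }
    };
}

// Same shape as the stage function above, named so it can be called directly.
function helloStage(doc) {
    logger.info("Doc ID: " + doc.getId());
    return doc;
}

var input = makeStubDoc("http://example.com/sitemap.xml");
var output = helloStage(input);
```

The stage hands back the same document it received, so `output` and `input` refer to the same object, and the only side effect is the logged id.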

To that end, the first thing you’ll want to do is open your Pipeline Editor and select the Apache Tika Parser. Make sure the “Return parsed content as XML or HTML” and “Return original XML and HTML instead of Tika XML output” checkboxes are checked. The Tika Parser in this case is only used to pull in the raw XML of the sitemap you want to crawl. Most of the remaining processing is handled in our custom JavaScript stage.

Now let’s add the stage to our Indexing Pipeline. Click “Add Stage” and select the “JavaScript” stage from the menu.

Our function will operate in two phases. The first phase will pull the raw XML from the document, use Jsoup to parse it, and create a java.util.ArrayList of URLs to be crawled. The second phase will use Jsoup to fetch each URL, extract text from the various elements, and set that text in the PipelineDocument. Our function will return the ArrayList of parsed documents and send them to the Solr Indexing Stage.
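Before looking at the real stage, the two phases can be sketched in plain JavaScript. This is only an illustration of the data flow, under loud assumptions: a regular expression stands in for Jsoup's XML parsing, plain objects stand in for PipelineDocuments, and `extractLocUrls`, `buildDocs`, `fetchBody` and the sample sitemap are all hypothetical names and data.

```javascript
// Phase 1 (stand-in): collect the text of every <loc> element.
// A regex is used only so the sketch runs without Jsoup on the classpath.
function extractLocUrls(xml) {
    var urls = [];
    var pattern = /<loc>\s*([^<]*?)\s*<\/loc>/g;
    var match;
    while ((match = pattern.exec(xml)) !== null) {
        if (match[1] !== "") {
            urls.push(match[1]); // skip empty <loc> elements
        }
    }
    return urls;
}

// Phase 2 (stand-in): build one plain object per URL.
// fetchBody is a hypothetical callback standing in for the fetch-and-extract step.
function buildDocs(urls, fetchBody) {
    return urls.map(function (url) {
        return { id: url, fields: { body: fetchBody(url) } };
    });
}

var sampleSitemap =
    '<?xml version="1.0" encoding="UTF-8"?>' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">' +
    '<url><loc>http://example.com/a.html</loc></url>' +
    '<url><loc> http://example.com/b.html </loc></url>' +
    '<url><loc></loc></url>' +
    '</urlset>';

var urls = extractLocUrls(sampleSitemap);
var docs = buildDocs(urls, function (url) { return "text of " + url; });
```

Keeping the phases as separate functions makes it easy to check the URL list on its own before any page is fetched.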

So now that we’ve defined our processes, let’s show the work:

Parsing the XML, Parsing the HTML

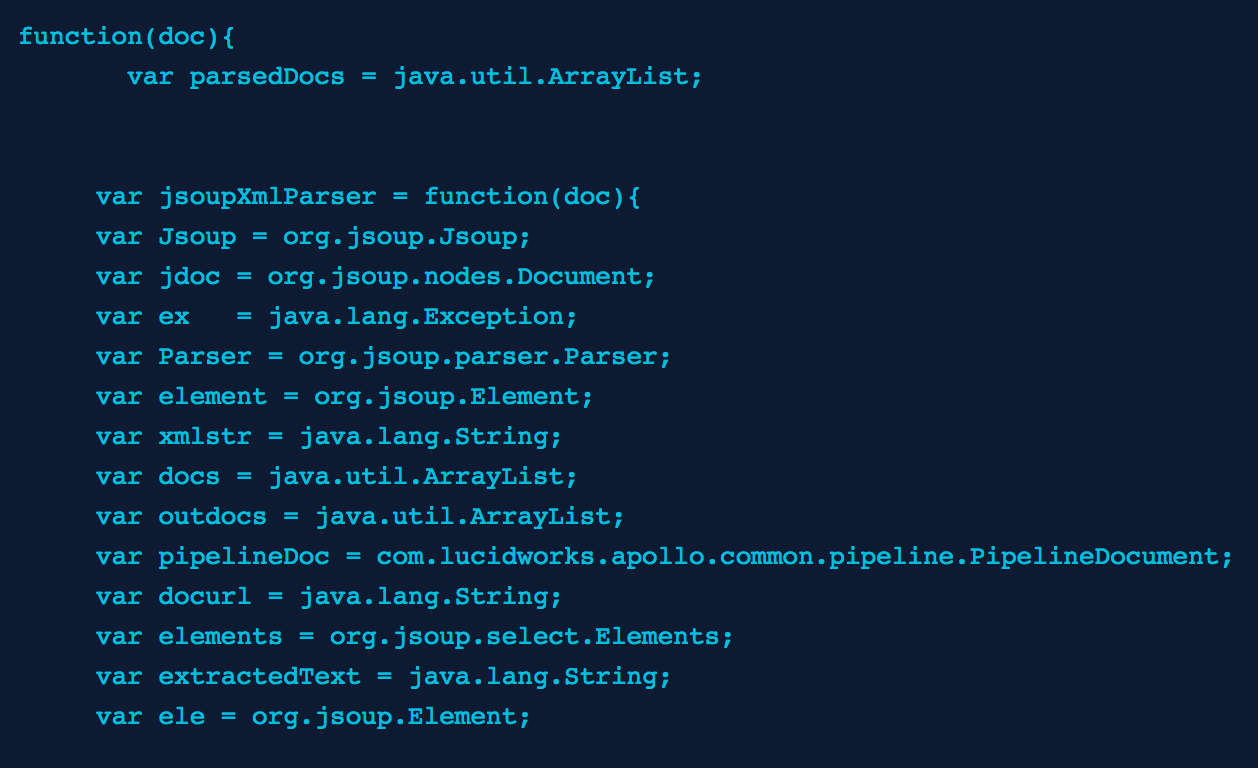

    function (doc) {
        // Java classes, reached through Nashorn's package-style access.
        var Jsoup = org.jsoup.Jsoup;
        var Parser = org.jsoup.parser.Parser;
        var PipelineDocument = com.lucidworks.apollo.common.pipeline.PipelineDocument;

        var doclist = new java.util.ArrayList(); // one document per URL found in the XML
        var outdocs = new java.util.ArrayList(); // populated documents returned to the pipeline
        var elementsToExtract = ["p", "span", "div", "a"];
        var targetElement = "loc";
        var xmlstr, jdoc, docurl, element, ele, elements, pipelineDoc, extractedText;

        // Phase 1: parse the raw XML and create a PipelineDocument for each URL.
        try {
            xmlstr = doc.getFirstFieldValue("body");
            jdoc = Jsoup.parse(xmlstr, "", Parser.xmlParser());
            for each (element in jdoc.select(targetElement)) {
                docurl = element.ownText();
                if (docurl !== null && docurl !== "") {
                    logger.info("Parsed URL: " + docurl);
                    pipelineDoc = new PipelineDocument(docurl);
                    doclist.add(pipelineDoc);
                }
            }
        } catch (ex) {
            logger.error("Could not parse the XML: " + ex);
        }

        // Phase 2: fetch each URL and copy its text into the matching document.
        for each (pipelineDoc in doclist) {
            // The try sits inside the loop so one failed fetch does not skip the remaining URLs.
            try {
                docurl = pipelineDoc.getId();
                jdoc = Jsoup.connect(docurl).get();
                if (jdoc !== null) {
                    logger.info("FOUND a JSoup document for url " + docurl);
                    extractedText = "";
                    // get the title
                    ele = jdoc.select("title").first();
                    if (ele !== null) {
                        pipelineDoc.addField("title", ele.ownText());
                    }
                    // get the meta tags; their values live in the content attribute
                    ele = jdoc.select("meta[name=keywords]").first();
                    if (ele !== null) {
                        pipelineDoc.addField("meta.keywords", ele.attr("content"));
                    }
                    ele = jdoc.select("meta[name=description]").first();
                    if (ele !== null) {
                        pipelineDoc.addField("meta.description", ele.attr("content"));
                    }
                    for each (var val in elementsToExtract) {
                        elements = jdoc.select(val);
                        logger.info("ITERATE OVER ELEMENTS");
                        // then parse elements and pull just the text
                        for each (ele in elements) {
                            if (ele !== null && ele.ownText() !== null) {
                                extractedText += " " + ele.ownText();
                            }
                        }
                    }
                    pipelineDoc.addField("body", extractedText);
                    logger.info("Extracted: " + extractedText);
                    outdocs.add(pipelineDoc);
                } else {
                    logger.warn("Jsoup Document was NULL **** ");
                }
            } catch (ex) {
                logger.error("Could not process " + docurl + ": " + ex);
            }
        }

        return outdocs;
    }

In the function above, the first step is to parse the raw XML into a Jsoup Document. From there, we iterate over the elements found in the document (jdoc.select("loc")) and create a PipelineDocument for each URL, using the URL as the id. Once we have an ArrayList of PipelineDocuments, we pass it to a second bit of script that loops through the list, fetches each page, and again uses the Jsoup selector syntax to extract the text from the elements, e.g. jdoc.select("p"). In this example, I create a simple JavaScript Array of 4 elements (p, span, div, a) and compile the extracted text. I also extract the title of the document and the meta keywords/description.

Once we’ve extracted the text, we set whatever fields are relevant to our collection. For this example I created 4 fields: title, body, meta.keywords and meta.description. Note that the function does not save the raw page content. You want to avoid putting raw text into your collection unless you have a specific need to do so. It’s best to extract the critical data/metadata and discard the raw text.
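One way to keep empty values out of the collection is to guard each field before it is set. The following is a minimal sketch, not part of the original stage: `makeRecordingDoc` is a hypothetical stub that only records `addField()` calls, and `addIfPresent` is a hypothetical helper name.

```javascript
// Hypothetical stub that records addField() calls in a plain object.
// Unlike a real PipelineDocument, it keeps only the last value per field name.
function makeRecordingDoc(id) {
    var fields = {};
    return {
        getId: function () { return id; },
        addField: function (name, value) { fields[name] = value; },
        fields: fields
    };
}

// Add a field only when the extracted value contains something other than whitespace.
function addIfPresent(doc, name, value) {
    if (value === null || value === undefined) {
        return false;
    }
    var text = String(value).trim();
    if (text === "") {
        return false;
    }
    doc.addField(name, text);
    return true;
}

var page = makeRecordingDoc("http://example.com/a.html");
addIfPresent(page, "title", "  Example page ");
addIfPresent(page, "meta.keywords", "fusion, sitemap");
addIfPresent(page, "meta.description", "   "); // whitespace only, so it is skipped
addIfPresent(page, "body", "First paragraph. Second paragraph.");
```

In this sketch the whitespace-only description never reaches the document, so the page ends up with three of the four fields.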

Now that we’ve populated our PipelineDocuments, the function returns the ‘outdocs’ ArrayList to the Pipeline process, and the documents are then persisted by the Solr Indexer Stage.

And that’s really all there is to it. This implementation has been tested on Fusion 2.4.2.
