The Seven Deadly Sins of Solr

Working at Lucidworks gives me the opportunity to analyze and evaluate a great many Solr implementations, running in some of the largest Fortune 500 companies as well as some of the smallest start-ups. This experience has enabled me to identify many common mistakes and pitfalls, some made when starting out with a new Solr implementation and others that come from not keeping up with the latest improvements and changes. Thanks to my colleague Simon Rosenthal for suggesting the title, and to Simon, Lance Norskog, and Tom Hill for helpful input and suggestions. So, without further ado…the Seven Deadly Sins of Solr.

Sin number 1: Sloth

Let’s define sloth as laziness or indifference. This one bites most of us at some time or another. We just can’t resist the impulse to take a shortcut, or we simply refuse to acknowledge the amount of effort required to do a task properly. Ultimately we wind up paying the price, usually with interest. Here are some common examples of how laziness or indifference leads to Solr problems.

- A general lack of commitment either to Solr or to the search application project itself. Sometimes you see this when a company has decided to switch from a commercial search application to open-source alternatives like Lucene and Solr. The engineers involved in the project are used to the “old ways” and really don’t feel like mastering another search technology. So, without making even the slightest effort, they will claim that Solr is inefficient, difficult to learn, not worth the effort, etc. If you’re hungry, it’s usually not productive to stand around waiting for a fried chicken to fly into your mouth – your time might be better spent being a little more active in your efforts to acquire some food. Open-source software is flexible, adaptable, and powerful, but the developers who become experts in open-source solutions are those who are not afraid to roll up their sleeves and dive in to learn what they need. Participate in the mailing lists. Open the source code. Read the javadocs and the Reference Guide. I’ve worked with customers who have embraced Solr and become experts in a fairly short time, even contributing patches within a few weeks of starting their project. On the other hand, I’ve seen problems fester and grow because a team just won’t put any effort into their Solr implementation. “There are none so blind as those who will not see.”

- Not reviewing, editing, or changing the default schema.xml and/or solrconfig.xml files. If I had a dollar for every production Solr instance that was statically warming the query “solr rocks”, I could afford a year’s worth of support from a commercial search vendor. The default configuration files are there to be used as, yes, examples and starting points. Take the time to learn about the configuration settings and field types, and make the best use of them. Remove anything that is not being used (how many times have you really queried that “partitioned” request handler?). Keep your configuration files lean, mean, and maintainable, and it will pay off in the long run.

- Ignoring the dismax query parser. I’ve seen cases where someone has written a custom query parser on their own when the work they needed to do could easily have been done with the dismax query parser. There are two different extremes to why folks sometimes avoid dismax. On one side there is the feeling that it is a “dumbed down” parser. I think part of the problem here is caused by the first line of the DismaxRequestHandler documentation (and, by the way, we still suffer from this unfortunate legacy nomenclature: it is a query parser, not a request handler), which says that dismax is “designed to process simple user entered phrases”. There is sometimes a feeling that it is merely an entry-level tool for those who don’t want to do any work crafting their queries. Au contraire! Dismax has an enormous amount of power and flexibility. This leads to the second side of “dismax avoidance”, namely that it’s “too complicated”. Indeed, it is somewhat complicated. But the rewards of spending some time getting familiar with it are substantial.

- Not enough attention to JVM settings and garbage collection. One needn’t become a JVM Jedi to run a well-tuned Solr instance, but spending some time learning the basics of the different garbage collector types, and monitoring the JVM with tools such as JConsole, will pay dividends. A good starting place is a blog by my colleague Mark Miller of Lucidworks. Another good resource is this document put out by Sun.
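To make the default-configuration bullet above concrete, here is a minimal Python sketch (standard library only) that flags two leftovers it mentions: the example “solr rocks” warming query and request handlers your application never calls. The sample config fragment, the EXAMPLE_WARMING_QUERIES set, and the used_handlers input are illustrative assumptions; in practice you would load your real solrconfig.xml and derive the list of handlers you actually use from your own request logs.

```python
import xml.etree.ElementTree as ET

# Hypothetical solrconfig.xml fragment modeled on the old example config.
SAMPLE_CONFIG = """
<config>
  <query>
    <listener event="firstSearcher" class="solr.QuerySenderListener">
      <arr name="queries">
        <lst><str name="q">solr rocks</str><str name="rows">10</str></lst>
      </arr>
    </listener>
  </query>
  <requestHandler name="standard" class="solr.SearchHandler" default="true"/>
  <requestHandler name="partitioned" class="solr.SearchHandler"/>
</config>
"""

# Placeholder queries shipped with example configs (assumed list; extend as needed).
EXAMPLE_WARMING_QUERIES = {"solr rocks", "solr", "rocks"}


def find_example_leftovers(config_xml, used_handlers):
    """Return human-readable findings about example-config leftovers."""
    root = ET.fromstring(config_xml)
    findings = []
    # Static warming queries live in <str name="q"> elements inside listener blocks.
    for listener in root.iter("listener"):
        event = listener.get("event")
        for param in listener.iter("str"):
            if param.get("name") == "q" and param.text in EXAMPLE_WARMING_QUERIES:
                findings.append(f"{event} listener warms example query {param.text!r}")
    # Any handler the application never calls is a candidate for removal.
    for handler in root.iter("requestHandler"):
        name = handler.get("name")
        if name not in used_handlers:
            findings.append(f"request handler {name!r} is never queried")
    return findings


for finding in find_example_leftovers(SAMPLE_CONFIG, used_handlers={"standard"}):
    print(finding)
```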
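As a sketch of what the dismax query parser offers beyond “simple user entered phrases” (see the dismax bullet above), the following builds a request that searches several weighted fields, boosts phrase matches, and sets a minimum-should-match rule. The endpoint URL and the field names (title, body, category) are hypothetical; qf, pf, mm, tie, and bq are standard dismax parameters, but the values shown are only placeholders to tune against your own schema and relevance goals.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; point this at your own Solr instance.
SOLR_SELECT = "http://localhost:8983/solr/select"


def dismax_url(user_input):
    """Build a dismax request URL for raw, user-entered text."""
    params = [
        ("defType", "dismax"),      # parse q with the dismax query parser
        ("q", user_input),          # plain user text; no Lucene query syntax needed
        ("qf", "title^3 body"),     # fields to search, with per-field boosts
        ("pf", "title^5"),          # extra boost when the terms appear close together as a phrase in title
        ("mm", "2<75%"),            # up to 2 clauses: all required; more than 2: 75% required
        ("tie", "0.1"),             # let matches in other fields add a little to the best field's score
        ("bq", "category:docs^2"),  # additive boost for a hypothetical category
    ]
    return SOLR_SELECT + "?" + urlencode(params)


print(dismax_url("apache solr tuning"))
```

Keeping the field weights and mm rule on the server side (for example as handler defaults) rather than in client code is usually easier to maintain, but building the URL explicitly makes each parameter visible.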

Sin number 2: Greed

Penny-wise and pound-foolish. This is a surprisingly common trap. Obviously not everyone has an unbounded budget, but sometimes terrible decisions are made to constrain resources, decisions that will prove more costly over time. For example:

- Refusal to add the proper amount of RAM to a server. There have been occasions when I’ve had more RAM on my Mac laptop (4GB) than some of the production Solr implementations I’ve seen. Sometimes even Solr projects at large companies have been under-funded. There will be business requirements that make high memory demands (sorting on large String fields, lots of faceting on fields with huge numbers of distinct terms, etc.) but the expectation will be that this can somehow be “made to work” with an insufficient amount of RAM and some kind of wizardry. A friend of mine has a saying, “You can’t fit 15 pounds of rice into a 10 pound bag.” By all means commit to at least acquiring the minimum adequate amount of resources.

- Insisting on running indexing and searching on the same host. One of the first recommendations we at Lucidworks often make to customers is to separate the indexing and searching processes onto (at least) two separate nodes. There are several benefits to doing this. First, the indexing and searching processes are not competing for resources (CPU, memory, etc.). Second, each node can be configured slightly differently for optimum performance; for example, the search nodes can use larger, auto-warmed caches that a dedicated indexing node does not need. Be sure to budget for adequate hardware based on your document count, index size, and expected query volume.
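For a rough sense of why sorting on a large String field (mentioned in the RAM bullet above) can eat memory, the back-of-the-envelope sketch below assumes the Lucene 2.x/3.x-era FieldCache layout for string sorting: one int ordinal per document plus one Java String per unique term. The 40-byte per-String overhead and 2 bytes per character are assumptions that vary by JVM, and the array of references is ignored, so treat the result as an order-of-magnitude estimate rather than a measurement. Later Lucene versions use different, more compact structures.

```python
def estimate_string_sort_bytes(max_doc, unique_terms, avg_term_chars,
                               string_overhead=40):
    """Rough RAM estimate for sorting on one String field.

    Assumes a Lucene 2.x/3.x-style StringIndex: an int ordinal per
    document plus one java.lang.String per unique term. The per-String
    overhead (40 bytes) and 2 bytes per char are assumptions that vary
    by JVM; the array of String references is ignored.
    """
    ordinals = 4 * max_doc                                          # int[] with one entry per document
    terms = unique_terms * (string_overhead + 2 * avg_term_chars)   # String objects for the unique values
    return ordinals + terms


estimate = estimate_string_sort_bytes(20_000_000, 10_000_000, 30)
print(f"{estimate / 1e9:.2f} GB")
```

With 20 million documents and 10 million unique 30-character values, this works out to roughly 1.08 billion bytes for a single sort field, before any faceting or other caches are counted.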

Sin number 3: Pride

Pride (for our purposes): failing to acknowledge the good work of others. An excessive love of self.

Engineers love to code. Sometimes to the point of wanting to create custom work that may have a solution in place already, just because: a) They believe they can do it better. b) They believe they can learn by going through the process. c) It “would be fun”. This is not meant to discourage new work to help out with an open-source project, to contribute bug fixes, or certainly to improve existing functionality. But be careful not to rush off and start coding before you know what options already exist. Measure twice, cut once.

- Don’t re-invent the wheel. I’ve seen developers almost look for excuses to write their own query parser or other custom component. Sometimes such effort is necessary, and luckily open-source software makes this doable in ways that would never be possible with commercial search software. But make sure you have a real need before writing custom code – at least while on the company’s dime. There is extra effort in maintaining a custom codebase and keeping it in sync with Solr, so make sure it really is the only option to solve a particular use case.

- Make use of the mailing lists and the list archives. This should be obvious, but there are still many who think that this is beneath them in some way, as if asking for help were somehow a flaw. On the other hand, when posting to the mailing lists, make efficient use of everyone’s time. Be sure to thoroughly search the list archives before posting. (Lucidworks Search Hub makes it a snap to search relevant mailing lists, documentation, blogs, javadoc, and other sources in one place.) If and when you do post a question, provide a succinct description of the problem and make it clear to others what you need. Stay on topic throughout the thread. Lucene and Solr committers and Lucidworks staff are regular participants on the mailing lists, so take advantage of this resource when you have a real need.

Sin number 4: Lust

You’ll have to grant me artistic license on this one, or else we won’t be able to keep this blog G-rated. So let’s define lust as “an unnatural craving for something to the point of self-indulgence or lunacy”. OK.

- Setting the JVM Heap size too high, not leaving enough RAM for the OS. So we finally get the RAM allocation we’ve been pining for (see: Greed) and now what do we do? We’ve got 16GB of RAM on our machine now so we allocate 15GB to the heap where Solr is running. Whoa! Time for a cold shower! Solr may be the only object of your desire, but don’t neglect the operating system. Patience and attention come into play here. Monitor the JVM under load and determine what the real memory footprint of Solr is. You’ll want the operating system to be able to cache file system data (especially the Lucene indexes) so be sure to leave enough RAM for the OS.

- Too much attention to the JVM and garbage collection. On the other hand (and in direct contrast to the last bullet point under Sloth), don’t overdo it on the JVM. There are seemingly unending ways to tweak and tune a JVM. Don’t fall into the trap of trying every arcane JVM or GC setting unless you are a JVM expert. Once you have mastered the basics of the JVM and understand the differences between the various types of garbage collectors, for the most part you shouldn’t have to get too creative. Don’t just toss “-XX:CMSIncrementalDutyCycleMin=10” into the mix out of curiosity.

- Trying to “push the envelope” on auto-warm counts. How warm is too warm? We all want the fastest search response times possible, and auto-warming Solr’s queryResultCache and filterCache is an important tool for keeping responses to the most popular queries as fast as possible. But let’s not get carried away. Excessive auto-warm counts can cause long warm-up times for the caches, which in turn lengthen the warm-up time of each new IndexSearcher after every commit. Ask yourself if you really need to auto-warm the top 5,000 queries every time a commit occurs. It’s very easy to get obsessed with this and find that the time to warm up a new IndexSearcher extends beyond your commit cycle, which can lead to all kinds of odd behavior, including OutOfMemoryErrors, as several new searchers end up warming at the same time, each with its own caches. Make sure you know the average warm-up times for all of your caches and your new IndexSearchers. It’s actually best to start with more modest auto-warm counts and work up if necessary, rather than start out too high. Create reports or database records of user queries by parsing your production log files, and use that data to get a feel for which queries are the most popular. Sometimes just setting an auto-warm count to 100 is plenty. But it takes time and effort to find the sweet spot between caches that are too cool and caches that are “en fuego”.
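The heap-sizing advice in the first bullet above can be expressed as simple arithmetic. This hypothetical helper starts from the peak heap you actually observed under load, adds a safety margin, reserves some memory for the OS itself, and checks whether what remains could hold the index in the file system cache. The 25% headroom and 1 GB OS reserve are illustrative assumptions, not Solr recommendations.

```python
GB = 1024 ** 3


def plan_heap(total_ram, observed_peak_heap, index_size,
              headroom=1.25, os_reserve=1 * GB):
    """Suggest a heap size and check what is left for the OS file cache.

    headroom and os_reserve are illustrative assumptions.
    """
    heap = int(observed_peak_heap * headroom)       # observed peak plus a safety margin
    left_for_cache = total_ram - heap - os_reserve  # what the OS can use to cache index files
    return heap, left_for_cache, left_for_cache >= index_size


heap, cache, fits = plan_heap(16 * GB, 5 * GB, 8 * GB)
print(heap / GB, cache / GB, fits)
```

On a 16 GB machine with a 5 GB observed peak and an 8 GB index, this suggests a heap of about 6.25 GB and leaves roughly 8.75 GB for the OS, enough to cache the whole index. A 15 GB heap on the same machine would leave nothing for the file system cache.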
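The auto-warm bullet above suggests mining production logs for popular queries. Here is a minimal sketch of that idea that counts the q parameter of search requests. It assumes the classic Solr request log line format shown in the comment (webapp=/solr path=/select params={...}); log formats differ between versions and logging setups, so adjust the regular expression to match yours. It also does not handle parameter values that themselves contain a closing brace, such as local params. The counts show how concentrated your traffic is, which can inform a modest autowarmCount or a short list of static warming queries.

```python
import re
from collections import Counter
from urllib.parse import parse_qs

# Assumed log line format, e.g.:
# INFO: [] webapp=/solr path=/select params={q=ipod&rows=10} hits=12 status=0 QTime=3
SELECT_PARAMS = re.compile(r"path=/select params=\{(.*?)\}")


def top_queries(log_lines, n=100):
    """Count the q parameter of /select requests and return the n most common."""
    counts = Counter()
    for line in log_lines:
        match = SELECT_PARAMS.search(line)
        if match is None:
            continue  # not a search request (updates, admin calls, stack traces, ...)
        for q in parse_qs(match.group(1)).get("q", []):
            counts[q] += 1
    return counts.most_common(n)


sample = [
    "INFO: [] webapp=/solr path=/select params={q=ipod&rows=10} hits=12 status=0 QTime=3",
    "INFO: [] webapp=/solr path=/select params={q=ipod} hits=12 status=0 QTime=1",
    "INFO: [] webapp=/solr path=/select params={q=apple+tv&start=0} hits=4 status=0 QTime=2",
    "INFO: [] webapp=/solr path=/update params={} status=0 QTime=40",
]
print(top_queries(sample, n=2))
```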

Sin number 5: Envy

- Wanting features that other sites have, that you really don’t need. Stay focused on your business needs. Make sure you know what you really need from your search application. A common scenario on the mailing lists is one that Lucene/Solr committer Chris Hostetter calls the “XY” problem. From the Solr user mailing list: “You are dealing with ‘X’, you are assuming ‘Y’ will help you, and you are asking about ‘Y’ without giving more details about the ‘X’ so that we can understand the full issue. Perhaps the best solution doesn’t involve ‘Y’ at all”. Know what you need and keep focused on the requirements.

- Wanting to have a bigger index than the other guy. The antithesis of the “greed” issue of not allocating enough resources. “Shooting for the moon” and trying to allow for possible growth over the next 20 years. A trap for those who believe their status is determined by the size of their server farm. By all means plan ahead, but don’t expect that you can see into the future to foresee every possible scenario. Plan smartly, but don’t overdo it.

Sin number 6: Gluttony

“Staying fit and trim” is usually good practice when designing and running Solr applications. A lot of these issues cross over into the “Sloth” category, and are generally cases where the extra effort to keep your configuration and data efficiently managed is not considered important.

- Lack of attention to field configuration in the schema. Storing fields that will never be retrieved. Indexing fields that will never be searched. Storing term vectors, positions and offsets when they will never be used. Unnecessary bloat. Understand your data and your users and design your schema and fields accordingly.

- Unexamined queries that are redundant or inefficient. I’ve seen cases where queries have been generated programmatically with a lot of redundancy and nonsensical logic. Take advantage of filter queries whenever possible: each fq is cached independently in the filterCache, can be reused across different queries, and does not affect relevance scoring. For example, if you have a query like this – &q=content:solr AND datasource:wiki AND category:search AND language:en – use filter queries on fields where it makes sense: &q=content:solr&fq=datasource:wiki&fq=category:search&fq=language:en.
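The schema bullet above lends itself to a quick audit. This sketch compares each field’s explicit stored, indexed, and termVectors attributes against lists of fields your application actually retrieves and searches. The sample schema and the retrieved/searched sets are hypothetical, and the check only sees attributes written on the field itself (values inherited from the fieldType are ignored). Remember too that fields used for sorting, faceting, or filtering count as “searched”, since they typically need to be indexed.

```python
import xml.etree.ElementTree as ET

# Hypothetical schema.xml fragment for illustration.
SAMPLE_SCHEMA = """
<schema name="example" version="1.2">
  <fields>
    <field name="id" type="string" indexed="true" stored="true"/>
    <field name="title" type="text" indexed="true" stored="true"/>
    <field name="body" type="text" indexed="true" stored="true"
           termVectors="true" termPositions="true" termOffsets="true"/>
    <field name="internal_code" type="string" indexed="true" stored="true"/>
  </fields>
</schema>
"""


def audit_fields(schema_xml, retrieved, searched, needs_term_vectors=()):
    """List fields whose explicit options look like unnecessary bloat."""
    root = ET.fromstring(schema_xml)
    report = []
    for field in root.iter("field"):
        name = field.get("name")
        if field.get("stored") == "true" and name not in retrieved:
            report.append(f"{name}: stored but never retrieved")
        if field.get("indexed") == "true" and name not in searched:
            report.append(f"{name}: indexed but never searched")
        if field.get("termVectors") == "true" and name not in needs_term_vectors:
            report.append(f"{name}: term vectors stored but unused")
    return report


for line in audit_fields(SAMPLE_SCHEMA,
                         retrieved={"id", "title"},
                         searched={"id", "title", "body"}):
    print(line)
```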
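The filter-query rewrite in the bullet above can be automated for simple cases. This sketch splits a flat conjunction into one main q plus separate fq parameters for the fields you choose, so each filter can be cached on its own. It is deliberately naive: it assumes clauses joined only by a top-level AND, with no OR, NOT, or parentheses, and the set of filter fields is something you would decide per application.

```python
from urllib.parse import urlencode


def split_filters(query, filter_fields):
    """Turn a flat 'a AND b AND c' query into one q plus fq params.

    Naive: assumes only top-level AND between field:value clauses,
    with no OR, NOT, or parentheses.
    """
    main, filters = [], []
    for clause in query.split(" AND "):
        field = clause.split(":", 1)[0]
        (filters if field in filter_fields else main).append(clause)
    # Fall back to match-all if every clause turned into a filter.
    params = [("q", " AND ".join(main) or "*:*")]
    params += [("fq", f) for f in filters]
    return urlencode(params)


print(split_filters(
    "content:solr AND datasource:wiki AND category:search AND language:en",
    {"datasource", "category", "language"},
))
```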

Sin number 7: Wrath

While wrath is usually considered to be synonymous with anger, let’s use an older definition here: “a vehement denial of the truth, both to others and in the form of self-denial, impatience.”

- Assuming you will never need to re-index your data. It’s easy to focus on schema design, configuration, deployment, scaling issues, performance, and relevance tuning, while neglecting to consider how to re-create your index in the event of a disaster, major or minor. One step that should never be omitted from your planning is deciding how you will re-create your index in the case of a hardware failure. If you are replicating from a master to one or more slaves, consider having an extra slave that might not be used for searching but can receive replications of the index to serve as a backup to the master. If feasible, back up your index data to other storage media. At the very least, if your index is small enough to re-create without too much effort if it is deleted or lost, make sure you have a plan and procedures in place so you can re-index quickly.

- Rushing to production. Of course we all have deadlines, but you only get one chance to make a first impression. Years ago I was part of a project where we released our search application prematurely (ahead of schedule) because the business decided it was better to have something in place rather than not have a search option. We developers felt that, with another four weeks of work we could deliver a fully-ready system that would be an excellent search application. But we rushed to production with some major flaws. Customers of ours were furious when they searched for their products and couldn’t find them. We developed a bad reputation, angered some business partners, and lost money just because it was deemed necessary to have a search application up and running four weeks early.
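For the backup suggestion in the re-indexing bullet above, Solr’s ReplicationHandler accepts a command=backup request that asks a core to write a snapshot of its index. The sketch below only builds and sends that request; it assumes the handler is registered at /replication and uses a hypothetical master host name. Copying the resulting snapshot to other storage media is left to your own tooling.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def backup_url(solr_base):
    """Build the ReplicationHandler backup URL (assumes the /replication path)."""
    return f"{solr_base.rstrip('/')}/replication?" + urlencode({"command": "backup"})


def trigger_backup(solr_base, timeout=30):
    """Send the backup command and return the HTTP status code."""
    with urlopen(backup_url(solr_base), timeout=timeout) as resp:
        return resp.status


# Hypothetical master host; only builds the URL, does not contact a server.
print(backup_url("http://master:8983/solr/"))
```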

So keep it simple, stay smart, stay up to date, and keep your search application on the straight-and-narrow. Seek (intelligently) and ye shall find.

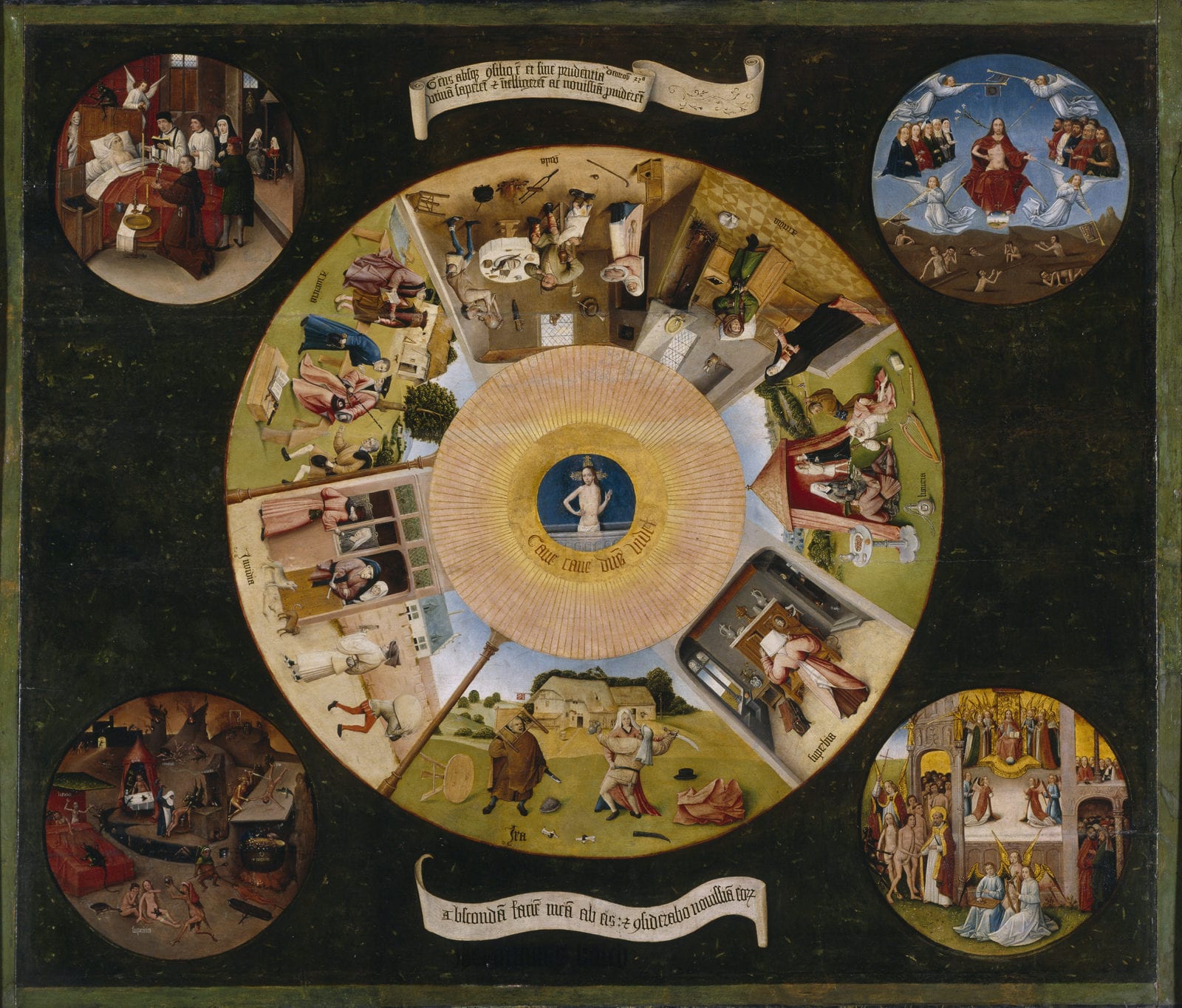

Seven Deadly Sins painting by Hieronymus Bosch
