Getting Started with Lucene Setup

Apache Lucene is a fast, full-featured, full-text search library used in a large number of production environments. In this article, Grant Ingersoll, Lucene committer and the creator of the Lucene Boot Camp training program, walks you through the basic concepts of Lucene and shows you how to leverage the Lucene API to build full-featured search capabilities for your next application.

Hello, Lucene

Apache Lucene is a Java-based, high-performance library that enables developers to easily add search capabilities to applications. Lucene has been used in many different applications, ranging from large-scale Internet search over hundreds of millions of documents, to e-commerce storefronts serving large volumes of users, to embedded devices.

A Brief History of Lucene

Lucene was originally created by Doug Cutting in 1997 and was made available on SourceForge in 2000. After transitioning to the Apache Software Foundation in 2001, Lucene has seen a steady increase in contributions, committers, features, and adoption into applications. Lucene is used in numerous production and research systems, as evidenced by both the number of downloads and the number of users who have self-identified.

Search 101

Before you start using Lucene, it is important to understand some fundamentals of how a search system works and how to make content findable. While you can build a Lucene-based search application without this knowledge, the following brief introduction to search concepts will help you get more out of Lucene, which ultimately results in a better search application.

At its most basic, a search application is responsible for four things:

- Indexing Content – The process of adding content into the system such that it can be searched.

- Gathering and representing the user’s input – While the likes of Google and Yahoo! mainly provide a simple text area for inputting keywords and phrases, search applications can “go beyond the box” and offer other input features that allow the user to build richer queries. For example, date ranges, collection filters and other user interface widgets may be used to more narrowly focus the search, thus improving both speed and quality of results.

- Searching – The process of identifying and ranking documents in relation to the user’s query.

- Results Display – After the documents are ranked, the application must decide what features of the document should be displayed. Some applications show short titles and summaries in a ranked list, while others provide more information in rich and expressive interfaces. While clever user interfaces with lots of bells and whistles may be fun to design and build, make sure your users actually need those features. In the end, there is a lot to be said for simplicity.

Before indexing can take place, you need to understand your content, which I cover in the next section. After that, I’ll examine how users factor in, and then I’ll finish with a discussion of the search process.

Your Content

It’s often said that content is king. However, in order to leverage your content, you need to understand it. Of course, you’ve likely got thousands, if not millions, of documents, so truly understanding all of them is impossible. If it weren’t, you wouldn’t need search, right? Thus, the problem of understanding your content comes down to identifying as many features of the content as possible. Regardless of the number of documents, there are many tips and techniques that can help. I’ll cover the highlights here, but will go into more detail in my articles on Findability and Improving Relevance.

To get started understanding your content, first think about its format. If it is a file, what is its MIME type? That is, is the file text, PDF, Word, HTML or some other format? If it is anything other than plain text, the application will need to extract text from the original file in order to make it searchable. While this is outside the scope of the core Lucene capabilities, the related Apache Tika project provides extraction capabilities for many common MIME types. See committer Sami Siren’s article for information on working with Tika. If the content is in a database or some other location, determine the best way to get at it.

Once you have the ability to extract text, it is important to understand the structure and metadata of the files. To do this, I recommend sampling a variety of documents in the set and looking for features like:

- Document Structure: Does the document contain things like: a title, a body, paragraphs, authors, price, inventory, profit margin, tables or lists? You may have to do some extra work to make use of these things, but when done properly, the user experience can be that much better.

- Special terms: Are there special words in the documents that should be boosted? Words that are bold, italicized or part of links are a few examples of special terms. Phrases and proper nouns also often enhance search results.

- Synonyms and Jargon: Similar to special terms, dealing intelligently with synonyms, jargon, acronyms and abbreviations can lead to better results. You may already have a synonym list or abbreviation expansions that can be leveraged.

- Priorities/Importance: Do you have any a priori knowledge about the priority or importance of a document? For instance, maybe the document is a company-wide memo from your CEO or it is highly rated by your readers or it has a really high rank according to measures like PageRank or some other link analysis algorithm. Knowing these things can really help improve relevance.

Of course, your content may have other features that will help you improve your application. Spend the time up front to understand it, and then review it from time to time as new content is brought into the system. Once the features have been identified, you can iterate on extracting and using those features in your application. As you may have noticed, I used the word iterate in that last sentence. Often, the best way to move forward is to create a small index, run some searches, and then add to or adjust the indexing and searching processes as new features are discovered. Of course, what good is content without users? I’ll talk about how to meet your users’ needs in the next section.

Your Users

Ah, users. They are your lifeblood. At the same time, it’s often difficult to fully understand their search needs. For instance, a user often has a deep understanding of their need, but a simple search box geared towards keyword entry is ill-equipped to deal with that need. On the flip side, users sometimes know only one or two vague terms and still expect highly relevant results. Your job, of course, is to understand and meet as many of your users’ expectations as possible, all while doing it on time and within budget.

The key to successfully completing your task is to understand what kind of users you are dealing with in the first place. Designing input interfaces for highly skilled intelligence analysts is far different from designing for “Joe User” on the Internet. Intelligence analysts are often comfortable with sophisticated interfaces that they know will yield superior results, while the average user is often so used to the likes of Google and Yahoo! that anything beyond the simple search box with support for keywords and phrases is a waste of effort.

To get a better understanding of your users, focus groups and surveys (that is, interviewing your users) are helpful, as is query log analysis. Query log analysis can give you concrete data about what users searched for, but it suffers from the classic chicken-and-egg problem: you have to put up a real system before you can see the data you need to design the system. Ideally, you will be able to develop and deploy your application iteratively in order to gain more feedback. See my article on improving relevance for more thoughts on query log analysis.

How Search Works in Lucene

Search, or Information Retrieval (IR), is widely studied and practiced. Theories abound on the best ways to match a user’s information need with relevant documents. While an in-depth discussion of these theories is beyond the scope of this article (see some of the references below for places to start), it is worth discussing the basics of the theory that Lucene employs in order to gain a better understanding of what is going on under the hood.

Lucene, at its heart, implements a modified Vector Space Model (VSM) for search. In the VSM, both documents and queries are represented as vectors in an n-dimensional space, as seen in the following figure.

Figure 1. 2-Dimensional Example of Vector Space Model


In Figure 1, d_j is the j-th document in the collection, represented as a tuple of term weights (one weight per word). The input query q is mapped into the same space based on its words, and the angle between the two vectors (after the appropriate transformations) is labeled θ (theta) in the figure. Recall from high school math that the cosine of θ ranges from -1 to 1 and that the cosine of zero is one; because term weights are non-negative, in practice the value falls between 0 and 1. The cosine is computed as cos θ = (d_j · q) / (|d_j| |q|), so the smaller the angle between a document and the query, the closer the value is to one. Thus, in search, the most relevant documents for a query are those whose cosine with the query is closest to one.
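As a minimal sketch of the cosine calculation, the following stdlib-only Java class turns texts into term-count vectors and compares them. It assumes raw term counts as the weights and a lowercase, split-on-non-letters tokenizer; both are simplifying assumptions, since Lucene's real scoring uses tf-idf style weights plus norms and boosts. The class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

/** Toy vector space model (hypothetical helper, not Lucene API): each text becomes a term -> count vector. */
class VectorSpaceSketch {

    /** Builds a term-frequency vector by lowercasing and splitting on non-letters. */
    static Map<String, Integer> vectorize(String text) {
        Map<String, Integer> vector = new HashMap<>();
        for (String term : text.toLowerCase(Locale.ROOT).split("[^a-z]+")) {
            if (!term.isEmpty()) {
                vector.merge(term, 1, Integer::sum);
            }
        }
        return vector;
    }

    /** cos(theta) = (d . q) / (|d| * |q|); returns 0 if either vector is empty. */
    static double cosine(Map<String, Integer> d, Map<String, Integer> q) {
        double dot = 0;
        for (Map.Entry<String, Integer> e : q.entrySet()) {
            dot += e.getValue() * d.getOrDefault(e.getKey(), 0);
        }
        double normD = norm(d);
        double normQ = norm(q);
        return (normD == 0 || normQ == 0) ? 0 : dot / (normD * normQ);
    }

    /** Euclidean length of a term-count vector. */
    private static double norm(Map<String, Integer> v) {
        double sum = 0;
        for (int w : v.values()) {
            sum += (double) w * w;
        }
        return Math.sqrt(sum);
    }

    public static void main(String[] args) {
        Map<String, Integer> query = vectorize("Minnesota Vikings");
        String[] docs = {
            "The Minnesota Vikings made the playoffs",
            "Apache Lucene is a search library",
            "Minnesota Vikings"
        };
        for (String doc : docs) {
            System.out.printf("%.3f  %s%n", cosine(vectorize(doc), query), doc);
        }
    }
}
```

For the query "Minnesota Vikings", the identical document scores 1, the playoffs sentence scores 0.5 (it shares both query terms but also contains "the" twice, which lengthens its vector), and the Lucene sentence, which shares no terms, scores 0.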

Now, you may recall I said Lucene uses a “modified” VSM. In a pure VSM, the query is compared against every document in the collection. In practice, this is never done because it is cost prohibitive. Instead, Lucene first looks up the documents that contain the terms in the query (based on the Boolean logic of the query) and then does the relevance calculation on only those documents. This lookup is very fast in Lucene thanks to its inverted index data structure. An inverted index is very similar to the index at the back of a book: it contains a mapping from each term to the documents that contain that term, allowing fast lookup of all documents containing a term. Lucene also stores term position information, which it uses to resolve phrase queries.
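The inverted index idea, including the position information used for phrase queries, can be sketched in a few lines. This toy class is hypothetical and nothing like Lucene's actual file format: it maps each term to the documents containing it and the positions at which it occurs, intersects posting lists for a Boolean AND, and checks consecutive positions for a phrase. Only the documents returned by such a lookup would then go on to the relevance calculation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Toy positional inverted index: term -> (docId -> positions). Hypothetical; not Lucene's on-disk format. */
class PositionalIndexSketch {
    private final Map<String, Map<Integer, List<Integer>>> postings = new TreeMap<>();

    /** Lowercases, splits on non-letters, and records the position of every term occurrence. */
    void addDocument(int docId, String text) {
        int position = 0;
        for (String term : text.toLowerCase(Locale.ROOT).split("[^a-z]+")) {
            if (term.isEmpty()) {
                continue;
            }
            postings.computeIfAbsent(term, t -> new TreeMap<>())
                    .computeIfAbsent(docId, d -> new ArrayList<>())
                    .add(position++);
        }
    }

    /** All documents containing the term, as a fresh, modifiable set. */
    Set<Integer> docsFor(String term) {
        Set<Integer> result = new TreeSet<>();
        Map<Integer, List<Integer>> docs = postings.get(term);
        if (docs != null) {
            result.addAll(docs.keySet());
        }
        return result;
    }

    /** Boolean AND: intersect the posting lists; only these docs would be scored. */
    Set<Integer> and(String... terms) {
        Set<Integer> result = docsFor(terms[0]);
        for (int i = 1; i < terms.length; i++) {
            result.retainAll(docsFor(terms[i]));
        }
        return result;
    }

    /** Phrase query: every term must occur at consecutive positions in the same document. */
    Set<Integer> phrase(String... terms) {
        Set<Integer> matches = new TreeSet<>();
        for (int doc : and(terms)) {
            for (int start : postings.get(terms[0]).get(doc)) {
                boolean consecutive = true;
                for (int i = 1; i < terms.length && consecutive; i++) {
                    consecutive = postings.get(terms[i]).get(doc).contains(start + i);
                }
                if (consecutive) {
                    matches.add(doc);
                    break;
                }
            }
        }
        return matches;
    }
}
```

With "The Minnesota Vikings made the playoffs" as document 0 and "Vikings fans in Minnesota" as document 1, an AND of minnesota and vikings finds both documents, but the phrase "minnesota vikings" matches only document 0, because only there do the two terms sit at adjacent positions.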

Naturally, there is a lot more to the picture, but it’s Lucene’s responsibility, not yours (at least not initially). However, if you’re curious to know more, see the links to Lucene’s file formats, as well as the links to Information Retrieval theory. Let’s continue and get started using Lucene.

Lucene Setup

Since Lucene is a Java library and not an application, there is very little to set up other than meeting the prerequisites, downloading the JAR files and adding them to your project. Lucene really has only one prerequisite: Java JDK 1.4 or greater (although I recommend using at least JDK 1.5). You will also want to make sure you have a decent CPU and enough memory, but these factors depend on your application and are difficult to state in general terms. I’ve seen applications that use very little CPU and memory, and I’ve seen applications that always seem to want more. See Mark Miller’s article on scaling for help in understanding machine sizing issues.

For this example, I will be using Lucid’s certified version of Apache Lucene, available from the downloads section of the Lucid website. With the download in hand, start a new project in your IDE and add the Lucene libraries.

Getting Started with Lucene

In order to understand Lucene, there are a few key concepts to grasp. These concepts can be broken into four areas, which are described in the next four sections. I’ve also included several snippets of code that help illustrate the concepts.

Documents and Fields

In Lucene, almost everything revolves around the notion of a Document and its Fields. A Lucene Document is a logical grouping of some content. Documents typically represent a file or a record in a database, but this is not a requirement. Documents are made up of one or more Fields. A Field contains the actual content along with metadata describing how the content should be treated by Lucene. Fields are often things like a filename, the content of a file or a specific cell in a database. Fields can be backed by a String, Reader, byte array or a TokenStream (covered in the Analysis section). The metadata associated with a Field tells Lucene whether or not to store the raw content and whether or not to analyze the content. Lucene supports the options outlined in Lucene Field Metadata Options.

Table 1. Lucene Field Metadata Options

| Type | Description | Values |
|---|---|---|
| Storage | Lucene can be used to store the original contents of a Field, much like a database. This is independent of indexing. | e.g., Field.Store.YES, Field.Store.NO |
| Index | Indicates whether or not the content should be searchable. Also specifies whether the content should be analyzed or not. | e.g., Field.Index.ANALYZED, Field.Index.NOT_ANALYZED |

Finally, both a Document and the Fields of a Document may be boosted to indicate the importance of one Document over another or one Field over another. Lucene does not require any strict schema for a Document’s Fields. This means one Document may have twenty Fields while another Document in the same collection has only one.

As an example, if I were searching HTML pages, I likely would have one Document per HTML file, with each Document having the following Fields:

- Title – The Title of the page. I’d likely boost the title since matches in the title often indicate better results.

- Body – The main content of the page, as contained in the HTML <body> tag.

- Keywords – The list of keywords from the HTML <meta> tag, if they exist.

Example 1. Example Lucene Document Creation

// Create a simple representation of an HTML document.
// (HTMLDocument is the article's own helper class, not part of Lucene.)
HTMLDocument doc1 = new HTMLDocument("vikings.html", "Minnesota Vikings Make Playoffs!",
        "The Minnesota Vikings made the playoffs by" +
        " beating the New York Giants in the final game of the regular season.",
        "Minnesota Vikings, New York Giants, NFL");
Document vikesDoc = new Document();
// Store and index the id, but only allow exact matches (the id is not analyzed).
vikesDoc.add(new Field("id", doc1.getId(), Field.Store.YES, Field.Index.NOT_ANALYZED));
// Add the title to the Document.
Field titleField = new Field("title", doc1.getTitle(), Field.Store.YES, Field.Index.ANALYZED);
// Indicate that the title Field is more important than the other Fields.
titleField.setBoost(5);
vikesDoc.add(titleField);
vikesDoc.add(new Field("body", doc1.getBody(), Field.Store.YES, Field.Index.ANALYZED));
// Create a Field that contains both the title and the body for searching across both Fields.
// Notice the content is not stored (Field.Store.NO).
vikesDoc.add(new Field("all", doc1.getTitle() + " " + doc1.getBody(), Field.Store.NO, Field.Index.ANALYZED));

In the example, I first create a Document and then add several Fields to it. Once I have a Document, I can index it, as described in the Indexing section.

While the code to create a Document is relatively straightforward, the difficult part is knowing your content so that you can decide what Fields to add and how they should be treated (indexed, stored, boosted, etc.) While experience and an openness to experimentation can make a large difference, keep in mind that it is impossible to have a perfect retrieval engine due to the subjective nature of the problem. In the end, try to strike a balance between covering every possible search scenario and the need to finish your project!

Indexing

Indexing is the process of mapping Documents into Lucene’s internal data structures. During indexing, Lucene uses the Document, its Fields and their metadata to determine how to add the content to the index. In Lucene, an index is a collection of one or more Documents, and it is stored in a Directory, represented by the abstract Directory class. Directory implementations in Lucene can be memory-based (RAMDirectory) or file-based (FSDirectory and others). Most large indexes will require a file-based Directory implementation.

The main class for interacting with Lucene’s indexing process is the IndexWriter. It provides several constructors for instantiation and two options for adding Documents to the index. The IndexWriter can create or append to a Directory, but there can only ever be one IndexWriter open and writing to a Directory at a time. As an example of indexing, see the sample code.

Example 2. Sample IndexWriter Usage

// Instantiate a memory-based Directory. This index is transient.
RAMDirectory directory = new RAMDirectory();
// Create an Analyzer to be used by the IndexWriter for creating Tokens.
Analyzer analyzer = new StandardAnalyzer();
// Create the IndexWriter responsible for indexing the content.
// true = create a new index rather than append to an existing one.
IndexWriter writer = new IndexWriter(directory, analyzer, true, IndexWriter.MaxFieldLength.LIMITED);
// Add the Vikings document to the writer.
writer.addDocument(vikesDoc);
// Add a second document to the writer (luceneDoc is built the same way as vikesDoc; its creation is not shown here).
writer.addDocument(luceneDoc);
// For good measure, add in some other documents.
for (int i = 0; i < 10; i++) {
    Document doc = new Document();
    doc.add(new Field("id", "page_" + i + ".html", Field.Store.YES, Field.Index.NOT_ANALYZED));
    doc.add(new Field("title", "Vikings page number: " + i, Field.Store.YES, Field.Index.ANALYZED));
    doc.add(new Field("body", "This document is number: " + i + " about the Vikings.", Field.Store.YES,
            Field.Index.ANALYZED));
    doc.add(new Field("all", "Vikings page number: " + i + " This document is number: " + i
            + " about the Minnesota Vikings.", Field.Store.NO, Field.Index.ANALYZED));
    writer.addDocument(doc);
}
// Optional. Optimize the index for searching.
writer.optimize();
// Close the IndexWriter. In this scenario, content won't be searchable until close() is called.
writer.close();

In this example, I first create a RAMDirectory to store the internal Lucene data structures. Next, I create a StandardAnalyzer for use when analyzing the content. For now, don’t worry about what the StandardAnalyzer does. I’ll cover it in the analysis section below. After this, I construct an IndexWriter, passing in my Directory, Analyzer and a boolean to tell the writer to create the index (as opposed to appending to it) and finally a parameter that establishes a cap on the number of tokens (in this case, the default of 10,000) per Field.
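The practical effect of the token cap is easy to overlook: with MaxFieldLength.LIMITED, anything after the first 10,000 tokens of a Field is never indexed, so a word that appears only near the end of a very long document cannot be found. The following sketch mimics that behavior with a hypothetical helper; it splits on whitespace purely for illustration, which is not how StandardAnalyzer tokenizes. If truncation is unacceptable for your content, Lucene also provides IndexWriter.MaxFieldLength.UNLIMITED.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical sketch of a per-Field token cap in the spirit of IndexWriter.MaxFieldLength.LIMITED.
 * Whitespace tokenization is a simplification; real analyzers tokenize differently.
 */
class FieldLengthCapSketch {
    /** Returns the tokens that would be indexed; everything past maxFieldLength is silently dropped. */
    static List<String> indexableTokens(String fieldText, int maxFieldLength) {
        List<String> kept = new ArrayList<>();
        for (String token : fieldText.trim().split("\\s+")) {
            if (token.isEmpty()) {
                continue; // only happens for blank input
            }
            if (kept.size() == maxFieldLength) {
                break; // cap reached: later tokens never reach the index
            }
            kept.add(token);
        }
        return kept;
    }
}
```

Feeding it a Field of 10,001 tokens with a cap of 10,000 keeps the first 10,000 and drops the last one, which is exactly the kind of silent loss worth checking for when documents are long.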

After adding a few Documents in the example, I optimize the index and then close the IndexWriter. Closing the writer flushes all the files to the Directory and closes any internal resources in use. This will become important later when I discuss searching. As for the optimize call, Lucene writes out its data structures into small chunks, called segments. The optimize call merges these segments into a single segment. This is an optimization for search, since it is faster to open and read from a single segment. Whether or not you optimize, the search results for a given query should be exactly the same.
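To see why optimizing should not change which documents match, consider a toy model of segments. In this hypothetical sketch, each segment stores segment-local document numbers; searching several segments adds a running document base to each local number, and merging performs the same rebasing once, up front. Lucene's real merge also carries positions, stored fields, norms and deletions, none of which are modeled here.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy segments and merging (hypothetical; not Lucene's actual merge code). */
class SegmentMergeSketch {
    /** One segment: its document count plus term -> sorted, segment-local doc numbers. */
    static final class Segment {
        final int docCount;
        final Map<String, List<Integer>> postings;

        Segment(int docCount, Map<String, List<Integer>> postings) {
            this.docCount = docCount;
            this.postings = postings;
        }
    }

    /** Searches segments in order, turning local doc numbers into global ones via a running doc base. */
    static List<Integer> search(List<Segment> segments, String term) {
        List<Integer> hits = new ArrayList<>();
        int docBase = 0;
        for (Segment segment : segments) {
            for (int local : segment.postings.getOrDefault(term, Collections.<Integer>emptyList())) {
                hits.add(docBase + local);
            }
            docBase += segment.docCount;
        }
        return hits;
    }

    /** Merges all segments into one, rebasing doc numbers so every posting list stays sorted. */
    static Segment merge(List<Segment> segments) {
        Map<String, List<Integer>> merged = new TreeMap<>();
        int docBase = 0;
        for (Segment segment : segments) {
            for (Map.Entry<String, List<Integer>> entry : segment.postings.entrySet()) {
                List<Integer> target = merged.computeIfAbsent(entry.getKey(), k -> new ArrayList<>());
                for (int local : entry.getValue()) {
                    target.add(docBase + local);
                }
            }
            docBase += segment.docCount;
        }
        return new Segment(docBase, merged);
    }
}
```

Searching the two original segments and searching the single merged segment return the same global document numbers; the merged form simply saves the work of visiting several segments per query.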

That really is all you need to know to get started indexing. There are, of course, more options for tuning and tweaking the indexing process. To learn more about these and other indexing options see the resources below, in particular, Lucene In Action. For now, however, I’m going to move on to the search process.

Searching

Searching in Lucene is powered by the Searcher abstract class and its various implementations. The Searcher takes in a Query instance and returns a set of results, usually represented by the TopDocs class, although other options exist. The Query class is an abstract representation of the user’s input query. Lucene comes with many subclasses of Query, the most commonly used being TermQuery, BooleanQuery and PhraseQuery. Queries can be built up by combining instances with BooleanQuery and other similar grouping queries that I’m not going to cover here; see the Javadocs for more on the various Query implementations. Creating queries is often handled by a class that automates the process. In Lucene, the QueryParser is a JavaCC-based grammar that can be used to create Query objects from user input using a specific syntax (see Query Parser Syntax). Apache Solr also comes with some alternate query parsers, and you may well find that you need to implement your own parsing capabilities depending on the syntax you wish to support. Enough talk, though; an example will help illustrate the concepts:

Example 3. Search Example

// Construct a Searcher for searching the index.
Searcher searcher = new IndexSearcher(directory);
// The QueryParser converts a user's input query into a Query. The constructor takes
// a default search Field and the Analyzer to use to create Tokens.
QueryParser qp = new QueryParser("all", analyzer);
// parse() does the actual work of constructing a Query.
Query query = qp.parse("body:Vikings AND Minnesota");
// Execute the search, requesting at most 10 results.
TopDocs results = searcher.search(query, 10);
// printResults is the article's own helper (not part of Lucene) that prints the hits.
printResults(query, searcher, results);
query = qp.parse("Lucene");
results = searcher.search(query, 10);
printResults(query, searcher, results);
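To make the QueryParser's default-Field handling concrete, here is a deliberately tiny, hypothetical parser. It only understands a flat list of terms, each optionally prefixed with field:, that are either all joined by AND (every clause required) or all left bare (optional clauses, mirroring QueryParser's default OR behavior), and it uses lowercasing as a stand-in for the Analyzer. Lucene's real QueryParser is a JavaCC grammar that handles far more syntax.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical toy parser illustrating default-Field handling; not Lucene's QueryParser. */
class ToyQueryParser {
    private final String defaultField;

    ToyQueryParser(String defaultField) {
        this.defaultField = defaultField;
    }

    /** Returns the parsed clauses in the style of the query strings printed by the search example. */
    String parse(String input) {
        List<String> clauses = new ArrayList<>();
        boolean required = false;
        for (String token : input.trim().split("\\s+")) {
            if (token.equals("AND")) {
                required = true; // AND makes every clause required ("+")
                continue;
            }
            int colon = token.indexOf(':');
            // Terms without an explicit field go to the default field.
            String field = colon > 0 ? token.substring(0, colon) : defaultField;
            String text = colon > 0 ? token.substring(colon + 1) : token;
            // Lowercasing stands in for the Analyzer that the real QueryParser applies.
            clauses.add(field + ":" + text.toLowerCase(Locale.ROOT));
        }
        StringBuilder out = new StringBuilder();
        for (String clause : clauses) {
            if (out.length() > 0) {
                out.append(' ');
            }
            out.append(required ? "+" : "").append(clause);
        }
        return out.toString();
    }
}
```

With "all" as the default Field, this toy turns body:Vikings AND Minnesota into +body:vikings +all:minnesota and Lucene into all:lucene, the same two query strings that appear in the search output.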

As a continuation of the indexing example, I create an IndexSearcher using the same Directory I used during indexing. Next, I create a QueryParser instance, passing in the same Analyzer I used for indexing and the name of the default Field to search if no Field is specified in the query. Once I have a Query, I submit the search request and print out the results. Just for grins, I then submit another Query and print out the results. When I run this code, I get the following output (abbreviated for display):

Query: +body:vikings +all:minnesota

Total Hits: 11

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 0 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 1 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 2 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 3 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 4 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 5 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 6 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 7 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 8 about the Vikings.> >

Doc: Document<stored/uncompressed,indexed,tokenized<body:This document is number: 9 about the Vikings.> >

Query: all:lucene

Total Hits: 1

Doc: Document<stored/uncompressed,indexed,tokenized<body:Apache Lucene is a fast, Java-based search library.>>

Notice, in my example, that I did not create a new Searcher to run the second Query. This is, in fact, an important concept in Lucene. When you create a Searcher, you are opening a point-in-time snapshot of the index. (Actually, the Searcher delegates the opening of the index to the IndexReader class.) As an example, if you create a new index and add 10 documents to it at 3 PM on a given day, open a Searcher immediately afterward and then add 10,000 documents at 3:01 PM, that Searcher will have no knowledge of those 10,000 new Documents. Opening this point-in-time snapshot can be expensive, but searching is very fast once it is open, so a best practice is to open the Searcher once, cache it, and reopen it when your business needs call for it. For many applications this is completely satisfactory, since the index changes no more often than every five minutes or so, and a small delay in refresh is acceptable because users are unlikely to notice. For those with real-time needs there are techniques available, but they require additional development work and are beyond the scope of this article.
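The point-in-time behavior can be modeled with a hypothetical toy index whose openSearcher() method captures the documents visible at that moment. The copy here is only for illustration: Lucene gets the same effect cheaply because segments are written once and never modified, so an open reader simply keeps using the segments it started with.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical sketch of point-in-time searchers; not Lucene API. */
class SnapshotSketch {
    static final class ToyIndex {
        private final List<String> docs = new ArrayList<>();

        void add(String doc) {
            docs.add(doc);
        }

        /** Opening a searcher captures the documents visible right now (the expensive step in real life). */
        ToySearcher openSearcher() {
            return new ToySearcher(Collections.unmodifiableList(new ArrayList<>(docs)));
        }
    }

    static final class ToySearcher {
        private final List<String> snapshot;

        ToySearcher(List<String> snapshot) {
            this.snapshot = snapshot;
        }

        /** Counts snapshot documents containing the word; later additions to the index are invisible. */
        int count(String word) {
            int n = 0;
            for (String doc : snapshot) {
                if (doc.contains(word)) {
                    n++;
                }
            }
            return n;
        }
    }

    public static void main(String[] args) {
        ToyIndex index = new ToyIndex();
        for (int i = 0; i < 10; i++) index.add("vikings doc " + i);               // 3:00 PM
        ToySearcher cached = index.openSearcher();                                // open once, then cache
        for (int i = 0; i < 10_000; i++) index.add("vikings later doc " + i);    // 3:01 PM
        System.out.println(cached.count("vikings"));                // prints 10
        System.out.println(index.openSearcher().count("vikings"));  // prints 10010 after reopening
    }
}
```

The cached searcher keeps answering from its 10-document snapshot until the application decides to reopen, which is the trade-off between freshness and the cost of opening described above.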

Other things to notice in the search example include:

- The total number of hits is often different from the number of results returned. The total hits is a property of TopDocs (its totalHits value) and represents all matches, while the number of results returned is the length of the scoreDocs array on TopDocs.

- The input query body:Vikings AND Minnesota results in a BooleanQuery with two required terms, one against the body Field and one against the all Field, since all is the default Field. The “+” sign means the term is required to appear in the Document.
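The difference between total hits and returned results comes from collecting only the best n matches while still counting every match. A common way to do that is a size-bounded min-heap. The sketch below is a hypothetical stand-in: its Hit and Results classes are not Lucene's ScoreDoc and TopDocs, and it simply treats any positive score as a match.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical sketch of collecting the top n hits while counting every match. */
class TopNSketch {
    /** A scored hit (stand-in, not Lucene's ScoreDoc). */
    static final class Hit {
        final int doc;
        final float score;

        Hit(int doc, float score) {
            this.doc = doc;
            this.score = score;
        }
    }

    /** Total match count plus the best hits (stand-in, not Lucene's TopDocs). */
    static final class Results {
        final int totalHits;
        final List<Hit> top;

        Results(int totalHits, List<Hit> top) {
            this.totalHits = totalHits;
            this.top = top;
        }
    }

    /** scores[doc] > 0 means the doc matched; keeps the n best in a min-heap of size n. */
    static Results collect(float[] scores, int n) {
        PriorityQueue<Hit> heap = new PriorityQueue<>((a, b) -> Float.compare(a.score, b.score));
        int totalHits = 0;
        for (int doc = 0; doc < scores.length; doc++) {
            if (scores[doc] <= 0) {
                continue; // not a match
            }
            totalHits++; // every match is counted...
            heap.offer(new Hit(doc, scores[doc]));
            if (heap.size() > n) {
                heap.poll(); // ...but only the n best are kept: evict the current lowest score
            }
        }
        List<Hit> top = new ArrayList<>(heap);
        top.sort((a, b) -> Float.compare(b.score, a.score)); // best first
        return new Results(totalHits, top);
    }
}
```

With 11 matching documents and n = 10, totalHits is 11 while only 10 hits come back, the same shape as the "Total Hits: 11" output with ten documents listed.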

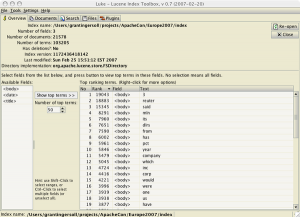

At some point during your indexing and searching development, you will want to get a better idea of what is in your index. For this, Andrzej Bialecki has developed a tool named Luke. Luke allows you to open an index and examine documents, tokens, and file formats, as well as submit searches for testing.

Figure 2. Luke Screenshot: the list of most frequently occurring terms

At this point, I’ve demonstrated the basics of Lucene’s API. From here, I’ll take a look at the role of analysis in indexing and searching. For those wanting more information on searching, see the resources below.

Analysis

Analysis is used during both indexing and search to create the tokens that are added to, or looked up in, the index. Analysis is also where operations to add, delete or modify tokens take place. At its heart, analysis is the process that transforms your content into something searchable.

The analysis process is controlled by the Analyzer class. An Analyzer is made up of a Tokenizer and zero or more TokenFilters. The Tokenizer reads the input and breaks it up into tokens. For example, Lucene ships with the WhitespaceTokenizer, which, as you might guess, breaks up the input on whitespace. After tokenization, the tokens are handed to the chain of TokenFilters, each of which can choose to add, modify or delete tokens. For instance, the LowerCaseFilter converts all the characters in a token to lowercase, which allows case-insensitive matching. As an example of a complete Analyzer, the StopAnalyzer consists of:

- LowerCaseTokenizer – Splits on non-letters and then lowercases

- StopFilter – Removes all stopwords. Stopwords are commonly occurring words that add little value to search, like “the”, “a”, and “an”.
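The Tokenizer-plus-TokenFilters structure can be sketched as plain function composition. In this hypothetical example, a LowerCaseTokenizer-like step splits on non-letters and lowercases, and a StopFilter-like step drops words from a small stopword set chosen purely for illustration (it is not Lucene's built-in list). Lucene itself streams tokens one at a time through the chain rather than building whole lists.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;
import java.util.function.UnaryOperator;

/** Hypothetical analysis chain: one tokenizer followed by zero or more filters; not Lucene API. */
class AnalysisChainSketch {
    /** Tokenizer step, like LowerCaseTokenizer: split on non-letters, then lowercase. */
    static List<String> lowerCaseTokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String token : text.split("[^\\p{L}]+")) {
            if (!token.isEmpty()) {
                tokens.add(token.toLowerCase(Locale.ROOT));
            }
        }
        return tokens;
    }

    /** Filter step, like StopFilter: drop any token found in the stopword set. */
    static UnaryOperator<List<String>> stopFilter(Set<String> stopwords) {
        return tokens -> {
            List<String> kept = new ArrayList<>();
            for (String token : tokens) {
                if (!stopwords.contains(token)) {
                    kept.add(token);
                }
            }
            return kept;
        };
    }

    /** Runs the tokenizer, then each filter in order, each one seeing the previous one's output. */
    @SafeVarargs
    static List<String> analyze(String text, UnaryOperator<List<String>>... filters) {
        List<String> tokens = lowerCaseTokenize(text);
        for (UnaryOperator<List<String>> filter : filters) {
            tokens = filter.apply(tokens);
        }
        return tokens;
    }

    public static void main(String[] args) {
        // Illustrative stopword set only; not Lucene's actual list.
        Set<String> stopwords = new HashSet<>(Arrays.asList("a", "an", "and", "at", "is", "the"));
        System.out.println(analyze("Dear Sir, At Acme Widgets, our customer's happiness is priority one.",
                stopFilter(stopwords)));
    }
}
```

Adding another stage, such as a stemmer, would just mean passing one more filter to analyze, which is the appeal of the chain design.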

As your Lucene experience grows, you will likely come up with your own extension to the Analyzer class to meet your needs, but for starters, I recommend using the StandardAnalyzer, as it does a pretty good job in most free-text situations. To see the full set of Analyzers, Tokenizers and TokenFilters available, consult the Lucene Javadocs.

To demonstrate some of the different results of analysis, I put together the following example:

Example 4. Analysis Example

import java.io.StringReader;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.SimpleAnalyzer;
import org.apache.lucene.analysis.WhitespaceAnalyzer;
import org.apache.lucene.analysis.snowball.SnowballAnalyzer; // contrib snowball module
import org.apache.lucene.analysis.standard.StandardAnalyzer;

// A string with some punctuation, capital letters, etc., to see how different analyzers treat it.
String test1 = "Dear Sir, At Acme Widgets, our customer's happiness is priority one.";
// WhitespaceAnalyzer splits on whitespace and does nothing else.
Analyzer analyzer = new WhitespaceAnalyzer();
// printTokens is the article's own helper (not part of Lucene) that prints each token.
printTokens("Whitespace", analyzer.tokenStream("", new StringReader(test1)));
// SimpleAnalyzer splits on non-letters and then lowercases.
analyzer = new SimpleAnalyzer();
printTokens("Simple", analyzer.tokenStream("", new StringReader(test1)));
// StandardAnalyzer uses a JavaCC-based grammar to determine tokenization, then lowercases and removes stopwords.
analyzer = new StandardAnalyzer();
printTokens("Standard", analyzer.tokenStream("", new StringReader(test1)));
// SnowballAnalyzer is similar to the StandardAnalyzer, but adds a step to stem the tokens.
analyzer = new SnowballAnalyzer("English");
printTokens("English Snowball", analyzer.tokenStream("", new StringReader(test1)));

In the Analysis Example, I construct several different Analyzers, and then process the same string through each of them, printing out the tokens. For instance, the output from the SimpleAnalyzer looks like:

Analyzer: Simple

Token[0] = (dear,0,4)

Token[1] = (sir,5,8)

Token[2] = (at,10,12)

Token[3] = (acme,13,17)

Token[4] = (widgets,18,25)

Token[5] = (our,27,30)

Token[6] = (customer,31,39)

Token[7] = (s,40,41)

Token[8] = (happiness,42,51)

Token[9] = (is,52,54)

Token[10] = (priority,55,63)

Token[11] = (one,64,67)

Meanwhile, the output from the StandardAnalyzer looks like:

Analyzer: Standard

Token[0] = (dear,0,4,type=<ALPHANUM>)

Token[1] = (sir,5,8,type=<ALPHANUM>)

Token[2] = (acme,13,17,type=<ALPHANUM>)

Token[3] = (widgets,18,25,type=<ALPHANUM>)

Token[4] = (our,27,30,type=<ALPHANUM>)

Token[5] = (customer,31,41,type=<APOSTROPHE>)

Token[6] = (happiness,42,51,type=<ALPHANUM>)

Token[7] = (priority,55,63,type=<ALPHANUM>)

Token[8] = (one,64,67,type=<ALPHANUM>)

Notice that the StandardAnalyzer removed stopwords like “at” and “is” and handled the apostrophe in “customer’s” appropriately, producing a single “customer” token, while the SimpleAnalyzer kept the stopwords and split “customer’s” into “customer” and “s”. Both analyzers lowercased the tokens. The full analysis output is included below.
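The two numbers in each token are character offsets into the original string: the start position and the end position (exclusive), so (sir,5,8) covers characters 5 through 7. The following hypothetical sketch reproduces the SimpleAnalyzer output above by scanning for runs of letters with Character.isLetter and lowercasing each run. It works on individual UTF-16 chars and ignores supplementary characters, which is a simplification.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical SimpleAnalyzer-style tokenizer that also records character offsets; not Lucene API. */
class OffsetTokenizerSketch {
    /** Returns tokens formatted as (text,start,end), where end is exclusive. */
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            if (!Character.isLetter(text.charAt(i))) {
                i++; // skip punctuation, spaces, apostrophes, etc.
                continue;
            }
            int start = i;
            while (i < text.length() && Character.isLetter(text.charAt(i))) {
                i++;
            }
            tokens.add("(" + text.substring(start, i).toLowerCase(Locale.ROOT) + "," + start + "," + i + ")");
        }
        return tokens;
    }

    public static void main(String[] args) {
        String test1 = "Dear Sir, At Acme Widgets, our customer's happiness is priority one.";
        List<String> tokens = tokenize(test1);
        for (int t = 0; t < tokens.size(); t++) {
            System.out.println("Token[" + t + "] = " + tokens.get(t));
        }
    }
}
```

Running main prints the same twelve lines as the Simple output above. Offsets like these are what let an application map a matching token back to the exact span of the original text, for example when highlighting.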

As I said earlier, analysis is used during both indexing and searching. On the indexing side, analysis produces tokens to be stored in the index. On the query side, analysis produces tokens to be looked up in the index. Thus, it is very important that the type of tokens produced during indexing match those produced during search. For example, if you lowercase all tokens during indexing, but not during search, then search terms with uppercase characters will not have any matches. This does not, however, mean that analysis has to be exactly the same during both indexing and searching. For instance, many people choose to add in synonyms during search, but not during indexing. Even in this case, though, the tokens are similar in form.
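A minimal, hypothetical sketch of why index-time and query-time tokens must match: the index side lowercases, so a query that skips lowercasing misses. The synonym method illustrates the query-time-only expansion mentioned above; note that the synonym list itself is kept in lowercase so its entries have the same form as the indexed tokens.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch of index-time vs. query-time analysis; not Lucene API. */
class AnalysisMismatchSketch {
    /** Index-time analysis: split on whitespace and lowercase. */
    static Set<String> indexTokens(String text) {
        Set<String> terms = new HashSet<>();
        for (String token : text.trim().split("\\s+")) {
            terms.add(token.toLowerCase(Locale.ROOT));
        }
        return terms;
    }

    /** True if every query token is indexed; lowercaseQuery controls whether query analysis matches index analysis. */
    static boolean matches(Set<String> indexed, String query, boolean lowercaseQuery) {
        for (String token : query.trim().split("\\s+")) {
            String term = lowercaseQuery ? token.toLowerCase(Locale.ROOT) : token;
            if (!indexed.contains(term)) {
                return false;
            }
        }
        return true;
    }

    /** Query-time synonym expansion: one query token matches if it or any of its synonyms was indexed. */
    static boolean matchesWithSynonyms(Set<String> indexed, String queryToken, Map<String, List<String>> synonyms) {
        String term = queryToken.toLowerCase(Locale.ROOT);
        if (indexed.contains(term)) {
            return true;
        }
        for (String synonym : synonyms.getOrDefault(term, Collections.<String>emptyList())) {
            if (indexed.contains(synonym)) {
                return true;
            }
        }
        return false;
    }
}
```

Against an index built from "The Minnesota Vikings made the playoffs", the raw query Vikings misses while the lowercased one matches, and with a synonym entry mapping vikes to vikings, the query Vikes matches too even though that word was never indexed.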

Finally, when it comes to choosing or building an Analyzer, I strongly recommend running as much data as you can stand (randomly sampled) through your Analyzer and inspecting the output. Tools like Luke can also help you understand what is in your index. Additionally, over time, take a look at your query logs and do an analysis of all queries that returned zero results and see if there were mismatches in analysis.
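Reviewing zero-result queries can start as simply as rerunning logged queries and tallying the ones with no hits. In this hypothetical sketch, the log is just a list of query strings and the hit-count function is passed in; in a real application it might run each query against your index. The report lists the failing queries most frequent first.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.ToIntFunction;

/** Hypothetical zero-result query report; the log format and hit-count function are assumptions. */
class ZeroResultReport {
    /** Counts each logged query that returned no hits, most frequent first. */
    static List<Map.Entry<String, Integer>> zeroHitQueries(List<String> loggedQueries,
                                                           ToIntFunction<String> hitCount) {
        Map<String, Integer> counts = new HashMap<>();
        for (String query : loggedQueries) {
            if (hitCount.applyAsInt(query) == 0) {
                counts.merge(query, 1, Integer::sum);
            }
        }
        List<Map.Entry<String, Integer>> report = new ArrayList<>(counts.entrySet());
        report.sort((a, b) -> Integer.compare(b.getValue(), a.getValue())); // most frequent failures first
        return report;
    }

    public static void main(String[] args) {
        // Stand-in for an index lookup that, crucially, does no lowercasing of the query.
        Set<String> indexedTerms = new HashSet<>(Arrays.asList("vikings", "minnesota", "lucene"));
        List<String> log = Arrays.asList("Vikings", "vikings", "Vikes", "lucene", "Vikes", "solr");
        System.out.println(zeroHitQueries(log, q -> indexedTerms.contains(q) ? 1 : 0));
    }
}
```

In this toy run, "Vikings" lands in the report only because the stand-in lookup skips lowercasing, which is exactly the kind of analysis mismatch this review is meant to surface; "Vikes" points instead toward a possible synonym entry.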

That should give you a good start on understanding analysis and some of the issues involved. From here, I’m going to wrap things up with some final thoughts and let you go explore Lucene.

Next Steps

In this article, I walked through the basics of Lucene and showed you how to index, analyze and search your content. Hopefully, I also planted some ideas that will help make your development with Lucene easy and effective. Since this is a Getting Started article, I’ve left off details of how to put Lucene into production. As a next step in your progression with Lucene, I recommend you try building out a simple application that searches your content. During that process, refer back here and to the resources section below to help fill in your knowledge.

Lucene Resources

- Learn how to make your content findable.

- Learn tips and techniques for improving Relevance.

- Learn how to extract common file formats from Sami Siren in Introducing Apache Tika.

- Learn more about the Apache Software Foundation.

- Visit the Lucene website to find more Lucene resources.

- Learn about Lucene File Formats.

- Learn about Lucene’s Query Parser Syntax.

- Visit the Lucene wiki for more community information.

- Learn and use Luke for debugging your Lucene development.

- Refer to the Lucene Javadocs for help in understanding the Lucene APIs.

- Learn how others are using Lucene on the Powered By Lucene page.

- Get tips and discussion on Scaling Lucene and Solr.

- Learn more about Lucene from the Lucene bible: Lucene In Action (2nd Edition) by Erik Hatcher, Mike McCandless and Otis Gospodnetic.

- Attend Grant Ingersoll’s next Lucene Boot Camp at ApacheCon.

- Learn more about Lucene and Solr terminology in Lucid’s Glossary.

Information Retrieval Theory

The following is an incomplete list of resources to help you learn more about Information Retrieval (IR).

- Wikipedia article on Vector Space Model

- SIGIR – The preeminent society for research in IR.

- Introduction to Information Retrieval by Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze

- Information Retrieval: Algorithms and Heuristics (The Information Retrieval Series, 2nd Edition) by David Grossman and Ophir Frieder

LuceneExample.java

Example 5. Lucene Example code

import org.apache.lucene.store.RAMDirectory;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.search.Searcher;
import org.apache.lucene.queryParser.QueryParser;
import org.apache.lucene.queryParser.ParseException;
import java.io.IOException;

public class LuceneExample {

    public static void main(String[] args) throws IOException, ParseException {
        //<start id="LE.doc.creation"/>
        HTMLDocument doc1 = new HTMLDocument("vikings.html", "Minnesota Vikings Make Playoffs!",
                "The Minnesota Vikings made the playoffs by" +
                " beating the New York Giants in the final game of the regular season.",
                "Minnesota Vikings, New York Giants, NFL");
        Document vikesDoc = new Document();
        vikesDoc.add(new Field("id", doc1.getId(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        Field titleField = new Field("title", doc1.getTitle(), Field.Store.YES, Field.Index.ANALYZED);
        titleField.setBoost(5);
        vikesDoc.add(titleField);
        vikesDoc.add(new Field("body", doc1.getBody(), Field.Store.YES, Field.Index.ANALYZED));
        vikesDoc.add(new Field("all", doc1.getTitle() + " " + doc1.getBody(), Field.Store.NO, Field.Index.ANALYZED));
        /*
        <calloutlist>
        <callout arearefs="LE.doc.creation.seed"><para>Create a simple representation of an HTML document.</para></callout>
        <callout arearefs="LE.doc.creation.id"><para>Store and index the id, but only allow for exact matches.</para></callout>
        <callout arearefs="LE.doc.creation.title"><para>Add the title to the <classname>Document</classname>.</para></callout>
        <callout arearefs="LE.doc.creation.title.boost"><para>Indicate that the title <classname>Field</classname> is more important than the other <classname>Field</classname>s.</para></callout>
        <callout arearefs="LE.doc.creation.all"><para>Create a <classname>Field</classname> that contains both the title and the body for searching across both <classname>Field</classname>s. Notice the content is not stored.</para></callout>
        </calloutlist>
        */
        //<end id="LE.doc.creation"/>

        HTMLDocument doc2 = new HTMLDocument("lucene.html", "Apache Lucene: The fun never stops!",
                "Apache Lucene is a fast, Java-based search library.",
                "Apache, Lucene, Java, search, information retrieval");
        Document luceneDoc = new Document();
        luceneDoc.add(new Field("id", doc2.getId(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        titleField = new Field("title", doc2.getTitle(), Field.Store.YES, Field.Index.ANALYZED);
        titleField.setBoost(5);
        luceneDoc.add(titleField);
        luceneDoc.add(new Field("body", doc2.getBody(), Field.Store.YES, Field.Index.ANALYZED));
        luceneDoc.add(new Field("all", doc2.getTitle() + " " + doc2.getBody(), Field.Store.NO, Field.Index.ANALYZED));

        //<start id="idx"/>
        RAMDirectory directory = new RAMDirectory();
        Analyzer analyzer = new StandardAnalyzer();
        IndexWriter writer = new IndexWriter(directory, analyzer, true, IndexWriter.MaxFieldLength.LIMITED);
        writer.addDocument(vikesDoc);
        writer.addDocument(luceneDoc);
        for (int i = 0; i < 10; i++) {
            Document doc = new Document();
            doc.add(new Field("id", "page_" + i + ".html", Field.Store.YES, Field.Index.NOT_ANALYZED));
            doc.add(new Field("title", "Vikings page number: " + i, Field.Store.YES, Field.Index.ANALYZED));
            doc.add(new Field("body", "This document is number: " + i + " about the Vikings.", Field.Store.YES,
                    Field.Index.ANALYZED));
            doc.add(new Field("all", "Vikings page number: " + i + " This document is number: " + i
                    + " about the Minnesota Vikings.", Field.Store.NO, Field.Index.ANALYZED));
            writer.addDocument(doc);
        }
        writer.optimize();
        writer.close();
        /*
        <calloutlist>
        <callout arearefs="idx.ram"><para>Instantiate a memory-based <classname>Directory</classname>. This index is transient.</para></callout>
        <callout arearefs="idx.analyzer"><para>Create an <classname>Analyzer</classname> to be used by the <classname>IndexWriter</classname> for creating <classname>Token</classname>s.</para></callout>
        <callout arearefs="idx.index.writer"><para>Create the <classname>IndexWriter</classname> responsible for indexing the content.</para></callout>
        <callout arearefs="idx.vikes"><para>Add the Vikings document to the writer.</para></callout>
        <callout arearefs="idx.lucene"><para>Add a second document to the writer.</para></callout>
        <callout arearefs="idx.other"><para>For good measure, add in some other documents.</para></callout>
        <callout arearefs="idx.optimize"><para>Optional. Optimize the index for searching.</para></callout>
        <callout arearefs="idx.close"><para>Close the IndexWriter. Content won't be searchable until close() is called (in this scenario).</para></callout>
        </calloutlist>
        */
        //<end id="idx"/>

        //<start id="search"/>
        Searcher searcher = new IndexSearcher(directory);
        QueryParser qp = new QueryParser("all", analyzer);
        Query query = qp.parse("body:Vikings AND Minnesota");
        TopDocs results = searcher.search(query, 10);
        printResults(query, searcher, results);
        query = qp.parse("Lucene");
        results = searcher.search(query, 10);
        printResults(query, searcher, results);
        // Release the searcher's resources once we are done querying.
        searcher.close();
        /*
        <calloutlist>
        <callout arearefs="search.srchr"><para>Construct a <classname>Searcher</classname> for searching the index.</para></callout>
        <callout arearefs="search.qp"><para>The Lucene <classname>QueryParser</classname> can be used for converting a user's input query into a <classname>Query</classname>. The constructor takes a default search <classname>Field</classname> and the <classname>Analyzer</classname> to use to create <classname>Token</classname>s.</para></callout>
        <callout arearefs="search.parse"><para>The <methodname>parse</methodname> method does the actual work of constructing a <classname>Query</classname>.</para></callout>
        <callout arearefs="search.search"><para>Execute the search, requesting at most 10 results.</para></callout>
        </calloutlist>
        */
        //<end id="search"/>
    }

    private static void printResults(Query query, Searcher searcher, TopDocs results) throws IOException {
        System.out.println("Query: " + query);
        System.out.println("Total Hits: " + results.totalHits);
        for (int i = 0; i < results.scoreDocs.length; i++) {
            // Load the stored fields of each matching document by its internal doc number.
            System.out.println("Doc: " + searcher.doc(results.scoreDocs[i].doc));
        }
    }
}

Note: This example contains comments that are used by an automated document generation system, so please ignore tags like <callout> and <co>, etc. To compile it, you will need the lucene-core-2.4.0.jar library.
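Conceptually, the IndexWriter and QueryParser calls in LuceneExample build and then query an inverted index. The sketch below is a toy model of that idea in plain Java, not Lucene's implementation: it keeps one term-to-document-ids map per field, uses a hypothetical lowercase-and-split `analyze` method in place of StandardAnalyzer (so it does not remove stopwords), ANDs clauses together, and sends unprefixed clauses to a default field the way the example's QueryParser uses "all". Scoring and the title boost are ignored entirely.

```java
import java.util.*;

// Toy model, NOT Lucene's implementation: one inverted index per field,
// mapping each lowercased term to the sorted ids of documents containing it.
public class ToyInvertedIndex {
    private final Map<String, Map<String, Set<String>>> postings = new HashMap<>();

    // Hypothetical stand-in for an Analyzer: lowercase, split on non-alphanumerics.
    static List<String> analyze(String text) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}]+")) {
            if (!t.isEmpty()) terms.add(t);
        }
        return terms;
    }

    // Analyze every field value and record the document id under each term.
    void addDocument(String id, Map<String, String> fields) {
        for (Map.Entry<String, String> field : fields.entrySet()) {
            Map<String, Set<String>> terms =
                postings.computeIfAbsent(field.getKey(), k -> new HashMap<>());
            for (String term : analyze(field.getValue())) {
                terms.computeIfAbsent(term, k -> new TreeSet<>()).add(id);
            }
        }
    }

    // ANDs together single-term clauses such as "body:Vikings" or "Minnesota";
    // a clause without a "field:" prefix is looked up in defaultField.
    Set<String> searchAll(String defaultField, String... clauses) {
        Set<String> result = null;
        for (String clause : clauses) {
            int colon = clause.indexOf(':');
            String field = colon >= 0 ? clause.substring(0, colon) : defaultField;
            List<String> terms = analyze(colon >= 0 ? clause.substring(colon + 1) : clause);
            Set<String> docs = terms.isEmpty() ? Set.of()
                : postings.getOrDefault(field, Map.of()).getOrDefault(terms.get(0), Set.of());
            if (result == null) result = new TreeSet<>(docs);
            else result.retainAll(docs); // intersection implements AND
        }
        return result == null ? new TreeSet<>() : result;
    }

    // Title, body, and a combined "all" field, as in LuceneExample.
    static Map<String, String> fields(String title, String body) {
        return Map.of("title", title, "body", body, "all", title + " " + body);
    }

    // Mirrors the documents that LuceneExample adds to its index.
    static ToyInvertedIndex buildExampleIndex() {
        ToyInvertedIndex index = new ToyInvertedIndex();
        index.addDocument("vikings.html", fields("Minnesota Vikings Make Playoffs!",
            "The Minnesota Vikings made the playoffs by beating the New York Giants"
            + " in the final game of the regular season."));
        index.addDocument("lucene.html", fields("Apache Lucene: The fun never stops!",
            "Apache Lucene is a fast, Java-based search library."));
        for (int i = 0; i < 10; i++) {
            index.addDocument("page_" + i + ".html", Map.of(
                "title", "Vikings page number: " + i,
                "body", "This document is number: " + i + " about the Vikings.",
                "all", "Vikings page number: " + i + " This document is number: " + i
                    + " about the Minnesota Vikings."));
        }
        return index;
    }

    public static void main(String[] args) {
        ToyInvertedIndex index = buildExampleIndex();
        System.out.println(index.searchAll("all", "body:Vikings", "Minnesota"));
        System.out.println(index.searchAll("all", "Lucene"));
    }
}
```

On these documents, the equivalent of `body:Vikings AND Minnesota` matches vikings.html plus the ten generated pages, while `Lucene` matches only lucene.html. Unlike Lucene, the toy returns an unranked set.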

AnalysisExample.java

Example 6. Analysis Example Code

import java.io.IOException;
import java.io.StringReader;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.SimpleAnalyzer;
import org.apache.lucene.analysis.Token;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.WhitespaceAnalyzer;
import org.apache.lucene.analysis.snowball.SnowballAnalyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;

public class AnalysisExample {

    public static void main(String[] args) throws IOException {
        //<start id="analysis"/>
        String test1 = "Dear Sir, At Acme Widgets, our customer's happiness is priority one.";
        Analyzer analyzer = new WhitespaceAnalyzer();
        printTokens("Whitespace", analyzer.tokenStream("", new StringReader(test1)));
        analyzer = new SimpleAnalyzer();
        printTokens("Simple", analyzer.tokenStream("", new StringReader(test1)));
        analyzer = new StandardAnalyzer();
        printTokens("Standard", analyzer.tokenStream("", new StringReader(test1)));
        analyzer = new SnowballAnalyzer("English");
        printTokens("English Snowball", analyzer.tokenStream("", new StringReader(test1)));
        /*
        <calloutlist>
        <callout arearefs="analysis.str"><para>Create a string for analysis that has some punctuation, capital letters, etc., and see how different analyzers treat it.</para></callout>
        <callout arearefs="analysis.white"><para>Create the WhitespaceAnalyzer, which splits on whitespace and does nothing else.</para></callout>
        <callout arearefs="analysis.simple"><para>Try out the SimpleAnalyzer, which splits on non-letters and then lowercases.</para></callout>
        <callout arearefs="analysis.standard"><para>Instantiate the StandardAnalyzer, which uses a grammar-based tokenizer to determine tokenization and then lowercases and removes stopwords.</para></callout>
        <callout arearefs="analysis.snow"><para>The SnowballAnalyzer tokenizes and lowercases like the StandardAnalyzer, but adds a step to stem the tokens. Constructed this way, without a stopword list, it does not remove stopwords.</para></callout>
        </calloutlist>
        */
        //<end id="analysis"/>
    }

    private static void printTokens(String name, TokenStream tokenStream) throws IOException {
        System.out.println("Analyzer: " + name);
        // Lucene 2.4 reusable-Token API: next(Token) returns the next token, or null at the end of the stream.
        Token token = new Token();
        int i = 0;
        while ((token = tokenStream.next(token)) != null) {
            System.out.println("Token[" + i + "] = " + token);
            i++;
        }
        tokenStream.close();
    }
}

Note: This example contains comments that are used by an automated document generation system, so please ignore tags like <callout> and <co>, etc. To compile it, you will need the lucene-core-2.4.0.jar, lucene-analyzers-2.4.0.jar and lucene-snowball-2.4.0.jar libraries.
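The Whitespace and Simple analyzers differ mainly in what counts as a token character and whether tokens are lowercased. The following plain-Java sketch mimics that behavior; it is not Lucene's code, and the `Tok` record, `CharTest` interface and `tokenize` helper are hypothetical. A token is a maximal run of token characters (non-whitespace for the whitespace variant, `Character.isLetter` for the simple variant), recorded with its start offset and its exclusive end offset.

```java
import java.util.*;

// Sketch that mimics (but is not) Lucene's WhitespaceAnalyzer and SimpleAnalyzer.
public class TokenizerSketch {

    // A token's text plus its [start, end) character offsets in the input.
    record Tok(String text, int start, int end) {
        @Override public String toString() { return "(" + text + "," + start + "," + end + ")"; }
    }

    interface CharTest { boolean isTokenChar(char c); }

    // Emit each maximal run of token characters, optionally lowercased.
    static List<Tok> tokenize(String input, CharTest test, boolean lowercase) {
        List<Tok> tokens = new ArrayList<>();
        int i = 0;
        while (i < input.length()) {
            // Skip characters that cannot be part of a token.
            while (i < input.length() && !test.isTokenChar(input.charAt(i))) i++;
            int start = i;
            while (i < input.length() && test.isTokenChar(input.charAt(i))) i++;
            if (i > start) {
                String text = input.substring(start, i);
                tokens.add(new Tok(lowercase ? text.toLowerCase(Locale.ROOT) : text, start, i));
            }
        }
        return tokens;
    }

    // Whitespace-style: split on whitespace only, keep case and punctuation.
    static List<Tok> whitespace(String s) { return tokenize(s, c -> !Character.isWhitespace(c), false); }

    // Simple-style: split on non-letters, then lowercase.
    static List<Tok> simple(String s) { return tokenize(s, Character::isLetter, true); }

    public static void main(String[] args) {
        String test1 = "Dear Sir, At Acme Widgets, our customer's happiness is priority one.";
        System.out.println("Whitespace: " + whitespace(test1));
        System.out.println("Simple:     " + simple(test1));
    }
}
```

Run on the same test string, it yields the same tokens and offsets as the Whitespace and Simple output for AnalysisExample, including `Sir,` keeping its comma and `customer's` splitting into `customer` and `s`.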

Output from AnalysisExample.java

I get the following output when I run the analysis example.

Analyzer: Whitespace
Token[0] = (Dear,0,4)
Token[1] = (Sir,,5,9)
Token[2] = (At,10,12)
Token[3] = (Acme,13,17)
Token[4] = (Widgets,,18,26)
Token[5] = (our,27,30)
Token[6] = (customer's,31,41)
Token[7] = (happiness,42,51)
Token[8] = (is,52,54)
Token[9] = (priority,55,63)
Token[10] = (one.,64,68)

Analyzer: Simple
Token[0] = (dear,0,4)
Token[1] = (sir,5,8)
Token[2] = (at,10,12)
Token[3] = (acme,13,17)
Token[4] = (widgets,18,25)
Token[5] = (our,27,30)
Token[6] = (customer,31,39)
Token[7] = (s,40,41)
Token[8] = (happiness,42,51)
Token[9] = (is,52,54)
Token[10] = (priority,55,63)
Token[11] = (one,64,67)

Analyzer: Standard
Token[0] = (dear,0,4,type=<ALPHANUM>)
Token[1] = (sir,5,8,type=<ALPHANUM>)
Token[2] = (acme,13,17,type=<ALPHANUM>)
Token[3] = (widgets,18,25,type=<ALPHANUM>)
Token[4] = (our,27,30,type=<ALPHANUM>)
Token[5] = (customer,31,41,type=<APOSTROPHE>)
Token[6] = (happiness,42,51,type=<ALPHANUM>)
Token[7] = (priority,55,63,type=<ALPHANUM>)
Token[8] = (one,64,67,type=<ALPHANUM>)

Analyzer: English Snowball
Token[0] = (dear,0,4,type=<ALPHANUM>)
Token[1] = (sir,5,8,type=<ALPHANUM>)
Token[2] = (at,10,12,type=<ALPHANUM>)
Token[3] = (acm,13,17,type=<ALPHANUM>)
Token[4] = (widget,18,25,type=<ALPHANUM>)
Token[5] = (our,27,30,type=<ALPHANUM>)
Token[6] = (custom,31,41,type=<APOSTROPHE>)
Token[7] = (happi,42,51,type=<ALPHANUM>)
Token[8] = (is,52,54,type=<ALPHANUM>)
Token[9] = (prioriti,55,63,type=<ALPHANUM>)
Token[10] = (one,64,67,type=<ALPHANUM>)

Comments? Mail us at comments@lucidimagination.com
