Advanced Spell Check With Fusion 4

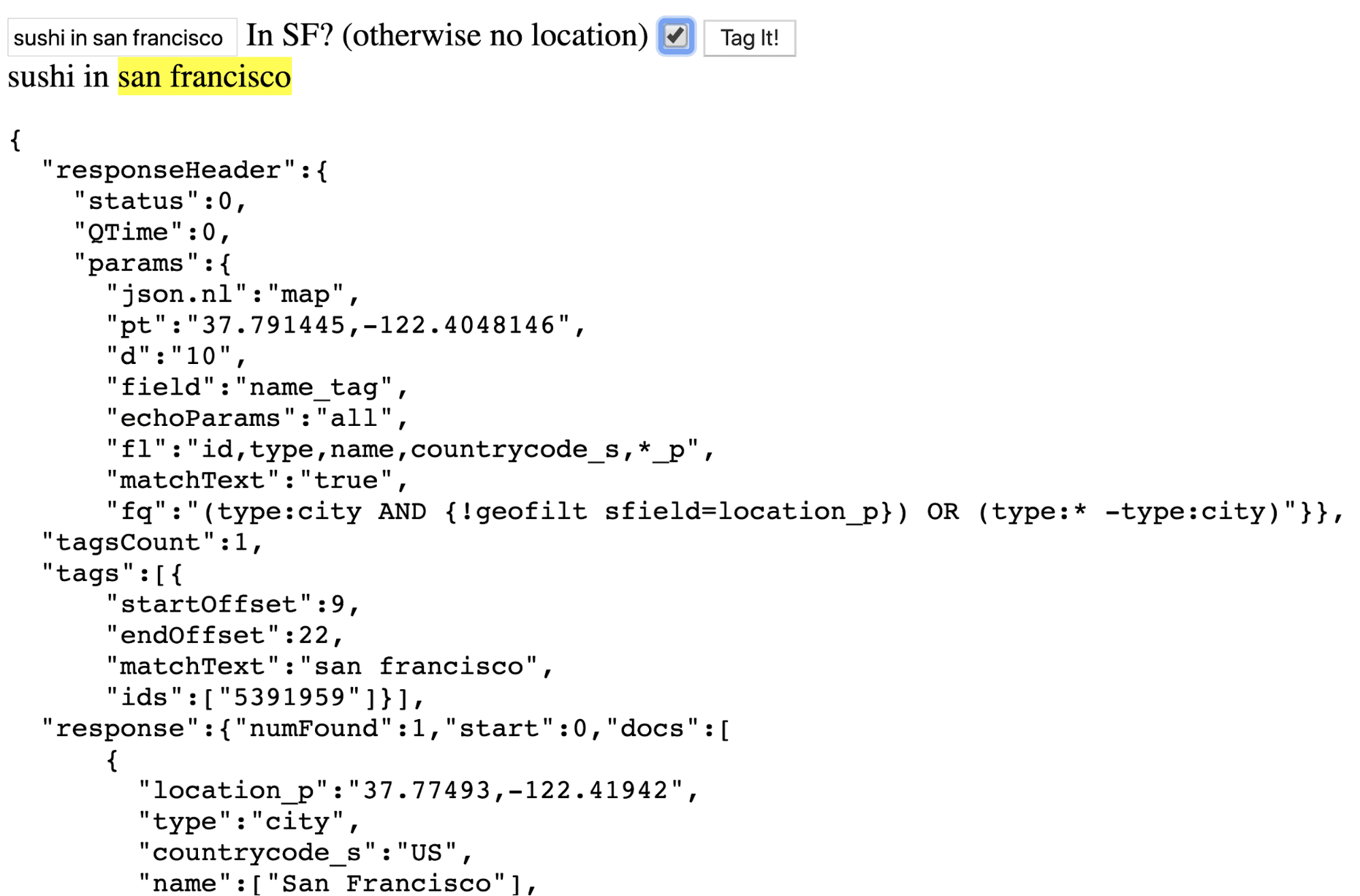

Let’s look at how to extend and improve spell checking in Fusion 4 by using the number of times words occur in queries or documents to find misspellings. For example, if two queries are spelled similarly, but one gets a lot of traffic (head) and the other gets little or no traffic (tail), then the tail query is very likely a misspelling and the head query is the correct spelling.

The “Token and phrase spell correction” job in Fusion 4 extracts tail tokens (one word) and phrases (two words) and finds similarly spelled head tokens and phrases. If several matching heads are found for a tail, the job compares them and picks the best correction using multiple configurable criteria.
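The head/tail idea can be illustrated with a small sketch. This is not the Fusion job's implementation; the function names, the thresholds (`head_min`, `tail_max`, `max_dist`), and the sample counts are all made up for illustration, and the only ranking criteria used here are edit distance and head traffic.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row with dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def suggest_corrections(query_counts, head_min=100, tail_max=5, max_dist=2):
    """Map each low-traffic (tail) query to its closest high-traffic (head) query.

    All thresholds are hypothetical defaults chosen for this sketch.
    """
    heads = {q: c for q, c in query_counts.items() if c >= head_min}
    tails = [q for q, c in query_counts.items() if c <= tail_max]
    suggestions = {}
    for tail in tails:
        # Rank candidates by smallest edit distance, then by highest traffic.
        candidates = [(edit_distance(tail, h), -count, h) for h, count in heads.items()]
        candidates = [c for c in candidates if 0 < c[0] <= max_dist]
        if candidates:
            suggestions[tail] = min(candidates)[2]
    return suggestions


# Illustrative counts only, not taken from the Kaggle dataset.
counts = {"laptop": 700, "labtop": 2, "xbox": 400}
print(suggest_corrections(counts))  # {'labtop': 'laptop'}
```

The real job weighs more criteria than this, which is why it is configurable; the sketch only shows why traffic counts make a useful signal.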

How to Run Spell Correction Jobs in Fusion 4:

We will be using an ecommerce dataset from Kaggle to show how to perform spell correction based on signals.

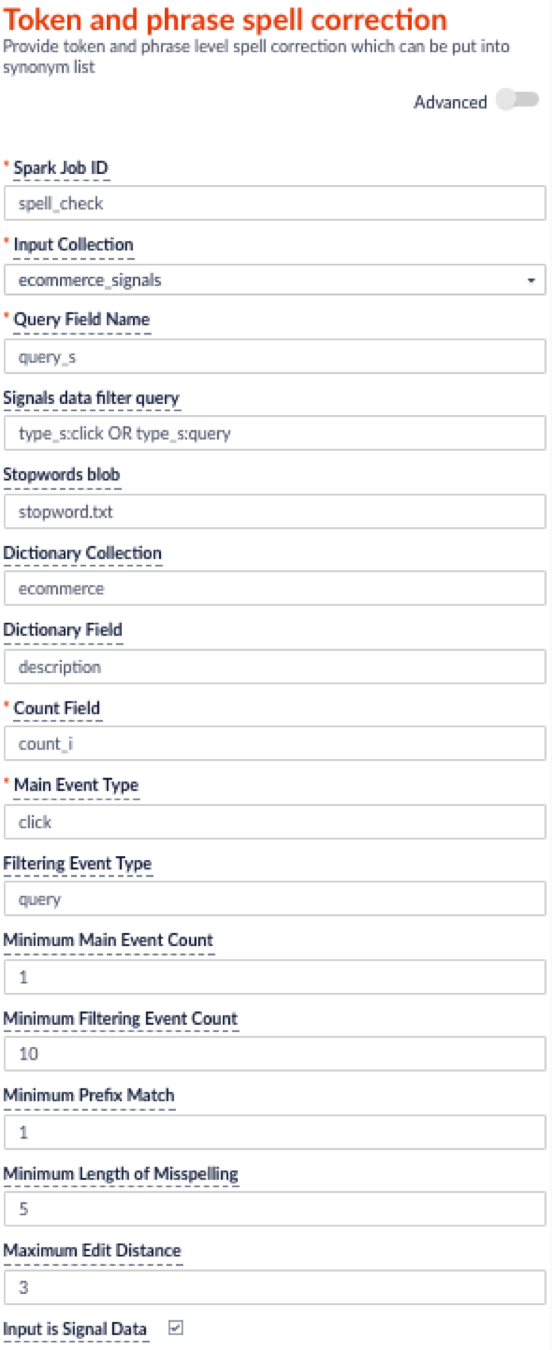

In Fusion’s jobs manager, add a new “token and phrase spell correction” job and fill in the parameters as follows:

You can run the spell checker job on two types of data: signal data or non-signal data. If you are interested in finding misspellings in queries from signals, then check the “Input is Signal Data” box.

The configuration must specify:

- Which collection contains the signals (the Input Collection parameter)

- Which field in the collection contains the query string (the Query Field Name parameter)

- Which field contains the count of the event (for example, if the signal data follows the default Fusion setup, count_i records the raw signal count and aggr_count_i records the count after aggregation)

The job allows you to analyze query performance based on two different events: a main event and a filtering (secondary) event. For example, if you specify the main event to be clicks with a minimum count of 0 and the filtering event to be queries with a minimum count of 20, then the job keeps only the queries that were searched at least 20 times and checks which of those popular queries were clicked only a few times or not at all. If you only have one event type, leave the Filtering Event Type parameter empty.

You can also upload your own dictionary to a collection to compare the spellings against, and specify its location in the Dictionary Collection and Dictionary Field parameters. For example, in ecommerce use cases, the catalog can serve as a dictionary, and the job will check that the misspellings found do not show up in the dictionary, while the corrections do.
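To make the main-event and filtering-event example concrete, here is a rough sketch of that filter over in-memory records. The record keys (`query`, `type`, `count`), the function name, and the `main_max` cutoff for "only a few clicks" are assumptions made for this illustration; they are not the job's parameter names or its actual logic.

```python
def low_performing_queries(signals, main_type="click", filter_type="query",
                           filter_min=20, main_max=2):
    """Return queries that are searched often but rarely clicked.

    `signals` is a list of dicts with hypothetical keys:
    "query" (the query string), "type" (event type), "count" (event count).
    """
    totals = {}
    for s in signals:
        per_query = totals.setdefault(s["query"], {})
        per_query[s["type"]] = per_query.get(s["type"], 0) + s["count"]

    result = []
    for query, by_type in totals.items():
        searched_enough = by_type.get(filter_type, 0) >= filter_min
        rarely_clicked = by_type.get(main_type, 0) <= main_max
        if searched_enough and rarely_clicked:
            result.append(query)
    return sorted(result)


# Made-up signals for illustration.
signals = [
    {"query": "labtop", "type": "query", "count": 25},
    {"query": "laptop", "type": "query", "count": 900},
    {"query": "laptop", "type": "click", "count": 640},
    {"query": "rare thing", "type": "query", "count": 3},
]
print(low_performing_queries(signals))  # ['labtop']
```

Here "labtop" is popular enough to pass the filtering event but has no clicks, so it becomes a misspelling candidate, while "rare thing" is dropped for lack of traffic.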

If you are interested in finding misspellings in content documents (such as descriptions) rather than queries, then uncheck the “Input is Signal Data” box. In that case, there is no need to specify the signal data parameters described above.

After specifying the configuration, click Run > Start. When the run finishes, you should see the status Success to the left of the Start button. If the run fails, check the error messages in “job history”. If the job history doesn’t give you insight into what went wrong, then you can debug by running the following command in a terminal:

tail -f var/log/api/spark-driver-default.log | grep Misspelling:

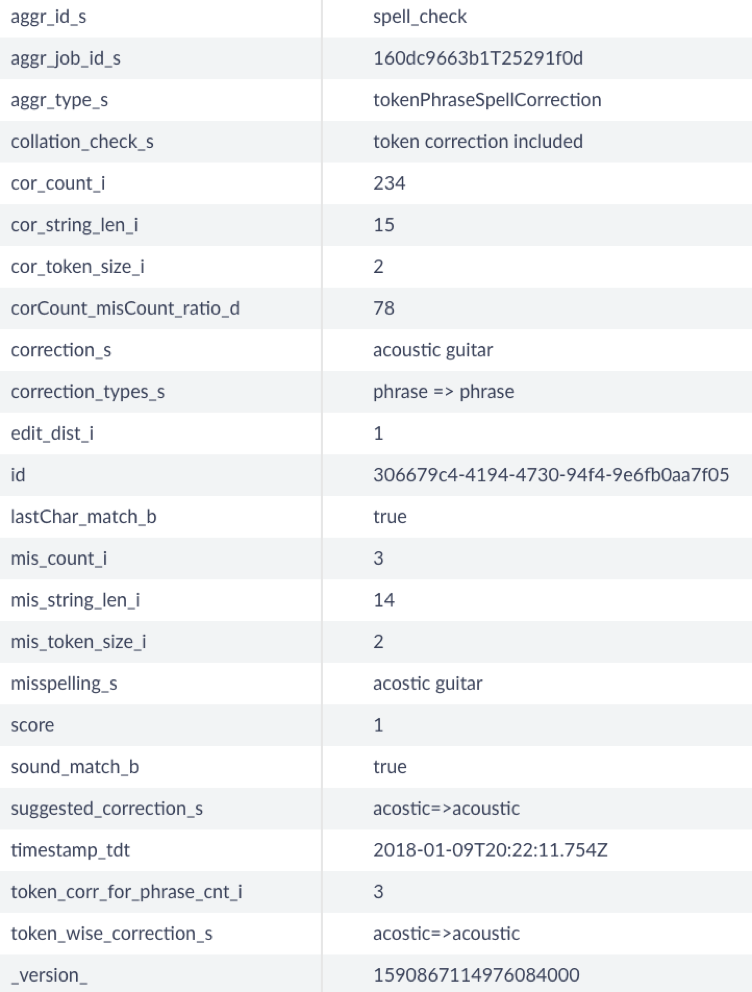

After the run finishes, misspellings and corrections will be output into the output collection. An example record is as follows:

You can export the result output into a CSV file for easy evaluation.

Usage of Misspelling Correction Results

The resulting corrections can be used in various ways. For example:

- Put misspellings into the synonym list to perform auto-correction. See the Synonyms Files manager in Fusion (https://doc.lucidworks.com/fusion/3.0/Collections/Synonyms-Files.html).

- Help evaluate and guide the spell check configuration.

- Put misspellings into typeahead or autosuggest lists.

- Perform document cleansing (for example, clean a product catalog or medical records) by mapping misspellings to corrections.

Compared with the Solr spell checker, this Fusion job has the following advantages:

- It offers the basic Solr spell checker settings, such as min prefix match, max edit distance, min length of misspelling, and count thresholds for misspellings and corrections.

- If the signals were captured after the Solr spell checker was turned on, then the misspellings found in those signals mainly identify cases where Solr made an erroneous correction or no correction at all.

- The job compares potential corrections on multiple criteria, not just edit distance. Users can easily configure the weight given to each criterion.

- Rather than using a fixed max edit distance filter, we use an edit distance threshold relative to the query length to provide more wiggle room for long queries. Specifically, we apply a filter such that only pairs with edit_distance <= query_length/length_scale are kept. For example, with length_scale=4, a query of length 4 to 7 must have an edit distance of 1 to be chosen, while a query of length 8 to 11 can have an edit distance of up to 2.

- Since the job runs offline, it eases concerns about expensive Solr spell check tasks. For example, it does not limit the maximum number of possible matches to review (the maxInspections parameter in Solr) and is able to find comprehensive lists of spelling errors resulting from misplaced whitespace (breakWords in Solr).

- It allows offline human review to make sure the changes are all correct. If you have a dictionary (such as a product catalog) to check against the list, the job will go through the result list to make sure misspellings do not exist in the dictionary and corrections do exist in the dictionary.
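The length-relative edit distance filter described in the list above, edit_distance <= query_length/length_scale, can be written out in a few lines. The function names are hypothetical; only the inequality and the length_scale=4 example come from the text.

```python
def max_allowed_edit_distance(query: str, length_scale: int = 4) -> int:
    """Largest whole-number edit distance satisfying dist <= len(query) / length_scale."""
    return len(query) // length_scale


def passes_length_filter(query: str, edit_dist: int, length_scale: int = 4) -> bool:
    """Keep a (misspelling, correction) pair only if its edit distance is small
    relative to the length of the misspelled query."""
    return edit_dist <= len(query) / length_scale


for q in ["xbow", "labtops", "hedphone", "servailance"]:
    print(q, len(q), max_allowed_edit_distance(q))
# xbow 4 1
# labtops 7 1
# hedphone 8 2
# servailance 11 2
```

Queries shorter than length_scale characters get a threshold of 0, so no correction is accepted for them under this filter.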

Misspelling Correction Results Evaluation

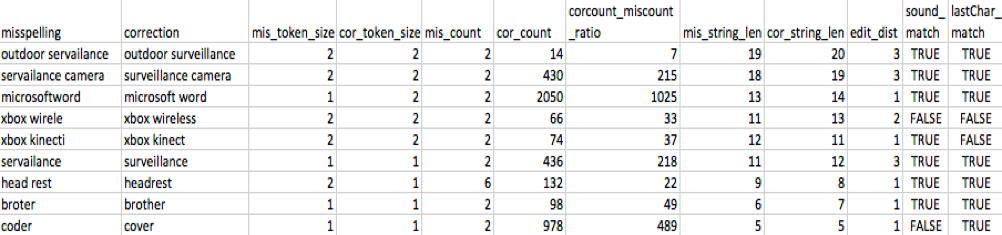

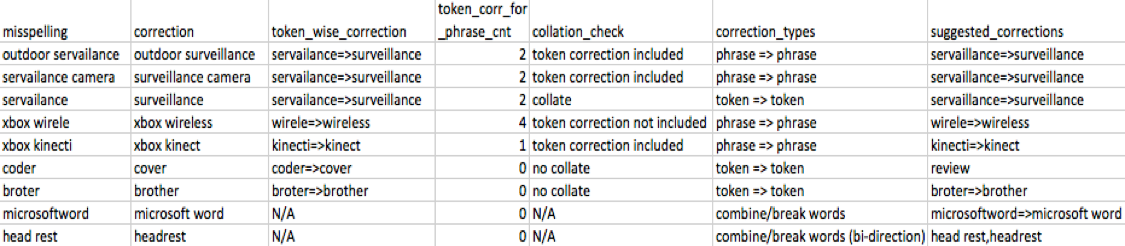

Above is an example result that has been exported to a CSV file. Several fields are provided to facilitate the review process. For example, by default, results are sorted by “mis_string_len” (descending) and “edit_dist” (ascending) to position more probable corrections at the top. Sound match and last character match are also good criteria to pay attention to. You can also sort by the ratio of correction traffic to misspelling traffic (the “corCount_misCount_ratio” field) to keep only the corrections that give a large traffic boost.
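If you prefer to review the exported CSV in a script, the default ordering and a ratio cutoff could be reproduced roughly as follows. The field names “mis_string_len”, “edit_dist”, and “corCount_misCount_ratio” come from the output described above; the `misspelling` and `correction` column names, the `min_ratio` parameter, and the sample rows are invented for this sketch.

```python
import csv
import io

# Made-up rows standing in for an exported result file.
SAMPLE_CSV = """misspelling,correction,mis_string_len,edit_dist,corCount_misCount_ratio
labtop,laptop,6,1,350.0
servailance,surveillance,11,3,316.7
hedphones,headphones,9,1,12.5
"""


def rank_for_review(csv_text: str, min_ratio: float = 0.0):
    """Drop rows below a traffic-ratio cutoff, then sort by
    mis_string_len (descending) and edit_dist (ascending)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    kept = [r for r in rows if float(r["corCount_misCount_ratio"]) >= min_ratio]
    return sorted(kept, key=lambda r: (-int(r["mis_string_len"]), int(r["edit_dist"])))


for row in rank_for_review(SAMPLE_CSV, min_ratio=100):
    print(row["misspelling"], "->", row["correction"])
# servailance -> surveillance
# labtop -> laptop
```

To read a real export, replace `io.StringIO(csv_text)` with an open file handle.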

Several additional fields (as shown in the table above) are provided to disclose relationships among the token corrections and phrase corrections to help further reduce the list. The intuition is based on the idea of a Solr collation check, that is, a legitimate token correction is more likely to show up in phrase corrections. Specifically, for phrase misspellings, the misspelled tokens are separated out and put in the “token_wise_correction” field. If the associated token correction is already included in the one-word correction list, then the “collation_check” field will be labeled as “token correction included”, and the user can choose to drop those phrase misspellings to reduce duplications.

We also count how many such phrase corrections can be solved by the same token correction and put the number into the “token_corr_for_phrase_cnt” field. For example, if both “outdoor servailance” and “servailance camera” can be solved by correcting “servailance” to “surveillance”, then this number is 2, which provides some confidence for dropping such phrase corrections and further confirms that “servailance” to “surveillance” is legitimate. You may also see cases where the token-wise correction is not included in the list. For example, “xbow” to “xbox” is not included in the list since it can be dangerous to allow an edit distance of 1 in a word of length 4. But if multiple phrase corrections can be made by changing this token, then you can add this token correction to the list. (Note, phrase corrections with a value of 1 for “token_corr_for_phrase_cnt” and with “collation_check” labeled as “token correction not included” could be potentially-problematic corrections.)
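As a rough illustration of how a count like “token_corr_for_phrase_cnt” could be derived, the sketch below extracts the single differing token from each phrase correction and counts how many phrases share it. The function names are hypothetical, and the job's actual logic may differ, for example in how it handles phrases where more than one token changes.

```python
from collections import Counter


def token_wise_correction(mis_phrase: str, cor_phrase: str):
    """Return the (misspelled_token, corrected_token) pair if the two phrases
    differ in exactly one token position, otherwise None."""
    mis_tokens, cor_tokens = mis_phrase.split(), cor_phrase.split()
    if len(mis_tokens) != len(cor_tokens):
        return None
    diffs = [(m, c) for m, c in zip(mis_tokens, cor_tokens) if m != c]
    return diffs[0] if len(diffs) == 1 else None


def count_phrases_per_token_correction(phrase_pairs):
    """Count how many phrase corrections each token correction would solve."""
    counts = Counter()
    for mis_phrase, cor_phrase in phrase_pairs:
        pair = token_wise_correction(mis_phrase, cor_phrase)
        if pair is not None:
            counts[pair] += 1
    return counts


phrase_pairs = [
    ("outdoor servailance", "outdoor surveillance"),
    ("servailance camera", "surveillance camera"),
]
print(count_phrases_per_token_correction(phrase_pairs))
# Counter({('servailance', 'surveillance'): 2})
```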

For token corrections, on the other hand, pay attention to pairs with a short string length that show no collation in phrases. It is also possible that a token correction has no corresponding phrase-level corrections in the signals because the token only shows up in single-word queries. For example, “broter” was only used as a single-word query, so no collation is found in phrases. Finally, we label misspellings caused by misplaced whitespace with “combine/break words” in the “correction_types” field. If there is a user-provided dictionary to check against, and both spellings (with and without the whitespace in the middle) are in the dictionary, we can treat these pairs as bi-directional synonyms (“combine/break words (bi-direction)” in the “correction_types” field).
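The whitespace labeling can be sketched as a simple check: two spellings that become identical once spaces are removed differ only by misplaced whitespace. The function name and the in-memory `dictionary` set are assumptions for illustration; the label strings are the ones used in the “correction_types” field.

```python
def whitespace_correction_type(misspelling: str, correction: str, dictionary=None):
    """Return a correction_types label if the pair differs only by whitespace,
    otherwise None. `dictionary` is an optional set of known-good spellings."""
    same_text = misspelling == correction
    same_without_spaces = misspelling.replace(" ", "") == correction.replace(" ", "")
    if same_text or not same_without_spaces:
        return None
    # If both forms are valid dictionary entries, treat them as two-way synonyms.
    if dictionary is not None and misspelling in dictionary and correction in dictionary:
        return "combine/break words (bi-direction)"
    return "combine/break words"


print(whitespace_correction_type("head phones", "headphones"))
# combine/break words
print(whitespace_correction_type("note book", "notebook", {"note book", "notebook"}))
# combine/break words (bi-direction)
print(whitespace_correction_type("labtop", "laptop"))
# None
```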

The good news here is that you don’t need to worry about the above rules for review. There is a field called “suggested_corrections” which explicitly provides suggestions about using token correction or the whole phrase correction. If the confidence of the correction is not high, then the job labels the pair as “review” in this field. You can pay special attention to the ones with the review labels.

By combining the Solr spell check shipped with Fusion, which tackles new and rare misspellings, with Fusion’s advanced spell check list, which improves correction accuracy for common misspellings, we can provide a better search experience for users.
